Facebook has long struggled to adequately moderate its platform for potentially violent and abusive content. And a recently leaked set of guidelines and policies governing its internal monitoring practices reveals the chaos that goes on behind the company’s efforts to curb harassment.

The Guardian has published a massive trove of internal documents used to train Facebook moderators on how to deal appropriately with potentially offensive messages and imagery on its platform.

The large collection of manuals, dubbed the Facebook Files, outlines the diverse and often conflicting set of rules the social media giant relies on when handling potentially abusive posts. These principles ultimately guide moderators when dealing with messages of violence, hate speech, terrorism or self-harm.

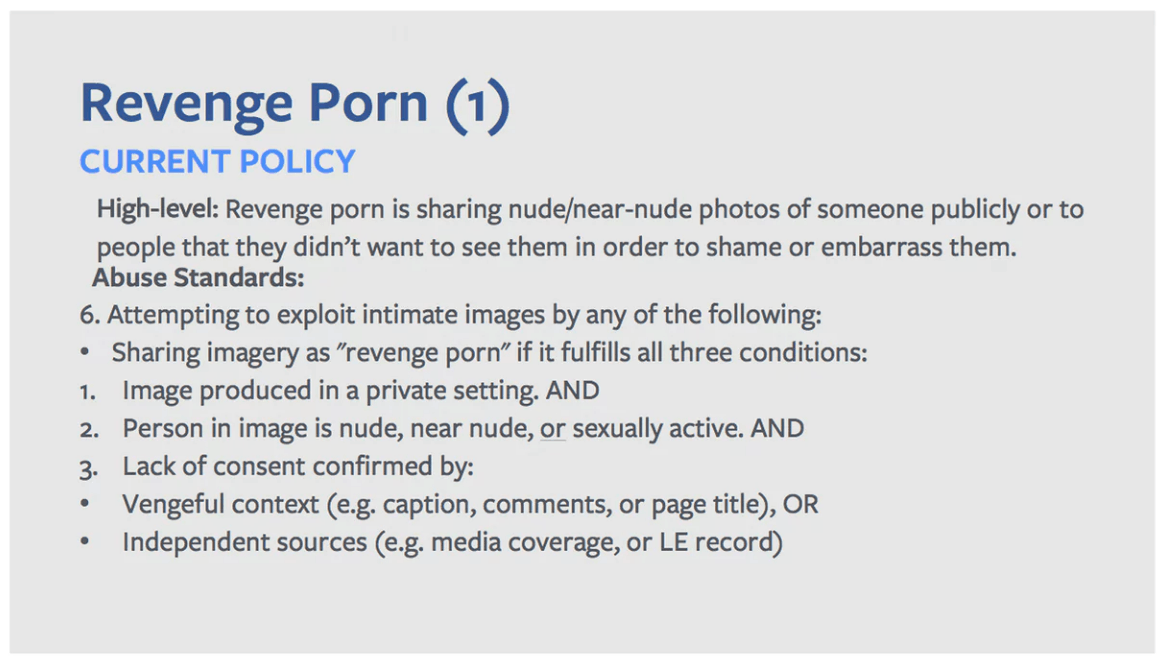

Among other things, the internal rulebook offers advice on how to address a string of new challenges – like “revenge porn” and images of animal abuse – that Facebook moderators face on a daily basis as they weed through millions of user-generated reports each week.

Beyond the complex and confusing guidelines, perhaps most troubling is how the sheer volume of reports has made it almost impossible for moderators to scrupulously ferret out abusive content. “Facebook cannot keep control of its content,” one source told The Guardian. “It has grown too big, too quickly.”

To put things into perspective: Moderators often have less than 10 seconds to decide whether a given post breaches the company’s terms of service.

Such complications present an even larger conundrum, since they make it harder to draw the line between potentially violent content and free speech.

For instance, Facebook’s policies stipulate that posts like “Someone shoot Trump” ought to be removed from the platform, since heads of state fall under a “protected category.”

By contrast, it is perfectly fine to say things like “fuck off and die” as well as “To snap a bitch’s neck, make sure to apply all your pressure to the middle of her throat” since such statements fail to qualify as “credible threats.”

The same twisted logic applies to tonnes of other categories like videos of violent deaths and photos of animal abuse, both of which can be freely shared on the platform as long as the content in question can help raise awareness of the issues it portrays.

Here are some examples provided in the leaked training manuals. Please note that a red cross indicates the post violates the website’s terms of service, and a green tick means the message is appropriate for the platform and does not require additional moderation:

To explain this reasoning, the manuals point out the following:

> We should say that violent language is most often not credible until specificity of language gives us a reasonable ground to accept that there is no longer simply an expression of emotion but a transition to a plot or design. From this perspective language such as ‘I’m going to kill you’ or ‘Fuck off and die’ is not credible and is a violent expression of dislike and frustration.

The rulebook further notes that one of the reasons people feel “safe” to “use violent language to express frustration” on the social media platform is that “they feel indifferent towards the person they are making the threats about because of the lack of empathy created by communication via devices as opposed to face to face.”

Facebook Head of Global Policy Management Monika Bickert said in a statement that the company has “a really diverse global community and people are going to have very different ideas about what is OK to share.”

“No matter where you draw the line there are always going to be some grey areas. For instance, the line between satire and humour and inappropriate content is sometimes very grey. It is very difficult to decide whether some things belong on the site or not,” she continued.

In an effort to stem the string of tragic deaths displayed on the website, CEO Mark Zuckerberg announced earlier this month that Facebook is adding 3,000 new hires to its 4,500-strong operations team to keep an eye out for violence-related reports.

Still, if the company is to succeed in its initiative to effectively counteract violence and harassment, it must also take a step back and re-evaluate the very rules and guidelines it leans on when moderating problematic content.

While Facebook is certainly right to refrain from deleting some potentially violent content in order to help raise awareness and perhaps even advance active investigations, the subsequent spread of such content could in some cases harm other individuals, and this is where things start to get blurry.

To see more details from the leaked Facebook Files, head to this page. The Guardian has also built this interactive simulator that puts you in the shoes of a real Facebook moderator, demonstrating the numerous difficulties involved in filtering through thousands of potentially offensive posts and images.
