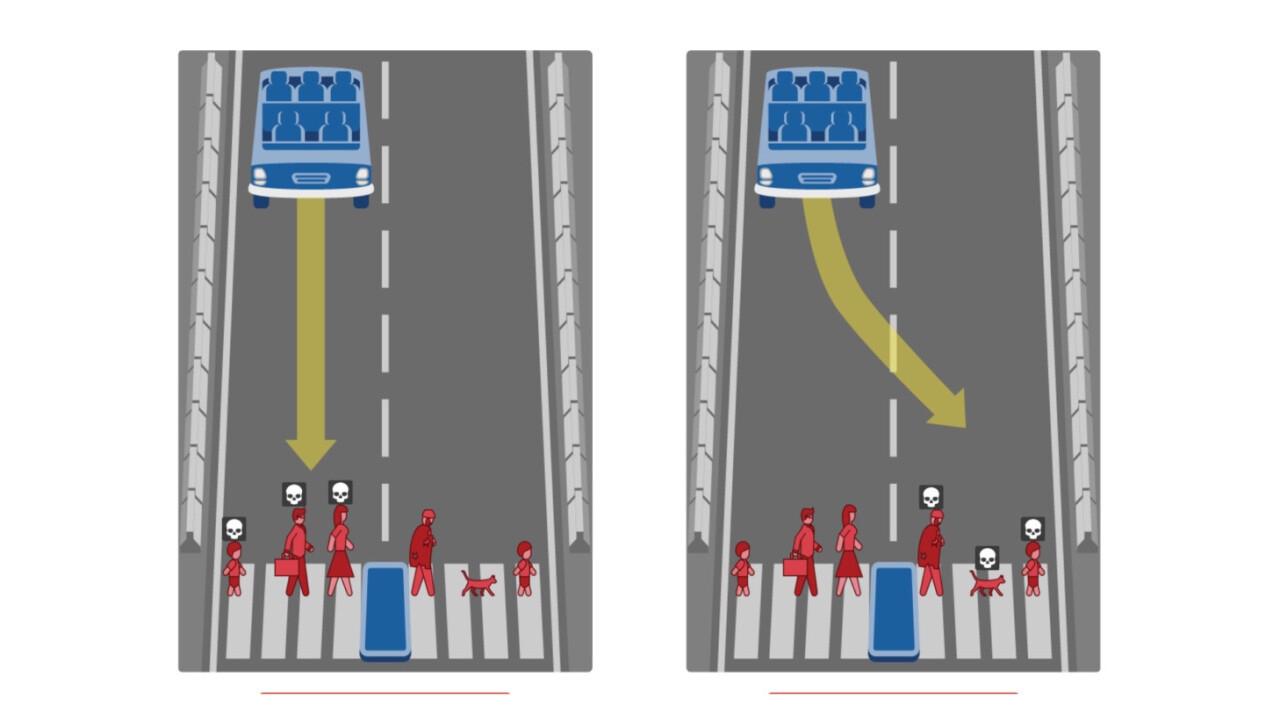

If there’s an unavoidable accident in a self-driving car, who dies? This is the question researchers at the Massachusetts Institute of Technology (MIT) want you to answer in ‘Moral Machine.’

The simple website is sort of like the famed ‘Trolley Problem’ on steroids. If you’re unfamiliar with it, Wikipedia describes the Trolley Problem as follows:

> There is a runaway trolley barreling down the railway tracks. Ahead, on the tracks, there are five people tied up and unable to move. The trolley is headed straight for them. You are standing some distance off in the train yard, next to a lever. If you pull this lever, the trolley will switch to a different set of tracks. However, you notice that there is one person on the side track. You have two options:
>
> - Do nothing, and the trolley kills the five people on the main track.
>
> - Pull the lever, diverting the trolley onto the side track where it will kill one person.
>
> Which is the most ethical choice?

It’s a simple problem with a not-so-simple answer. This two-year-old tried to solve it, and while we’d award points for creativity, the solution left a lot to be desired.

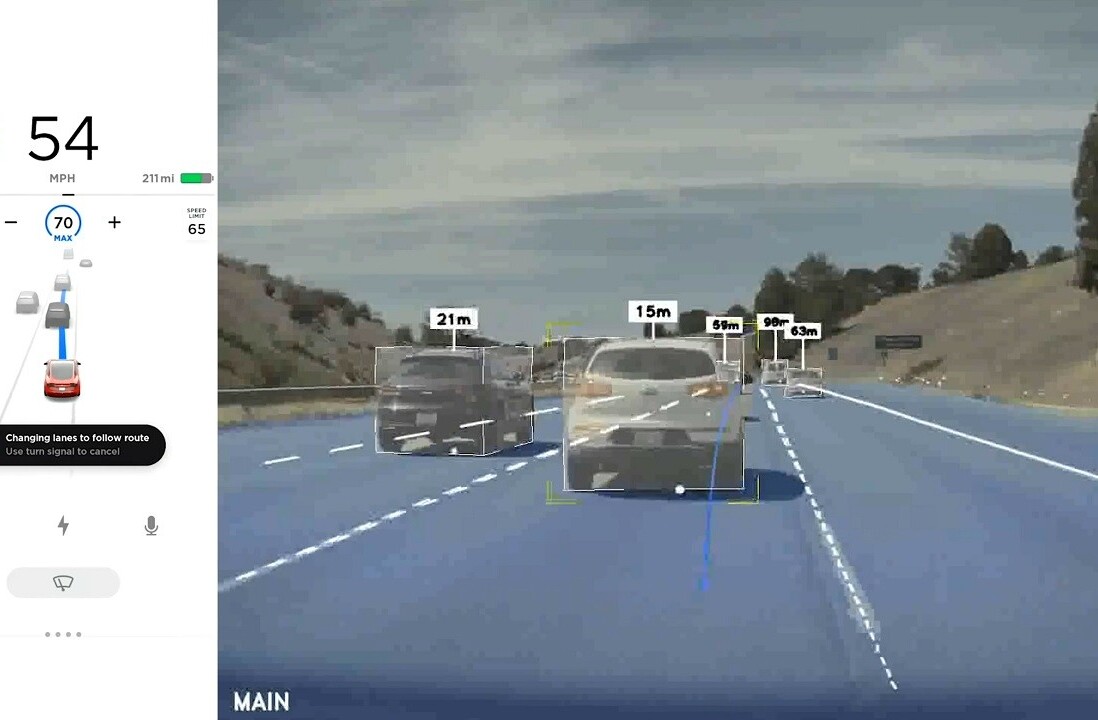

Moral Machine puts you in the shoes of a programmer who has to teach a self-driving car how to handle these rare scenarios. Of course, there’s only a tiny chance you’d ever run into one in your lifetime, but for the time being it’s a question without an answer.

In the simulation, you’ll run into interesting scenarios. Is a human’s life more valuable than an animal’s? Should you run down two criminals and two innocent people, or kill the driver and three passengers (all innocent)? Are the elderly worth less than the young? Is a female life worth more than a male’s? There’s really no right answer to any of these, but the exercise is useful for determining how people feel the car should respond to these once-in-several-lifetimes events.

If you’re so inclined, you can even pass your results along to MIT at the end of the exercise.

Self-driving cars will save lives; that’s not in question. Unfortunately, though, they’ll also be responsible for a few deaths along the way, and that’s something humans struggle with. Even if the car makes the best decision based on the data available, to humans it will always be a machine capable of killing without our input.

And that’s a problem tech really can’t solve.

via Business Insider
