Last week, Facebook CEO Mark Zuckerberg wrote a post pledging to combat misinformation about COVID-19 circulating on Facebook.

“We’ve taken down hundreds of thousands of pieces of misinformation related to COVID-19, including theories like drinking bleach cures the virus or that physical distancing is ineffective at preventing the disease from spreading,” Zuckerberg wrote.

But at the very same time, The Markup found, Facebook was allowing advertisers to profit from ads targeting people that the company believes are interested in “pseudoscience.” According to Facebook’s ad portal, the pseudoscience interest category contained more than 78 million people.

This week, The Markup paid to advertise a post targeting people interested in pseudoscience, and the ad was approved by Facebook.

Using the same tool, The Markup boosted a post targeting people interested in pseudoscience on Instagram, the Facebook-owned platform that is incredibly popular with Americans under 30. The ad was approved in minutes.

We reached out to Facebook asking about the targeting category on Monday morning. After asking for multiple extensions to formulate a response, company spokesperson Devon Kearns emailed The Markup on Wednesday evening to say that Facebook had eliminated the pseudoscience interest category.

It’s not clear how many advertisers had purchased ads targeting this category of users.

While Facebook does offer a publicly accessible library of ads run on its platform, it does not display which groups are targeted by each ad. However, we do have an idea what at least one ad targeting the group looks like, since an ad for a hat that would supposedly protect my head from cellphone radiation appeared on my Facebook feed on Thursday, April 16.

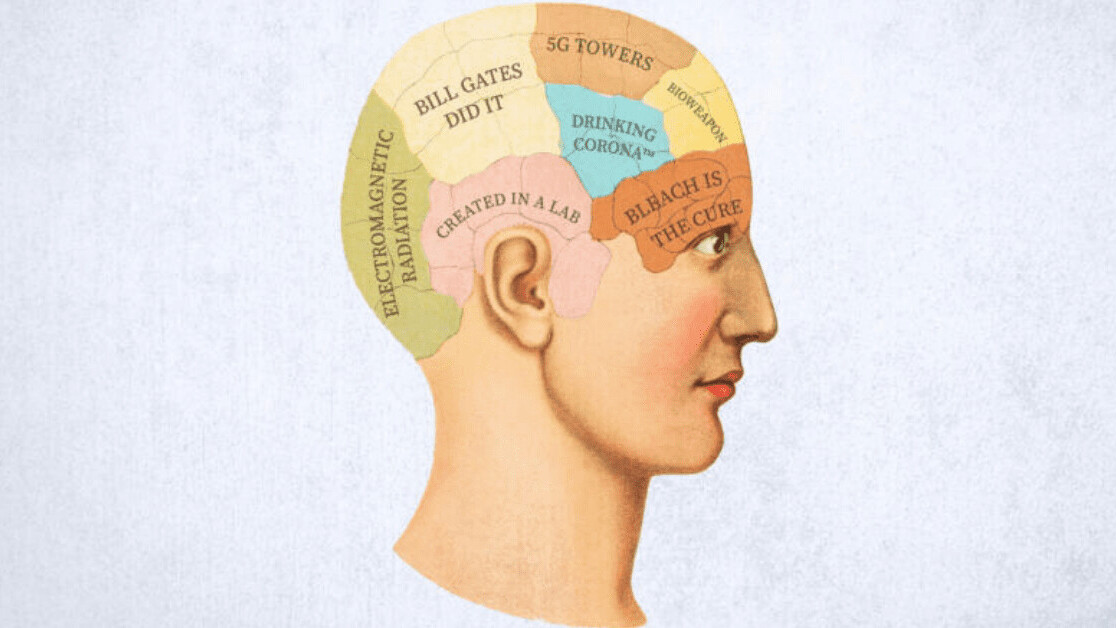

Concerns about electromagnetic radiation coming from 5G cellular infrastructure have become a major part of the conspiracy theories swirling around the origin of the coronavirus.

The “Why You’re Seeing This Ad” tab on the ad showed it was displayed because “Lambs is trying to reach people Facebook thinks are interested in Pseudoscience.”

Lambs CEO Art Menard de Calenge told The Markup that the company didn’t select the pseudoscience category. That targeting, he noted, was done by Facebook independently. “This is Facebook thinking that this particular ad set would be interesting for this demographic, not our doing,” Menard de Calenge wrote in an email.

He added that the company’s target market is “people wanting to mitigate the long-term risks associated with wireless radiation.”

“Pseudoscience” was also one of the interests listed in the ad preferences section of my Facebook profile, possibly because I had recently started looking into the litany of user-created coronavirus conspiracy theory groups on the platform. (Facebook allows users to review and edit the “interests” that Facebook has assigned to them.)

Kate Starbird, a professor at the University of Washington studying how conspiracy theories spread online, said one hallmark of the ecosystem is that people who believe in one conspiracy theory are more likely to be convinced of other conspiracy theories.

By offering advertisers the ability to target people who are susceptible to conspiracy theories, she said, Facebook is taking “advantage of this sort of vulnerability that a person has once they’re going down these rabbit holes, both to pull them further down and to monetize that.”

This isn’t the first time Facebook has faced scrutiny for the ways its ad tool can target conspiracy-minded people. A 2019 Guardian investigation found that advertisers could reach people interested in “vaccine controversies.” In 2017, ProPublica reporters (two of whom are now at The Markup) found that advertisers could target people who were interested in terms like “Jew hater,” and “History of ‘why jews ruin the world.’ ”

In both cases, Facebook removed the ad categories in question. In response to the ProPublica findings, Facebook COO Sheryl Sandberg wrote in a 2017 post that the option to target customers based on the categories in question was “totally inappropriate and a fail on our part.”

It appears Facebook has maintained a “pseudoscience” ad category group for several years. Data collected by ProPublica reporters (two of whom are now at The Markup) in late 2016 shows that “Pseudoscience” was an interest that Facebook assigned to its users at that time.

ProPublica’s list also contained categories for “New World Order (conspiracy theory),” “Chemtrail conspiracy theory,” and “Vaccine controversies.” However, none of those three categories are currently accessible through Facebook’s advertising tool, suggesting that at some point the company removed them while leaving Pseudoscience active.

As COVID-19 has spread around the world, so have conspiracy theories about its origins. Alongside speculation that the virus was created in a Chinese laboratory or is the handiwork of billionaire philanthropist Bill Gates, rumors have spread widely online about the disease actually being a side effect of exposure to the 5G cellular networks that have begun to be deployed across the globe.

While there is no scientific evidence to support any connection between the coronavirus and 5G deployment, that has not stopped vandals in the U.K. from setting fire to at least 20 cell towers, acts that law enforcement authorities have blamed on the conspiracy theories. The purported link between coronavirus and 5G has also been publicly endorsed by celebrities such as actors Woody Harrelson and John Cusack and musicians M.I.A. and Wiz Khalifa.

Even though a link between 5G and coronavirus is one people purchasing Lambs’s products may be making, it is not one that the company wants to encourage. “Lambs has never made any connection between 5G and COVID-19,” Menard de Calenge insisted. “In fact, I have actually recently made clear on a podcast that we believe the theories going on about the relationship between 5G and COVID-19 are completely baseless.”

Although Facebook says it is cracking down on conspiracy theory content, as of last week, The Markup was able to find at least 67 user-created groups whose titles directly indicate they are specifically devoted to propagating coronavirus conspiracy theories. Kearns, the Facebook spokesperson, told The Markup that the company is in the process of reviewing the groups we identified.

Over the weekend, one of those Facebook groups changed its name from “CoronaVirus Conspiracy Theories and Discussion Group” to “NOVELTY AND ASIAN PLATES FOR SALE GROUP UK” because, as one administrator of the group wrote in a Facebook post on Sunday, the group had received five warnings from Facebook for spreading misinformation, and the change was an attempt at misdirection. “i hope people understand why i reduced the risk by removing the title (it probably won’t work anyway), but it’s worth a go,” the admin wrote.

A study by the nonprofit advocacy group Avaaz found that 104 pieces of content it had identified as spreading false or misleading information about coronavirus had been viewed by the platform’s users more than 117 million times. Of the 41 percent of that content still active on the site, nearly two-thirds had been debunked by Facebook’s own fact-checking partners.

Facebook has also said that it is cracking down on ads for products related to the pandemic. “We recently implemented a policy to prohibit ads that refer to the coronavirus and create a sense of urgency, like implying a limited supply, or guaranteeing a cure or prevention. We also have policies for surfaces like Marketplace that prohibit similar behavior,” a company spokesperson wrote in a February statement to Business Insider.

However, earlier this month, Consumer Reports was able to schedule seven paid ads that contained fake claims, such as stating that social distancing doesn’t work or that people could stay healthy by drinking small doses of bleach. Facebook approved all of the ads.

This article was originally published on The Markup by Aaron Sankin. You can read it here.
