Tim Leberecht, who authored this piece, is one of the speakers at TNW Conference.

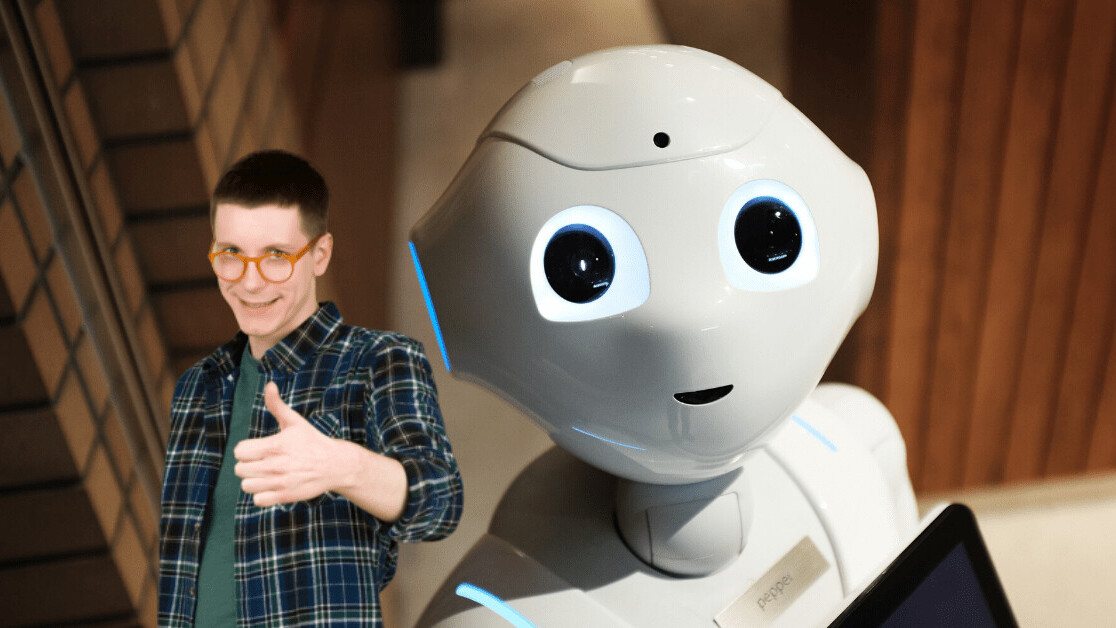

Kate Darling, a robotics researcher at the MIT Media Lab, conducted an experiment: she asked the participants to hang out with robots (which were dressed up as cute baby dinosaurs), give them names, stare at them, and talk to them. After a couple of hours of human-machine “quality time,” she surprised the group with a harsh command: they must torture the robots and, finally, kill them! Emotions ran high. Some of the people in the room reluctantly began to beat their robots but quickly stopped. Some even broke out in tears. Everybody struggled, and in the end no one followed the order.

Darling tells this story to illustrate that we humans are perfectly capable of forming emotional attachments to machines. With several reports and experts indicating that so-called “soft” or social skills are becoming more important in the workplace for humans to differentiate themselves from robots or to augment them, it is interesting to explore which of these skills might actually help us in our relationships with them.

Empathy

Recently, I visited the headquarters of the industrial robot manufacturer KUKA, and one of its marketing managers told me they sometimes ask clients to spend one-on-one time with the robot they are about to purchase, alone in a quiet room, so that human and machine can get used to each other’s presence. “We also suggest they touch the robot, and people are often surprised how warm it is,” he said.

As Darling points out, we humans tend to anthropomorphize not just robots, but objects in general. But with robots we also empathize. They can’t suffer, but we can suffer with and for them. Perhaps we are simply applying the Golden Rule (“Do unto others as you would have them do unto you”), perhaps out of fear that, like in the movies, the machines might remember and strike back at some point.

Interestingly, by assuming such a reciprocal relationship, we indirectly bestow personhood on them. It could also be that we are subconsciously reluctant to exert violence against robots so we don’t lower the barrier to exhibiting callous behavior towards fellow humans. In any case, it is complicated. When robots become the third party in the social fabric of the workplace, every collegial relationship will turn into a ménage à trois.

Ultimately, empathy with robots could also mean not just passive acceptance of their physical integrity, but a proactive honoring of their needs. Manuela Veloso, head of machine learning at Carnegie Mellon’s School of Computer Science, for instance, believes we will soon have to teach human workers to respond to requests from robots or even anticipate them to achieve a true human-machine symbiosis.

Conversational intelligence

Can robots develop empathy for us as well — or at least pretend to? Let’s take a look at chatbots, the disembodied and yet most immediate breed of robots that have become omnipresent in our daily interactions as consumers or employees. Seventy percent of millennials worldwide say they favor online customer support over interacting with a live human agent. And as the chatbot market is forecast to grow by more than 20 percent each year, 45 percent of end users already prefer chatbots as the primary mode of communication for customer service inquiries.

Last year, encouraged by these numbers, Haje Jan Kamps, a San Francisco-based serial entrepreneur, launched a web service named LifeFolder that offered users end-of-life planning advice in conversation with a chatbot named Emily. His argument was compelling: far beyond customer support, he claimed, there were certain conversations that humans would rather have with robots, especially those on sensitive, personal topics such as health or death. In speaking with a chatbot, users would appreciate not being judged by another human.

During the testing phase of their start-up, Kamps and his team made an interesting discovery: many users would pause the conversation with Emily and step back to reflect for several minutes, sometimes even hours, only then to resume it later.

It appeared as if the interaction with the chatbot had put the human user in control, making his or her statements more thoughtful than usual as there was no urgency to keep up the pace of the conversation. As we all know, it is at least awkward if not outright rude to interrupt a conversation with another person by saying “I need to think about this for a couple of hours, and then let’s continue.” Not so with a chatbot.

LifeFolder is now defunct (it turns out humans were not quite ready to mass-adopt such a bold value proposition), but the new paradigm it introduced is here to stay. Whether it is end-of-life planning or mental health issues, the time-shifting capability of chatbot conversations, along of course with the troves of data from which these bots can draw, radically alters our concept of conversation and perhaps not only how we relate to robots but also how we relate to one another.

Unlike humans, chatbots are innate masters of active listening. So what if they inspired us to take more time and be more concise and thoughtful in human-to-human conversations, too? Psychologist and bestselling author Esther Perel, in her keynote at SXSW in Austin last week, proclaimed that “relationships are our stories” and that we should “write well and edit often.” Our relationships with chatbots — and robots in general — might enhance our capacity to do so.

Trust

Robotics researcher Aaron Pereira from the Technical University of Munich explained to me that human trust in robots is built through consistent behavior, through the predictable repetition of action and reaction.

Before that can even occur though, first-glance familiarity is key, which is why robot designers — aside from safety considerations such as softer, rounder shapes to reduce the potential impact on the human body — are keen on providing their products with features that are humanoid or at least archetype-like and therefore instantly recognizable. However, they are careful not to fully match human features as this would cross the fine line to the “uncanny valley” of neither-machine-nor-human and come across as merely creepy.

Even if its physiognomy appears alien, a robot’s behavior can engender familiarity. A creative director with the IBM Watson team told me that her team was exploring making Watson sad or moody at times, so it would appear more human-like and thus more trustworthy.

Similarly, as Kate Darling shows in one of her talks, at one Japanese corporation, robot and human workers come together to perform the same set of rituals every morning at the beginning of the work day, such as waving their hands or dancing. Learning to move in harmony and feel as a single unit, they build collective muscle memory.

Actual physical muscular activity is key to building robots that can perform sensitive manual tasks (as a sweet revenge of human civilization, eating with a fork still remains a daunting challenge for them). It is fascinating to realize that at the heart of any firm or gentle touch, or a more or less powerful grip or lift, is the ability to experience tension.

For humans and robots alike there is no sensitivity without counter-force. The interplay of counteracting muscles is necessary for us humans to be able to execute a movement, and the same applies to robots. Robot manufacturer Festo has applied the principle of agonist (player) and antagonist (opponent) in all seven joints of its lightweight BionicCobot. As a result, the robot can move more naturally and the human can operate it more intuitively. Trust is a byproduct.

Push and pull is how we navigate intimacy in our human relationships as well, and with robots, too, it will be essential for our wellbeing to be able to increase or decrease proximity, and to protect a safe space for our privacy. This can be a physical “safety zone” or a temporal break in our interactions with robots at the workplace.

Humility

Most importantly, as we spend more and more time with our intrinsically motivated, hyper-efficient, and competitive robotic colleagues and keenly observe their tics (or, rather, the lack thereof), we must resist the temptation to apply machine-like behavior to ourselves. The robot isn’t the enemy; robot-like perfection is. Surely there are benefits to making machines appear more human, but there aren’t any to making humans more like machines. We want the robots softer, while not becoming harder ourselves.

We often describe highly productive people as those who “work like a machine,” and anybody who’s ever spent ten minutes with a CEO can attest that at the upper strata of top management not only is the air thin, humanity is, too. High-performing business leaders are like athletes in their own right, and the idealized image they conjure of themselves goes even a step further and often resembles that of an infallible machine.

With so much at stake, particularly for publicly listed companies, and an understandable fear of misspeaking, they stick to talking points and run their own little automated routines in social settings. No wonder they can come across as “robots.”

Once our supervisors are indeed actual machines, we may appreciate that they’re no longer prone to erratic, inconsistent, or abusive behavior, but the flipside is that they’ll also no longer be able to show discretion, empathy, mercy, or humility.

Of all the soft skills needed to thrive in the robotic age, humility is the most important one. For us humans, humility is our shelter from hubris and aggression. It implies that we always know our place in the vast universe around us and, as a consequence, can put the robots in theirs.

This article was originally published by Tim Leberecht, an author, entrepreneur, and the co-founder and co-CEO of The Business Romantic Society, a firm that helps organizations and individuals create transformative visions, stories, and experiences. Leberecht is also the co-founder and curator of the House of Beautiful Business, a global think tank and community with an annual gathering in Lisbon that brings together leaders and changemakers with the mission to humanize business in an age of machines.
