Artificial intelligence is taking the world by storm. It is already showing up in a wide range of industries, including organizations that were once reluctant to adopt it. Branches of artificial intelligence such as machine learning are now widely used by practitioners to form better strategies, predict industry trends, and bring innovative products to market.

But no matter what you plan to do with machine learning models, there is one thing you cannot ignore: data is the fundamental building block of an ML model, and without it the model can do nothing.

Machine learning works by learning patterns, or features, from examples and then applying them to new data. However, the process is more difficult than it sounds. ML models need large datasets to learn any particular thing. For example, if you want a model to learn what a cat looks like from pictures, you have to feed it thousands of cat images. This is how these models learn. Fortunately, as we move into the future, data is one thing the world will have in abundance.

Since machine learning gained momentum in the 1980s, many algorithms have been developed, and they are still being modified to obtain better results. However, there is one area where there has hardly been any progress: the way models are fed data. Pick almost any successful machine learning model today, and you will likely find that it was trained on at least a few thousand samples.

The prevailing assumption is that the more data you feed a model, the more accurate its results will be. This is also one reason why practitioners often skip rigorous quality assurance of machine learning models. Instead, they keep training the model on more data and check whether it produces the right results. Until it gives them the output they want, they see no reason to test the model further.

The irony of learning

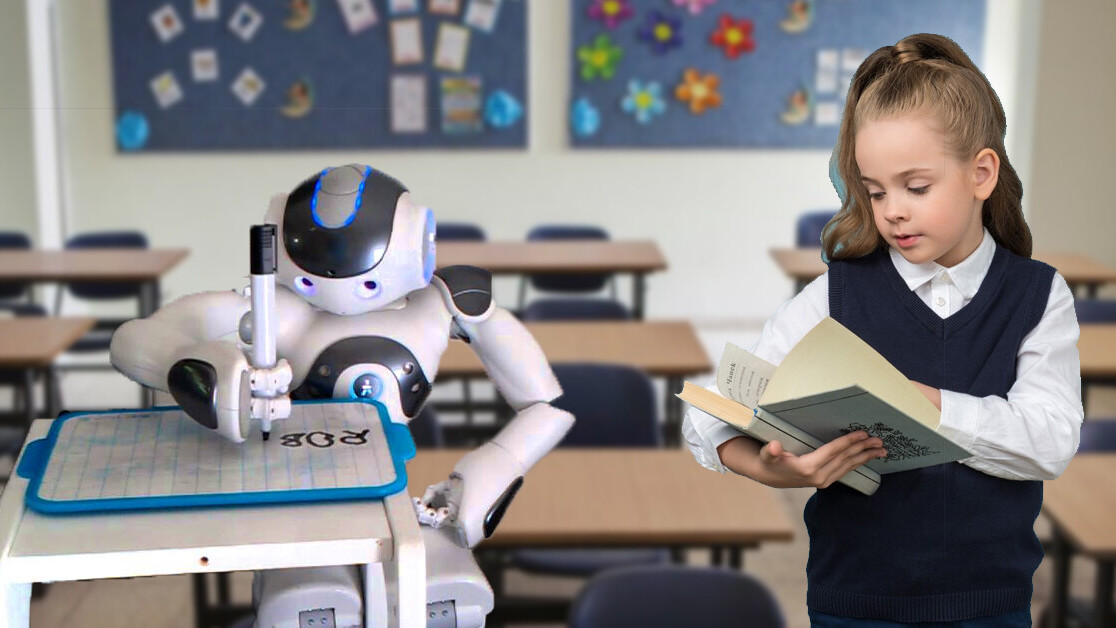

The irony of artificial intelligence and machine learning is that both are inspired by the way humans function. Neural network models in machine learning are loosely designed to imitate the way neurons in the brain work. But the real question we should ask ourselves is: do we learn the way a machine does?

As children, when we saw a new toy, we were eager to figure it out and start playing with it. Take Lego as an example. Children need only one or two demonstrations of how to start building with Lego bricks, after which they know they have to keep fitting the right pieces together to build a tower. Now suppose you show a child how to build a tower that stands vertically and then ask them to build sideways. They might get stuck at first and pause to think about the problem at hand. But with what they have already learned, they will find it easy to build horizontally in any direction.

Babies vs machines

When teaching kids what a chair is, parents don’t show them thousands of chairs or pictures of chairs. They point one out once or twice, and the child figures out the rest. Similarly, when teaching a child to build with Lego, parents demonstrate the technique only a few times. In spite of this, the child can recognize similar objects and quickly apply what they have learned to other domains. How often have you seen a child confuse a chair with a cow? Exactly!

Machines, on the other hand, train on thousands or even millions of samples. Google’s automatic reply suggestions in email are trained on at least a million examples and continue to be trained for better results. You can’t ask a machine to apply its existing training to an entirely different task. Models also get confused when two things look similar. For example, an ML model might struggle to tell apart an image of a dog and an image of a person with similarly colored hair and a similar hairstyle.

Today, with large datasets at hand, researchers around the world claim that ML models can be trained to learn almost anything, including human common sense. But in the process, they overlook years of credible research in cognitive science that points to common sense as an innate human ability. It is part of the way we are wired to grow up and learn. These innate capabilities help us think clearly, vividly, and abstractly, and that is what we call common sense.

Even though artificial intelligence and machine learning aim to bring these qualities to complex machines, that goal is far from reality. Machines learn by finding statistical patterns in data. Take Google DeepMind’s AlphaZero, which can learn to play chess from scratch. If you’re wondering how it does this, the logic is simple. Each game AlphaZero plays against itself ends in a result (a win, a loss, or a draw) that acts as a score. Over millions of games, it learns to make moves that maximize that score. In other words, the machine has no human-like understanding of the game. It simply finds patterns that lead to a desirable outcome.
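To make the idea of “learning to maximize a score” concrete, here is a minimal Python sketch of an epsilon-greedy multi-armed bandit. This is a deliberately simple, swapped-in technique, not AlphaZero’s method (AlphaZero combines self-play with Monte Carlo tree search and a deep neural network). The three “moves” and their win probabilities in `WIN_PROBS` are hypothetical. The agent never learns why one move is better; it only tracks the average reward each move has produced and increasingly favors the best one.

```python
import random

# Hypothetical win probabilities for three possible "moves" (illustrative only).
WIN_PROBS = [0.2, 0.5, 0.8]


def epsilon_greedy(reward_fns, steps=5000, epsilon=0.1, seed=0):
    """Learn which action earns the most reward, using only observed rewards."""
    rng = random.Random(seed)
    counts = [0] * len(reward_fns)    # how often each action was tried
    values = [0.0] * len(reward_fns)  # running mean reward per action
    for _ in range(steps):
        if rng.random() < epsilon:
            # Explore: try a random action.
            action = rng.randrange(len(reward_fns))
        else:
            # Exploit: pick the action with the best average so far.
            action = max(range(len(reward_fns)), key=lambda i: values[i])
        reward = reward_fns[action](rng)
        counts[action] += 1
        # Incremental update of the mean reward for this action.
        values[action] += (reward - values[action]) / counts[action]
    return values, counts


# Each action pays 1.0 on a "win" and 0.0 otherwise.
arms = [lambda rng, p=p: 1.0 if rng.random() < p else 0.0 for p in WIN_PROBS]
values, counts = epsilon_greedy(arms)
print("estimated win rates:", [round(v, 2) for v in values])
print("times each move was chosen:", counts)
```

After a few thousand steps the agent chooses the third move most often, purely because it has paid off most often, which mirrors the article’s point that the machine is chasing a score rather than understanding the game.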

Ongoing research at Stanford University suggests that babies see the world as a series of chaotic, poorly filmed videos featuring a few recurring objects, such as a ball, a dog, or a parent. These objects move haphazardly and appear at odd angles in a child’s view. This is the exact opposite of how machines learn.

ML models, by contrast, are typically given a clean, curated dataset to train for a particular task, and they need to be explicitly told their objective. A child’s data, on the other hand, is largely unsupervised. Parents do give their children cues such as ‘Danger’ or ‘Good job,’ mostly to keep them safe, but most of a child’s learning happens without explicit labels.

Unsupervised machine learning algorithms such as clustering are even more error-prone. Many of them, k-means for example, are highly sensitive to their initialization and need large amounts of data and multiple iterations to work well. And even a model trained on large amounts of data can be easily fooled by adversarial examples. For instance, a model may classify an image of seemingly random pixels as a cat if its underlying statistical pattern happens to point that way. Similarly, if you teach an ML model the idea of even numbers, it can barely extend that knowledge to odd numbers.
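The sensitivity to initialization is easy to see with k-means. The sketch below is a minimal, hypothetical one-dimensional implementation of Lloyd’s algorithm (the function name `kmeans_1d` and the toy data in `POINTS` are illustrative assumptions, not from any library). Run on the same six points with two different sets of starting centroids, it converges to two different clusterings, one far worse than the other as measured by inertia (the sum of squared distances from each point to its nearest centroid).

```python
# Toy 1-D data: three obvious pairs of points (illustrative only).
POINTS = [0.0, 1.0, 10.0, 11.0, 20.0, 21.0]


def kmeans_1d(points, init_centroids, max_iter=100):
    """Lloyd's algorithm in one dimension; returns (centroids, inertia)."""
    centroids = list(init_centroids)
    for _ in range(max_iter):
        # Assignment step: put each point in the cluster of its nearest centroid.
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)), key=lambda i: abs(p - centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster
        # (an empty cluster keeps its old centroid).
        new_centroids = [
            sum(c) / len(c) if c else centroids[i] for i, c in enumerate(clusters)
        ]
        if new_centroids == centroids:
            break  # converged: assignments no longer change the centroids
        centroids = new_centroids
    inertia = sum(min((p - c) ** 2 for c in centroids) for p in points)
    return centroids, inertia


good = kmeans_1d(POINTS, [0.0, 10.0, 20.0])
bad = kmeans_1d(POINTS, [0.0, 1.0, 10.0])
print(good)  # ([0.5, 10.5, 20.5], 1.5)
print(bad)   # ([0.0, 1.0, 15.5], 101.0)
```

With the poor starting centroids, the algorithm gets stuck in a local optimum that lumps four points into one cluster. Libraries such as scikit-learn reduce this risk with k-means++ seeding and multiple restarts (the `init` and `n_init` parameters of `KMeans`), but the underlying sensitivity remains.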

Babies learn the way they do because of an innate ability, one we have not yet been able to replicate, even in complex machines. Think of today’s artificial intelligence as a modern-day helicopter parent that keeps a close eye on the learner and explicitly tells it what is right and wrong. As we move forward, real technological progress will mean developing generalized learning systems for machines. Ultimately, machines must take cues from children and learn to be curious.

This article was originally published on Towards Data Science by James Warner, a business intelligence analyst with knowledge of Hadoop/big data analysis at NexSoftSys.com.
