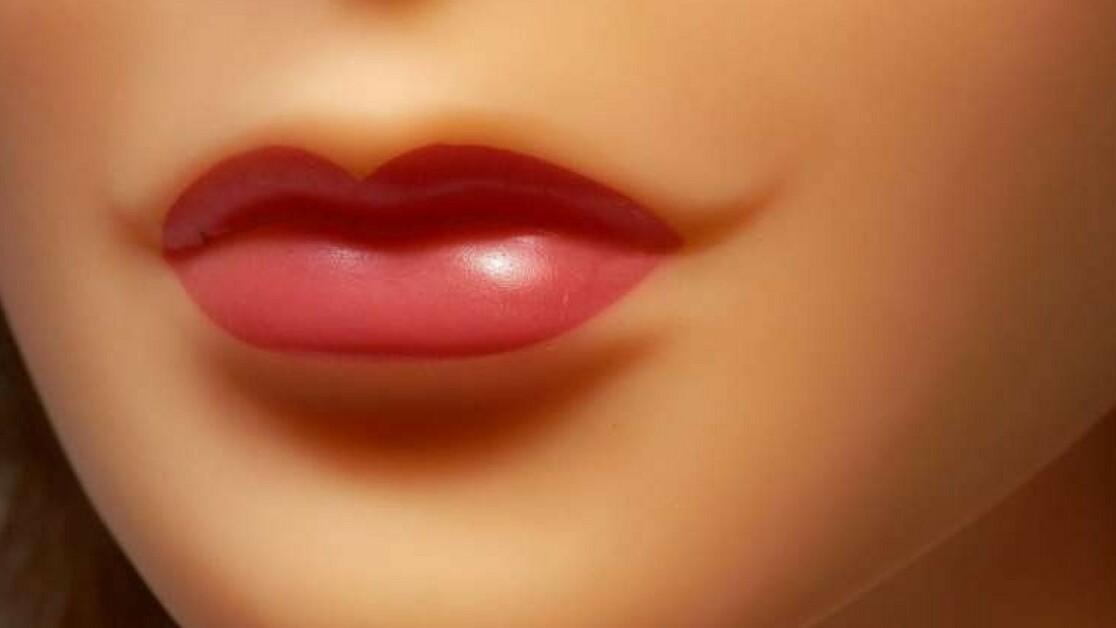

Late in 2017 at a tech fair in Austria, a sex robot was “molested” repeatedly and left in a “filthy” state. The robot, named Samantha, received a barrage of male attention, which resulted in her sustaining two broken fingers. This incident confirms worries that fully functioning sex robots raise both tantalizing possibilities for human desire (by mirroring human/sex-worker relationships) and serious ethical questions.

So what should be done? The campaign to “ban” sex robots, as the computer scientist Kate Devlin has argued, is only likely to lead to a lack of discussion. Instead, she hypothesizes that many forms of sexual and social inclusivity could be explored as a result of human-robot relationships.

To be sure, there are certain elements of relationships between humans and sex workers that we may not wish to repeat. But to me, it is the ethical aspects of the way we think about human-robot desire that are particularly key.

Why? Because we do not even agree yet on what sex is. Sex can mean lots of different things for different bodies – and the types of joys and sufferings associated with it are radically different for each individual body. We are only just beginning to understand and know these stories. But with Europe’s first sex robot brothel open in Barcelona and the building of “Harmony”, a talking sex robot in California, it is clear that we are already contemplating imposing our barely understood sexual ethic upon machines.

Some in the field argue that the development of sex robots has positive implications, such as “therapeutic” uses. Such arguments focus mainly on male use for problems such as premature ejaculation and erectile dysfunction, although there are also mentions of “healing potential” for sexual trauma.

But there are also warnings that the rise of sex robots is a symptom of the “pornification” of sexual culture and the increasing “dehumanization of women”. Meanwhile, Samantha has recovered and we are assured by the doll’s developer, Sergi Santos, that “she can endure a lot and will pull through”, and that her career looks “promising”.

Popular sex doll Samantha finally breaks down after a lot of customers heavily massaged… https://t.co/BdBjHpE7rW pic.twitter.com/E4JTWUP81E

— Cingey (@CingeyNews) September 27, 2017

Samantha’s desires

We are asked by Santos (with a dose of inhuman “humor”) to applaud Samantha for overcoming her ordeal, without fully recognizing the violence she suffered. But I think that most of us will experience some discomfort on hearing Samantha’s story.

And it’s important that, just because she’s a machine, we do not let ourselves “off the hook” by making her yet another victim and heroine who survived an encounter, only for it to be repeated. Yes, she is a machine, but does this mean it is justifiable to act destructively towards her? Surely the fact that she has a human form makes her a surface onto which human sexuality is projected, and a symbol of a futuristic human sexuality. If this is the case, then Samantha’s case is especially sad.

It is Devlin who has asked the crucial question: whether sex robots will have rights. “Should we build in the idea of consent?” she asks. In legal terms, this would mean having to recognize the robot as human – such is the limitation of a law made by and for humans.

I have researched how institutions, theories, legal regimes (and in some cases lovers) tend to make assumptions about my (human) sexuality. These assumptions often lead to my being told what I need, what I should feel and what I should have. The assumption that we know what the other body wants is often the root of suffering. The inevitable discomfort of reading about Samantha demonstrates again the real – yet to human beings unknowable – violence of these assumptions.

Samantha’s ethics

Suffering is a way of knowing that you, as a body, have come out on the “wrong” side of an ethical dilemma. This idea of an “embodied” ethic understood through suffering has been developed from the work of the philosopher Spinoza and is of particular use to legal thinkers. It is useful because it allows us to judge rightness by the real and personal experience of the body itself, rather than by what we “think” is right based on what we assume to be true about a person’s identity.

This helps with Samantha’s case, since it tells us that, judged in accordance with human desire, she clearly would not have wanted what she got. The contact Samantha received was distinctly human in the sense that it mirrors some of the most violent sexual offense cases.

While human concepts such as “law” and “ethics” are flawed, we know we don’t want to make others suffer. We are making these robot lovers in our image, and we ought not to pick and choose whether to be kind to our sexual partners, even when we choose to have relationships outside of the “norm”, or with beings that have a supposedly limited consciousness, or even no (humanly detectable) consciousness.

Samantha’s rights

Machines are indeed what we make them. This means we have an opportunity to avoid assumptions and prejudices brought about by the way we project human feelings and desires. But does this ethically entail that robots should be able to consent to or refuse sex, as human beings would?

The innovative philosophers and scientists Frank and Nyholm have found many legal reasons for answering both yes and no (a robot’s lack of human consciousness and legal personhood, and the “harm” principle, for example). Again, we find ourselves seeking to apply a very human law. But suffering experienced in relationships, or by identities, outside what is accepted as the “norm” is often delegitimized by law.

So a “legal” framework that has its origins in heteronormative desire does not necessarily provide the foundation for consent and sexual rights for robots. Rather, as the renowned post-human thinker Rosi Braidotti argues, we need an ethic, as opposed to a law, which helps us find a practical and sensitive way of deciding, taking into account what emerges from cross-species relations. The kindness and empathy we feel toward Samantha may be a good place to begin.

Victoria Brooks, Lecturer in Law, University of Westminster

This article was originally published on The Conversation. Read the original article.
