While on maternity leave, I expected to take a break from my job as a UX designer, but found that I couldn’t get away. Watching my young son learn basic human skills became a study in human interaction, one that led me to new insights into designing digital experiences for adults. Because babies are all about interfaces.

A fascinating thing about newborns: They’ve spent nine months gestating in an environment where everything was provided for them: full nutrition, a steady temperature, their waste products removed. And yet from the moment they are born, they are transformed into what could be called learning machines.

Equipped with only the basic instincts they need for the task, babies spend much of their time and effort learning to navigate what is to them a wholly new platform: their mother’s body. They quickly discover that it is a very good platform for comfort and warmth, and a source of relief from hunger. They immediately learn to latch onto their mother’s breast and to suck to get milk. Within days, they recognize the pattern of a human face.

And from then on, a baby confronts new interfaces every day. They must discover everything without reading a manual.

At first, when I watched my infant, it sparked rudimentary thoughts and realizations — “Huh, I guess all infants do this,” or (with a slight panic), “Wow, he is completely vulnerable and depends on me totally for survival.” Only sometime later did I become more fully attuned to the process of learning unfolding before me. My thoughts turned into “Wow, humans are such fast learners,” and, “He understands gravity? Nobody taught him that!”

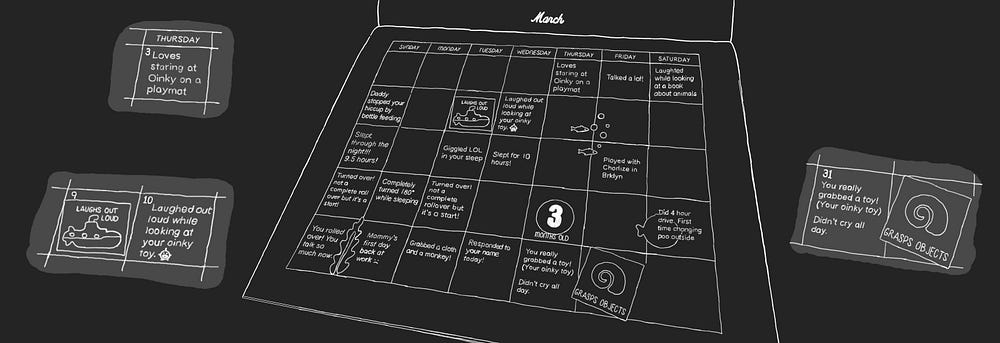

My documentation of his milestones became a form of design research.

Although my observations centered only on my own son, I slowly became aware of how much what I was seeing could teach me about the human instinct for learning.

The ability of a user to learn and adapt to a new interface, of course, plays a huge role in interaction design. And so does the ability of the designer to predict the user’s response to the new system. Thus, it is not only their state of being “total learners” that makes babies so interesting to look at from an interface design perspective.

Speaking recently with the Independent, Dr. Caspar Addyman, a researcher at the Cornell University infant-research center Babylabs, said, “If you are trying to understand the psychology of humans, it makes sense to start with babies. Adults are far too complex. They either tell you what you want to hear or try to second-guess you.” But if a baby does something, he concludes, “it’s bound to be a genuine response.”

Much of our appreciation for good interface design relates to how natural it is to learn. Doorknobs and light switches are good examples of intuitive design, as are touch screens, whose interfaces are so intuitive that babies as young as six months old can learn to navigate them and retain the gestures in muscle memory.

A considered, intuitive interface design approach is critical for the successful development of any innovations that require more complex learning and adaptation.

The development of early computer interfaces from the ’60s through the ’80s is a good example. At first, only proficient programmers could use them. But the invention of GUIs (graphical user interfaces) made it possible for a wide variety of people to use computers, and thus for computers to become consumer products.

The breakthrough interface work of Alan Kay at Xerox PARC played a pivotal role in the emergence of computers as a common tool for expression, learning, productivity, and communication — a role no less important than such factors as hardware and chip speed.

The design of GUIs was based on Constructivism, a learning theory that emphasizes learning through doing, or ‘constructing understanding’ by gaining knowledge based on iterative interactions with one’s environments. Alan Kay drew on the theories of Jean Piaget, Seymour Papert, and Jerome Bruner, who had studied the intuitive capacities for learning present in the child’s mind, and the role that images and symbols play in the building of complex concepts.

Kay came to understand, as he put it, that “doing with images makes symbols.” This was the premise behind the GUI, which enabled computer users to formulate ideas in real time by manipulating icons on the computer screen. Kay’s approach made computers accessible to non-specialists.

More importantly, it transformed the computer into a vehicle for popular creative expression.

Such a case study exemplifies a general principle that, I would argue, is useful for designers who see value in a constructivist approach: since babies — who start with no knowledge — learn through action, paying close attention to the ways in which they discover and adapt to the world can provide UX designers with valuable information and insights into interface design approaches — even when designing for adults.

This is especially true when designing in an era such as ours, which is marked by a robust breadth of new, rapidly evolving possibilities for user interaction.

I will outline two ways in which my newfound appreciation for this principle shaped my thoughts on interface design matters.

VR: Create an environment of yes!

When I asked my pediatrician why my one-year-old trips so frequently, his response was that while in my child’s mind he is great at walking and capable of doing so quite fast, his body does not yet keep up. Thrown into a new environment with tools he is only beginning to master, my child stumbles often. Children will of course not give up on learning to walk because of a series of falls. Adult learners usually will not persist in the same way, and for them such repeated stumbling can prove highly problematic.

Virtual reality, with its ability to let us vividly imagine realities unconstrained by everyday physical limitations, is a good example of such a problem.

When a user first dons a VR headset, their perception of physical laws is often upended. The immersive nature of the medium means one’s sense of orientation and balance may be easily thrown off. The strength of VR to transport its users into what feels like a different space governed by different rules is also the source of one of its central weaknesses: namely, the nausea this transportation so often induces.

While VR content creators might feel as if they are curating experiences without boundaries, it is important for us to carefully consider the effects and outcomes generated by rapid immersion into such a new environment.

It is widely accepted that infants and toddlers absorb information faster when they are in a familiar, nurturing environment. When moving babies to a new environment, it is important to keep some familiar things around them so they feel emotionally comfortable.

Research suggests that infants repeat certain behaviors until they are confident they have mastered them. It may follow, then, that when setting up a virtual world that abides by different laws of motion, one would be wise to introduce the novel spatial elements in a slow, controlled manner, allowing for the supporting presence of features familiar from ‘the outside.’

Another important point in this respect is that babies are active learners: they do not sit passively and take in information. They probe and test their way into knowledge. And while their motivation and thirst for growth may be distinctly different from adults’, the essential learning principle holds: in order to be fully engaged in the learning of a new world, the viewer must be the protagonist, not just a spectator.

Likewise, design that allows for repetitive, user-centered behavior will shorten users’ learning curve in VR. Dynamic, responsive interaction that takes into account the user’s outside reality and sense of agency is key to helping users gain familiarity with new experiences.

Voice recognition AI: Single mind vs. open, global-minded conversation

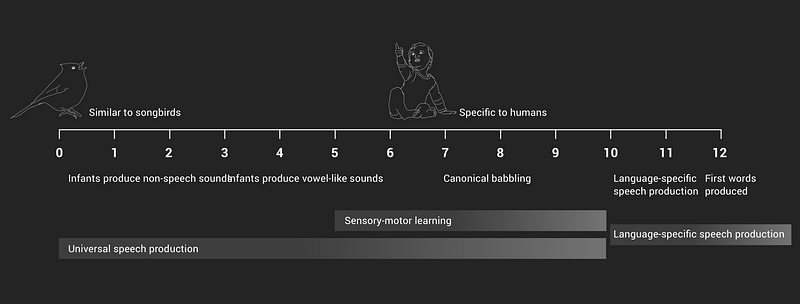

Acquiring motor skills is one sphere of learning in which young children are immersed.

Language is another. My husband and I come from different linguistic backgrounds and found ourselves deeply invested in understanding how best to raise our baby in a trilingual household. Could we foster the acquisition of three languages (English, Korean, and Spanish) without causing confusion or developmental delay?

Upon delving into the literature surrounding this question, I was amazed to discover infants are able to differentiate one language from another — even before they understand any of the given languages. Besides encouraging our multilingual parental undertaking, learning about this superpower afforded me a new perspective on strategies for future machine learning.

When a team I was part of at R/GA was working on an AI project, we found that for many users, one critical point of frustration was when speech-recognition algorithms failed to understand a user’s spoken words due to their accent.

“It never really works for me,” one such user, who spoke perfect English with a slight Japanese accent, told us during an interview. “I tried to train Siri how to pronounce my name, but it doesn’t get it. It only works with common English names.”

The reasons for this problem, which is of course well known to a large share of consumer-electronics users, are not innate to computing, but rather the product of cultural and economic decisions informing programming approaches.

One central issue (well detailed in this informative article) is the expense of collecting data, a factor that contributes to certain key demographics taking priority, and an AI voice devoid of any identity or accent beyond the “mainstream.”

Could examining infants’ linguistic acquisition help us tackle this problem?

In her book Early Language Acquisition, Patricia Kuhl describes the innate linguistic flexibility exhibited by small children. “Infants can discriminate among virtually all the phonetic units used in languages, whereas adults cannot. Infants can discriminate subtle acoustic differences from birth, and this ability is essential for the acquisition of language. Infants are prepared to discern differences between phonetic contrasts in any natural languages.”

This early neural plasticity is pivotal to an infant’s future learning, laying out the basic elements of language command.

When people talk to infants, they often adopt a certain ‘baby-talking’ tone of voice. This tone is remarkably similar all over the world. Evidence suggests that this style of speech facilitates infants’ ability to learn basic codes of speech. We know how to help our babies learn.

With this type of “deep teaching” in mind, I wonder: what would our machine-learning programs look like if we approached the task of programming them in a way that more closely resembled how we help our babies learn? What if we started by teaching computers the most basic communication skills, ones that non-human mammals use, and then proceeded to teach them skills that are unique to humans, such as statistical learning?

AIs might have very different capabilities and potential for growth.

During speech recognition-based product development, designers are rarely in the position to affect the programming of the voice being generated, but often do impact the user experience as it relates to the conversation.

Designing accent-flexible AI algorithms might start by modeling them to learn in ways more similar to the way human children do. The silence after the first greeting phrase uttered by a Siri-like device should be receptive to different styles of speech used by different people. Designing for phonetic flexibility — rather than for a predetermined “common” speech pattern — would allow the relationship between a user and a machine to tighten in an organic way.

Designers would be wise to learn from the flexibility built into babies and ingrain in machines’ learning programs some affordance for the adoption of different accents and intonations. We need to start by giving computers the ability that infants have, not the ability that old scholars and engineers have.

Theory of mind takes into account that when speaking with another person, one innately expects one’s partner to take some notice of one’s background, and make allowances for it.

Most individuals will allow, in some way, the words and cadence they employ to shift in response to their partner in discourse. Machines are not there yet, but that is the place we must aim for in order to create situations where talking with a machine will feel more natural.

The flexibility needed for good conversation is not only about phonetics, of course.

Most people would not have much tolerance for a conversation partner who regularly ended a conversation by saying “I don’t know” and then immediately walking away. Yet our ‘smart’ machines cut off conversations all the time.

Likewise, anyone conversing with an AI will quickly realize the importance of designing for an engaging conversation loop, rather than programming the machine to simply say “Sorry.”

Incorporating emotional intelligence (EQ) in AI is the next challenge in our “Intelligent Age.”

My son was eleven months old when we brought an Amazon Echo (AKA Alexa) home. I worried that due to its disembodied voice, the machine might confuse my child.

I was relieved that this was not the case. I believe Alexa’s gentle light and sound helped make my son smile and accept the responses uttered. Such cues can help create positive moments of human/machine interaction. But even with this in mind, much more work needs to be done in the field of AI, especially in the areas of human cues and natural language.

I recently wrote down in my diary that my son gave a big kiss to “mong mong.” “Mong mong” is the Korean onomatopoeia for a dog’s bark.

After fifteen months of living on this earth, equipped with our human pattern detection and computational abilities, he has identified a dog, is able to associate a Korean sound with it, and has built a relationship with an object, a doll, that symbolizes a dog.

I propose that UX developers would benefit greatly from taking time away from the digital, tapping into our innate power of observing the people around us and how they learn and construct the world.

I learn from my son’s learning. I am constantly surprised by how much I learn about UX design by following the growth of my son. And at the same time, my belief in the unique power of designers is strengthened as I realize how my training as an interface designer helps me closely examine and make sense of the ways in which my son learns to navigate and make sense of the world around him.
