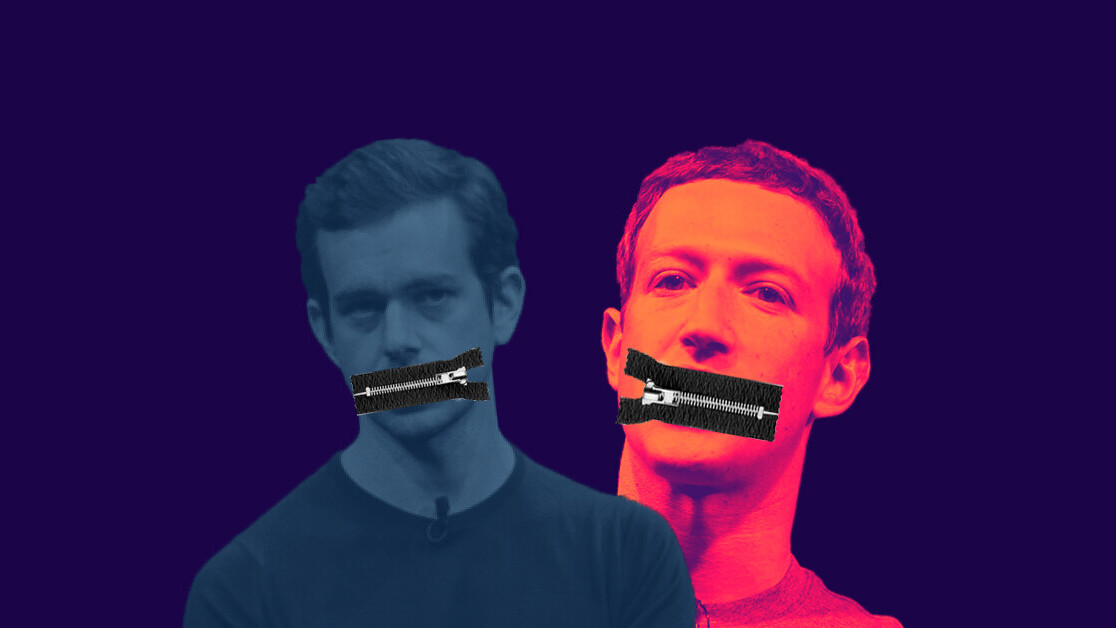

It’s a dark day for netizens around the UK: The government is considering implementing a new law that will practically make social media services like Facebook and Twitter responsible for moderating “harmful content” on their platforms, Reuters reports.

The country will impose a duty of care to ensure companies have systems in place to react to concerns over problematic content, the government explained. The measure is also expected to help improve the safety of online users, according to statements provided by government officials.

“As the internet continues to grow and transform our lives it is essential that we get the balance right between a thriving, open and vibrant virtual world, and one in which users are protected from harm,” Digital Minister Nicky Morgan and Interior Minister Priti Patel said.

At one point, the British government contemplated enlisting broadcast and telecoms regulator Ofcom to oversee the new regime.

For what it’s worth, the government seems to have its heart in the right place. “The new system aims to force tech giants to act on child exploitation, self-harming and terrorist content on the internet, but critics have pointed out that this could have knock-on effects,” the FT writes.

The duty of care will effectively apply to any online platform which runs on user-generated content, like comments, status updates, and videos. Any failed attempt at curbing what the government considers “harmful content” will result in huge fines for the companies, with bosses potentially held personally accountable.

As a staunch supporter of free speech, I find this development worrying. It almost feels as if the UK government is offloading responsibility to social media platforms. “This isn’t our problem, it’s yours,” is the sentiment I’m getting here.

I hope I’m wrong, but I’m afraid the newly introduced duty of care will inevitably ramp up censorship across online platforms. Faced with the threat of hefty fines, platforms, whose primary goal is to increase margins for shareholders, will have no option but to comply with any takedown requests citing “harmful content.”

What else can they do? Spend an unholy amount of time and money in legal fees defending a user’s right to express their opinion, and still risk having the government label that opinion “harmful content” (at which point it won’t be the user liable for their words, but the company)? No company is going to take that risk — and I can’t say I blame them for that.

The UK has had a complicated relationship with free speech in recent times. Back in 2016, Mark Meechan, more commonly known as Count Dankula, got in trouble with the law after releasing a satirical video in which he trained a dog to throw a Nazi salute. In the video, he also used language that many deemed offensive.

In 2018, Meechan was arrested and convicted of being “grossly offensive” under the Communications Act 2003. He was also ordered to pay a fine of £800 (about $1,040). He refused to pay the fine, and instead donated the amount to the Glasgow Children’s Hospital Charity. Still, the money was eventually seized from his account by an arrestment order.

Meechan is hardly the only Briton who’s had to deal with the authorities for stuff he said online.

In 2019, 74-year-old Margaret Nelson was woken up by an unexpected call from Suffolk Police, asking her to explain a series of tweets she had recently posted. Apparently, some of the messages — one of which read “Gender is B.S.” — had upset transgender people, the officer said over the phone. Then they asked Nelson to tone it down, and delete the tweets.

Nelson refused. “I’m not going to keep quiet just because some people might get a bit upset,” Nelson told The Spectator. “I’m 74. I don’t give a fuck any more.”

Eventually, Suffolk Police were forced to issue an apology to Nelson, admitting it might’ve been a lapse of judgement to follow up on the complaints in the first place. “We accept we made a misjudgement in following up a complaint regarding the blog,” Suffolk Police told The Spectator. “As a result of this we will be reviewing our procedures for dealing with such matters.”

Maya Forstater wasn’t as fortunate as Nelson. The 45-year-old tax expert lost her role at a think tank after tweeting out her views on gender and biological sex, which many deemed “offensive and exclusionary.” “My belief […] is that sex is a biological fact, and is immutable,” Forstater had said.

A judge didn’t see things this way, though. “I conclude from […] the totality of the evidence, that [Forstater] is absolutist in her view of sex and it is a core component of her belief that she will refer to a person by the sex she considered appropriate even if it violates their dignity and/or creates an intimidating, hostile, degrading, humiliating or offensive environment,” a 26-page judgement read. “The approach is not worthy of respect in a democratic society.”

For the record, Forstater is not alone in her views. Many feminists share her concerns, including JK Rowling, author of Harry Potter and a self-proclaimed progressive, who came to Forstater’s defense. Rowling, in turn, was promptly “canceled” for expressing her support for the tax expert.

Let me make one thing clear: There’s no denying the examples I’ve outlined are deeply controversial.

I firmly believe society should vigorously question such opinions. I also know not every opinion will stand up to careful scrutiny. Nor should it. I draw the line at calls to action (especially ones calling for violence), but I do believe the government has no place in telling people what opinions they should hold, even if those opinions are wrong.

The UK government, though, seems to think it’s found a workaround, and a particularly vicious one. By putting the onus on the companies that manage these platforms, it effectively pulls itself out of the equation. It washes its hands of all responsibility for defining what constitutes “harmful content,” which is a particularly divisive topic these days.

Instead, it’s asking the companies to decide what language is and isn’t appropriate.

This move won’t make these platforms any safer or more welcoming. It’ll simply lead to more censorship, both of people you and I agree with and of people we disagree with. It’ll also divide us even further.

This is no solution. It’s just another problem.
