Erratum: We mistakenly built the original story around a Reuters report (now updated) that claimed Woven Planet did not use LiDAR and radars in its approach. We’ve since learned that the company does indeed use LiDAR and radars, and have updated both the article content and the headline to reflect that.

We sincerely regret the error.

Last May, Tesla gave the middle finger to the entire auto industry, deeming the use of multiple sensors for autonomous driving functionalities… unnecessary. It ditched radars and initiated a camera-only approach, dubbed “Tesla Vision.”

The decision cost the company its safety recognition from the National Highway Traffic Safety Administration (NHTSA), and led to multiple owner complaints over possibly related “phantom braking” issues.

Now, Woven Planet, Toyota’s self-driving subsidiary, will be the second company to adopt a vision-based strategy in pursuit of fully autonomous driving — but its approach differs significantly from Tesla’s.

To further train its autonomous system, Woven Planet highlighted the need for large amounts of data, which it can’t collect from its current small fleet of autonomous vehicles. That’s because the high cost of radar and LiDAR sensors doesn’t allow for deployment at scale.

Low-cost cameras, on the other hand, could enable access to a huge corpus of data. But on the downside, they generally come with certain limitations. They have lower accuracy in terms of object distance and speed (especially during nighttime), they offer limited range, and they are less effective in bad weather conditions.

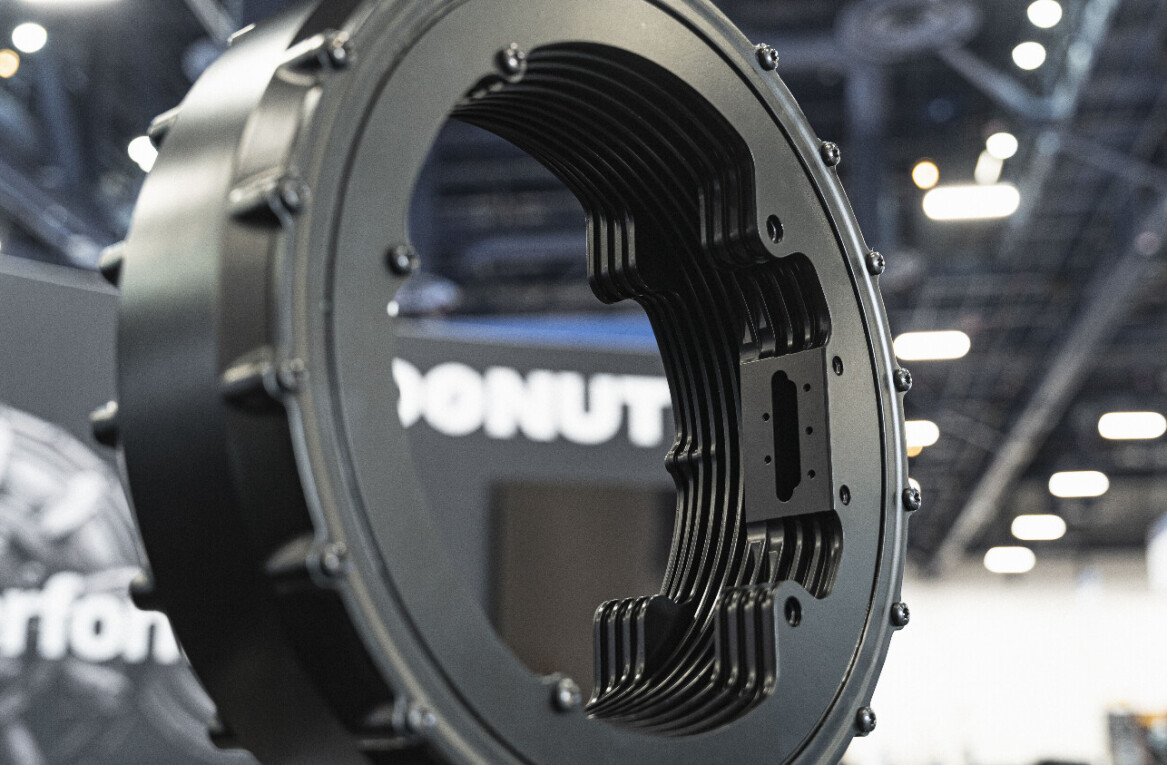

To solve this, Woven Planet developed a camera-only collection device — reportedly 90% cheaper than the sensors it was using before — to provide data alongside radars and LiDARs.

After improving the system’s perception algorithms and conducting experiments, the company found that training on a dataset made up mostly of low-cost camera data raised its system’s performance to a level comparable to training exclusively on high-cost sensor data.

Based on these findings, the company’s confident that it can effectively train its autonomous system, using camera data as the primary source.

Starting this year, Woven Planet will begin deploying the technology at scale, mounting the cameras on fleets of human-driven vehicles, so the system can observe and train itself on more human-driving data.

In the meantime, Toyota will still use multiple sensors including radars and LiDAR for its robotaxis and other autonomous vehicles on the road; this is currently deemed the safest approach, Michael Benisch, VP of Engineering at Woven Planet, said to Reuters.

Whether camera technology will eventually advance to the point that it overtakes the other sensors is unknown. What’s important for now is that Woven Planet isn’t following in Tesla’s controversial footsteps. It’s not removing radar and LiDAR sensors completely, and it’s not deploying the tech in commercial vehicles to test it on consumers.
