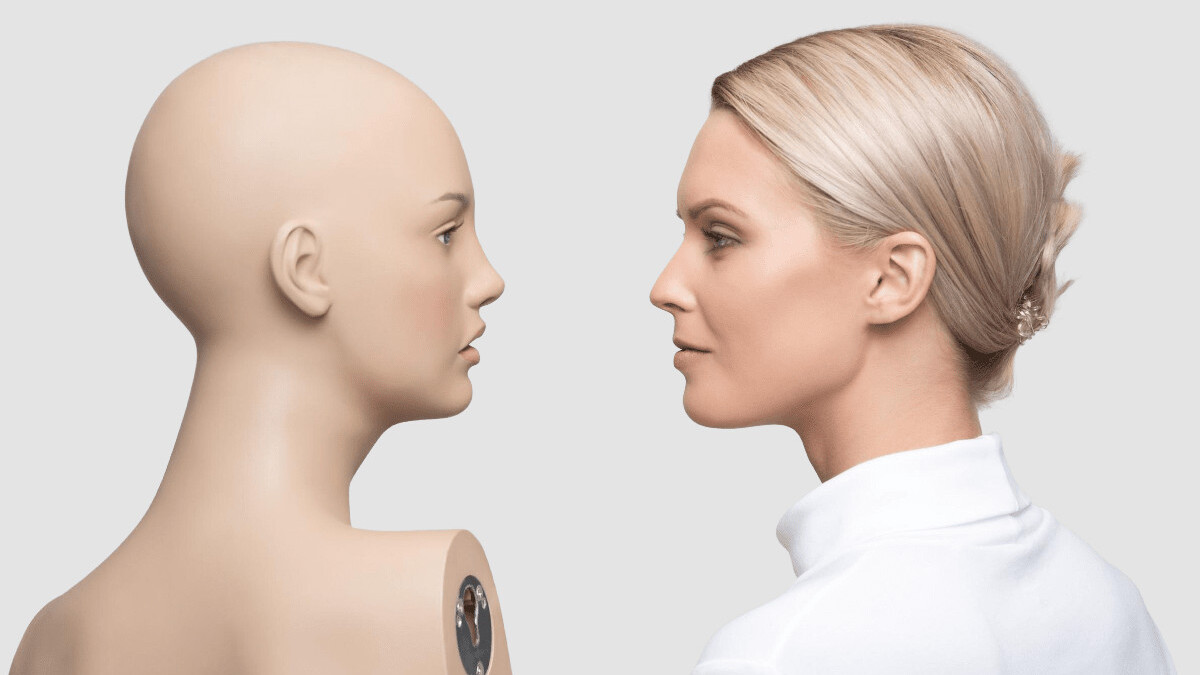

Machine learning models that detect our faces and movements are now part of daily life through smartphone features like face unlocking and Animoji. However, those AI models can’t predict how we feel by looking at our faces. That’s where EmoNet comes in.

Researchers from the University of Colorado and Duke University have developed a neural net that can accurately classify images into 11 emotional categories. To train the model, the researchers used 2,187 videos that had been clearly classified into 27 distinct emotion categories, including anxiety, surprise, and sadness.

The team extracted 137,482 frames from these videos and then excluded any emotion category that had fewer than 1,000 samples. After training, the team used 25,000 images to validate the results.
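The filtering step described above can be pictured with a short Python sketch. This is only an illustration under assumptions, not the researchers' actual pipeline: the function name `drop_rare_categories`, its `min_samples` parameter, and the toy labels are all hypothetical.

```python
from collections import Counter


def drop_rare_categories(labels, min_samples=1000):
    """Keep only samples whose emotion category has at least `min_samples` examples.

    Hypothetical helper for illustration; returns the indices of the kept
    samples and the sorted list of categories that survived the cut.
    """
    counts = Counter(labels)
    kept_categories = {cat for cat, n in counts.items() if n >= min_samples}
    kept_indices = [i for i, label in enumerate(labels) if label in kept_categories]
    return kept_indices, sorted(kept_categories)


# Toy data: one label per extracted frame (made up for this example).
frame_labels = ["joy", "joy", "joy", "awe", "horror", "horror"]
indices, categories = drop_rare_categories(frame_labels, min_samples=2)
print(indices)
print(categories)
```

With the toy threshold of 2, the single "awe" frame is dropped while the "joy" and "horror" frames are kept. The same idea applies with a threshold of 1,000.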

They concluded that AI can accurately predict emotions like craving, sexual desire, or horror. However, it couldn’t predict confusion, awe, or surprise with great precision. The researchers said that emotions such as joy, amusement, and adoration were confusing for the model as they had similar facial features.

To further improve the model, researchers called in 18 people and measured their brain activity while showing them 112 different images. Then they showed the same images to EmoNet and compared the results.

The team said this type of research could prove very helpful for mental health applications such as therapeutics, treatments, and interventions. There’s no guarantee, however, that it will prove fruitful. A study published earlier this month, which reviewed more than 1,000 other studies, claimed that emotion recognition by AI can’t be trusted.

You can read more about EmoNet here.
