I’m a cisgender white male. I can unlock my phone, sign into my bank account, and breeze through border patrol checkpoints using my face with 98 percent accuracy.

Facial recognition software is great for people who look like me. Sure, I still face the same existential dangers as everyone else: in the US and China we live in a total surveillance state. Law enforcement can track us without a warrant and put us into categories and groups such as “dissident” or “anti-cop,” without ever investigating us. If I show my face in public, it’s likely I’m being tracked. But that doesn’t make me special.

What makes me special is that I look like a white man. My beard, short hair, and other features remind facial recognition software that I’m the “default” when it comes to how AI categorizes people. If I were black, brown, a woman, transgender, or non-binary, “the AI” would struggle or fail to identify me. And, in this domain, that means cutting-edge technology from Microsoft, Amazon, IBM, and others inherently discriminates against anyone who doesn’t look like me.

Unfortunately, facial recognition proponents often don’t see this as a problem. Scientists from the University of Colorado Boulder recently conducted a study demonstrating how poorly AI performs when attempting to recognize the faces of transgender and non-binary people. This is a problem that’s been framed as horrific by people who believe AI should work for everyone, and “not a problem” by those who think only in unnatural, binary terms.

It’s easy for a bigot to dismiss the tribulations of those whose identity falls outside of their world-view, but these people are missing the point entirely. We’re teaching AI to ignore basic human physiology.

Researcher Morgan Klaus Scheuerman, who worked on the Boulder study, appears to be a cisgender man. But because he has long hair, IBM’s facial recognition software labels him “female.”

And then there are beards. About 1 in 14 women have a condition called hirsutism that causes them to grow “excess” facial hair. Almost every human, male or female, grows some facial hair. Yet AI concludes, almost without exception, that facial hair is a male trait. Not because it is, but because it’s socially unacceptable for a woman to have facial hair.

In 20 years, if it suddenly becomes posh for women to grow beards and men to maintain a smooth face, AI trained on datasets labeled in binary gender terms would label those with beards as women, whether they are or not.

It’s important for people to understand that AI is stupid: it doesn’t understand gender or race any more than a toaster understands thermodynamics. It just mirrors how the people developing it see race and gender, meaning those who set its rewards and success parameters determine the threshold for accuracy. If you’re all white, everything’s alright.

If you’re black? You could be a member of Congress, but Amazon’s AI (the same system used by many law enforcement agencies in the US) is likely to mislabel you as a criminal instead. Google’s might think you’re a gorilla. Worse, if you’re a black woman, all of the major facial recognition systems have a strong chance of labeling you as a man.

But if you’re non-binary or transgender, things become even worse. According to one researcher who worked on the Boulder study:

> If you’re a cisgender man, or a cisgender woman, you’re doing pretty okay in these systems. If you’re a trans woman, not as well. And if you’re a trans man… looking at Amazon’s Rekognition… you’re at about 61 percent. But if we step beyond people who have binary gender identities… a hundred percent of the time you’re going to be classified incorrectly.

Facial recognition software reinforces the flawed social constructs that men with long hair are feminine, women with short hair are masculine, intersex people don’t matter, and the bar for viability in an AI product is “if it works for cisgender white men, it’s ready for launch.”

It’s easy to ignore this problem if it doesn’t affect you, because it’s hard to see the “dangers” of facial recognition software. Black people and women can use Apple’s Face ID, so we assume that this on-device example of machine learning represents the database-connected reality of general recognition. It does not.

Face ID compares the face it sees to a database that consists of just you. General recognition, such as identifying a face in the wild, relies on programmable confidence thresholds. This means that Amazon’s Rekognition, for example, can be set to 99 percent confidence (it won’t return a match unless it’s at least 99 percent sure), but then it becomes useless, as it’ll only work on white cisgender men and women with perfect portrait photos and great lighting. Law enforcement agencies lower the confidence threshold far below Amazon’s recommended minimum setting so that the system starts making “guesses” about non-whites.
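To make the threshold idea concrete, here is a minimal Python sketch, assuming a hypothetical face search that returns candidate identities with a confidence score from 0 to 100. The `Candidate` type, the `matches_above_threshold` helper, and the scores are all invented for illustration; this is not Amazon Rekognition’s API.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    """Hypothetical face-search result: an identity and a confidence score (0 to 100)."""
    identity: str
    confidence: float


def matches_above_threshold(candidates, threshold):
    """Keep only candidates whose confidence meets the threshold, best first.

    A high threshold means the system declines to answer unless it is very sure;
    a low threshold lets weaker "guesses" through as matches.
    """
    kept = [c for c in candidates if c.confidence >= threshold]
    return sorted(kept, key=lambda c: c.confidence, reverse=True)


# Hypothetical scores for one probe photo; illustrative only, not measured data.
results = [
    Candidate("person_a", 91.0),
    Candidate("person_b", 84.5),
    Candidate("person_c", 72.0),
]

strict = matches_above_threshold(results, 99.0)
loose = matches_above_threshold(results, 80.0)
print([c.identity for c in strict])  # []
print([c.identity for c in loose])   # ['person_a', 'person_b']
```

Lowering the threshold doesn’t make the underlying model any more accurate; it only changes how many low-confidence guesses get reported as matches.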

Cops, politicians, banks, airports, border patrol, ICE, UK passport offices, and thousands of other organizations and government entities use facial recognition every day despite the fact that it creates and automates cisgender white privilege.

If Microsoft released a version of Windows that demonstrably and qualitatively worked better for blacks, Asians, or Middle-Easterners, it’s likely the outrage would be enough to shake the trillion-dollar company’s iron-like vise on the technology world. Never mind that almost all AI technology that labels or categorizes people into subsets based on inherent human features such as sex, gender, and race exacerbates secondary systemic bigotry.

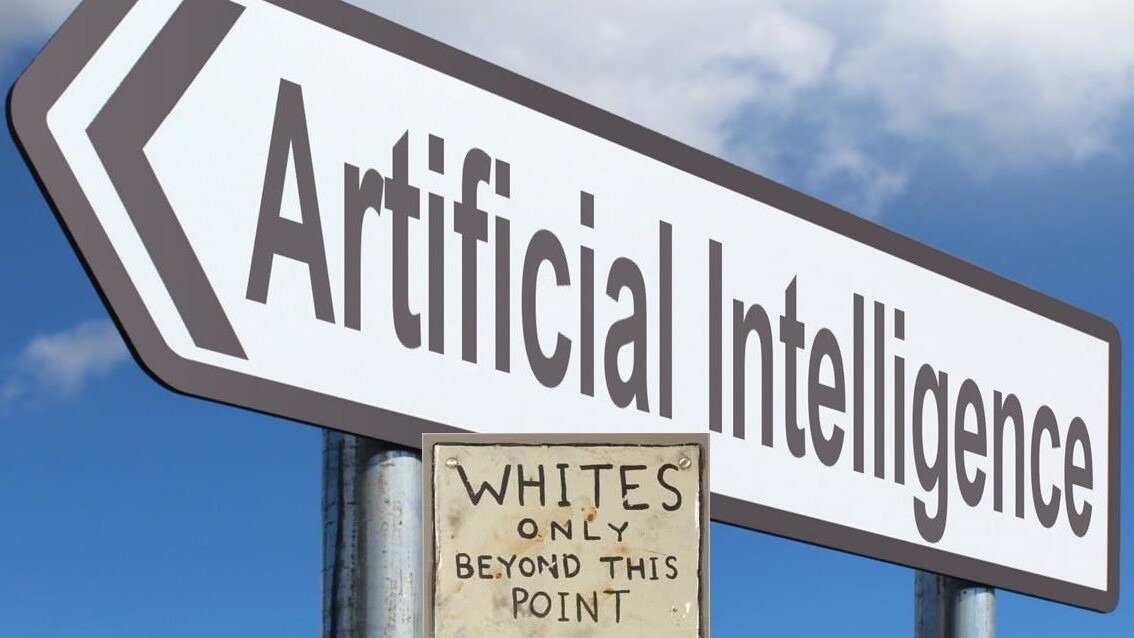

Facial recognition software designed to make things generally more efficient is a ‘cisgender whites only’ sign barring access to the future. It gives cisgender white men a premium advantage over the rest of the world.

There’s certainly hope that, one day, researchers will figure out a way to combat these biases. But, right now, any government or business deploying these technologies for broad use risks using AI, intentionally or not, to spread, codify, and reinforce current notions of bigotry, racism, and misogyny.
