Data centres gobble up roughly 2% of global electricity, which translates to around 1% of energy-related greenhouse gas emissions.

Streaming Netflix, storing stuff in the cloud, and meeting up on Zoom are just some of the online activities fuelling machines’ appetite for energy. But perhaps the biggest culprit of all is artificial intelligence.

AI models require immense amounts of computational power to train and run, particularly for machine learning and deep learning tasks. Consequently, the International Energy Agency (IEA) predicts energy use from data centres will double by 2026.

Any way you slice it, data centre energy use is a looming climate problem. So what can we do about it?

Other than pressing pause on digitalisation, there are two key solutions. The first is powering data centres with renewable energy. Tech giants have made lofty claims about cutting emissions from their data centres, but big question marks remain. More on that later.

The second, and something that a growing number of startups are working on, is extracting the most value from every kilowatt — aka energy efficiency.

Immersion cooling

Big tech companies like Nvidia are investing in more energy-efficient hardware, such as specialised AI chips designed to reduce power consumption for specific tasks. But to really tackle data centres’ energy use, you have to look at cooling.

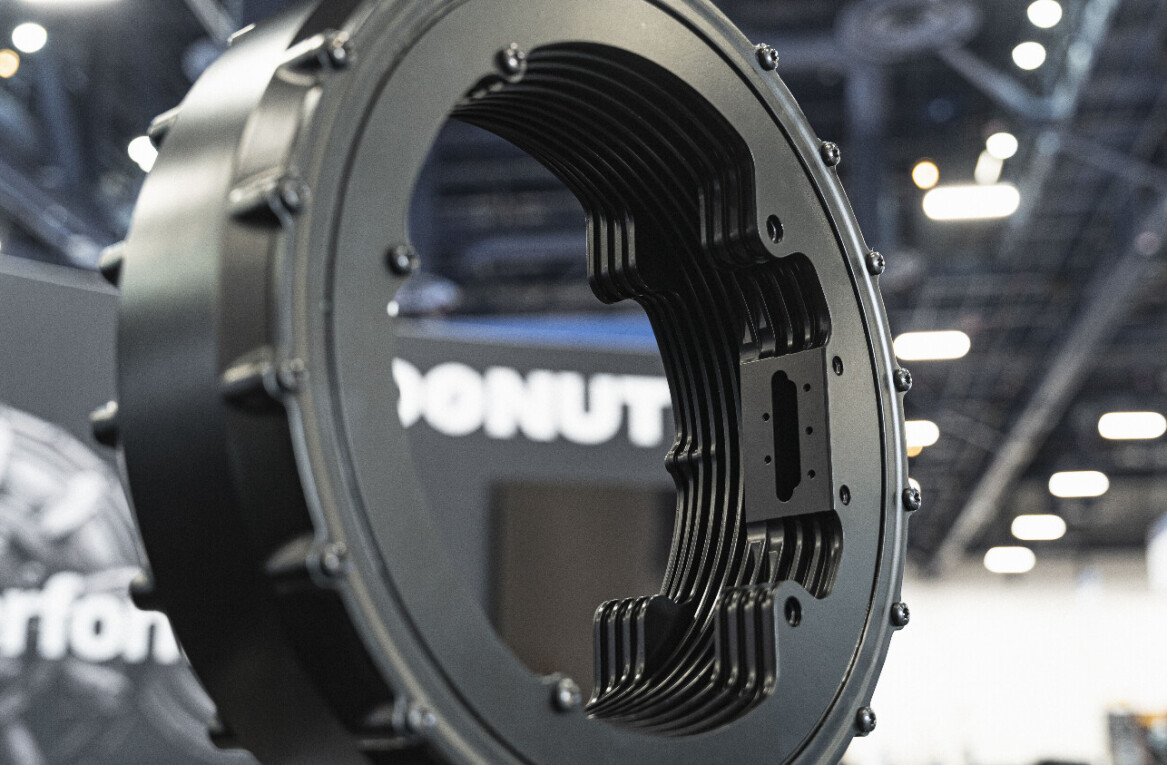

Cooling down servers alone accounts for around 40% of data centre energy use. Startups like Netherlands-based Asperitas, Spain’s Submer and UK-based Iceotope believe they have an answer — throw the servers in water.

Well, it’s not technically water at all, but a non-conductive, dielectric liquid that absorbs the heat from the servers much better than air. The heat is then transferred from the liquid to a cooling system. This method keeps the servers cool without needing fans or air conditioners.

According to a study by the University of Groningen, immersion cooling, as it is known, can cut the energy consumption of cooling a data centre in half. Immersion cooling also allows you to stack the servers closer to one another, cutting space requirements by up to two-thirds.
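Taken together, the two figures above give a rough sense of scale. Here is a back-of-the-envelope sketch in Python, assuming cooling really does account for 40% of a facility's energy use and immersion cooling really does halve that cooling energy. The function and variable names are purely illustrative.

```python
def total_savings(cooling_share: float, cooling_reduction: float) -> float:
    """Fraction of a data centre's total energy saved when the energy
    spent on cooling falls by `cooling_reduction`."""
    return cooling_share * cooling_reduction


# Figures quoted in the article; treat both as rough estimates.
COOLING_SHARE = 0.40      # cooling as a share of total data centre energy use
COOLING_REDUCTION = 0.50  # cut in cooling energy reported by the Groningen study

saving = total_savings(COOLING_SHARE, COOLING_REDUCTION)
print(f"Estimated cut in total energy use: {saving:.0%}")
```

On those assumptions, halving cooling energy trims a data centre's total energy use by roughly a fifth. Real-world savings will depend on climate, hardware and workload.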

Barcelona-based Submer, which has raised over $50mn in funding, claims its tech can cut up to 99% of data centre cooling costs. The startup, like many others, is looking to tap an emerging market that, by one estimate, could grow 35-fold over the next 12 years.

While startups like Submer are looking to reduce the energy required to cool down data centres, other companies are pursuing ways to put the heat to good use.

Repurposing data centre heat

What do AI and swimming pools have in common? They both devour huge amounts of energy, of course. London-based startup Deep Green has found a clever way to marry the two.

Deep Green installs tiny data centres at energy-intensive sites like leisure centres. Its system turns waste heat from the computers into hot water for the site.

In return, cold water from the centre is used to cool the cloud servers. The idea is that the host site gets free heating from Deep Green’s servers as they process data, while the servers in turn get free cooling.

German startup WINDCores is also looking to localise data centres. But instead of swimming pools, it is putting mini data centres inside wind turbines. The servers are powered almost exclusively by the wind and transfer data via existing fibre-optic cables.

In Norway, a trout farm is being powered by waste heat from a nearby data centre, while in Stockholm, Sweden, some 10,000 apartments receive heat provided by data centre operator DigiPlex.

All of these weird and wonderful solutions will need to scale up fast if they are to make a dent in data centres’ thirst for electricity. In January, Deep Green raised a whopping £200mn to heat between 100 and 150 swimming pools across the UK.

But ultimately, we also need to start using computing power more wisely.

“I find it particularly disappointing that generative AI is used to search the Internet,” Sasha Luccioni, a world-renowned computer scientist, recently told AFP. Luccioni says that, according to her research, generative AI uses 30 times more energy than a traditional search engine.

Striking a balance

In August, Dublin rejected Google’s application to build a new data centre, citing insufficient capacity in the electricity grid and the lack of significant on-site renewable energy to power the facility. Data centres swallowed up 21% of Ireland’s electricity last year.

The decision in Dublin is “likely to be the first of many” that will ultimately force new data centre builds to generate more clean energy either on-site or nearby, said Gary Barton, a research director at data analytics firm GlobalData.

Scrutiny over the potential costs of the AI-fuelled data centre boom comes amid mounting criticism that tech giants are overstating their progress on climate change.

A recent investigation by the Guardian newspaper found that from 2020 to 2022 the real emissions from the company-owned data centres of Google, Microsoft, Meta, and Apple were about 662% higher than officially disclosed.

Balancing the energy demands of data centres with climate and infrastructure constraints will be “crucial” for governments and service providers going forward, said Barton.

In the future, putting data centres in space or powering them using fusion energy might solve some of these problems. For now, making sure data centres (genuinely) run on green power and run more efficiently will be critical to striking this balance.
