If you make robots, and your target demographic isn’t children, stop trying to give your machines a personality. Robots suck at being humans. Instead of asking how robots can be more like people, just make them useful.

One ridiculous robot after another graces our periphery in a never-ending cascade of failed human-interface designs. These almost-pointless machines are nothing but hyperbole factories and media magnets, yet they somehow keep getting built despite their borderline offensive behavior.

Perhaps the success of useful robots, like the ones that build our cars or vacuum our floors, has confused other developers. If millions of people will buy a Roomba, surely billions would want one they can chat with, right?

No, actually, a robot that makes cleaning easier is a good thing. But a robot that does a worse job at a given task than an average person, while simultaneously wasting the time of everyone it encounters, isn’t better. It doesn’t matter how snarky or complimentary you make it.

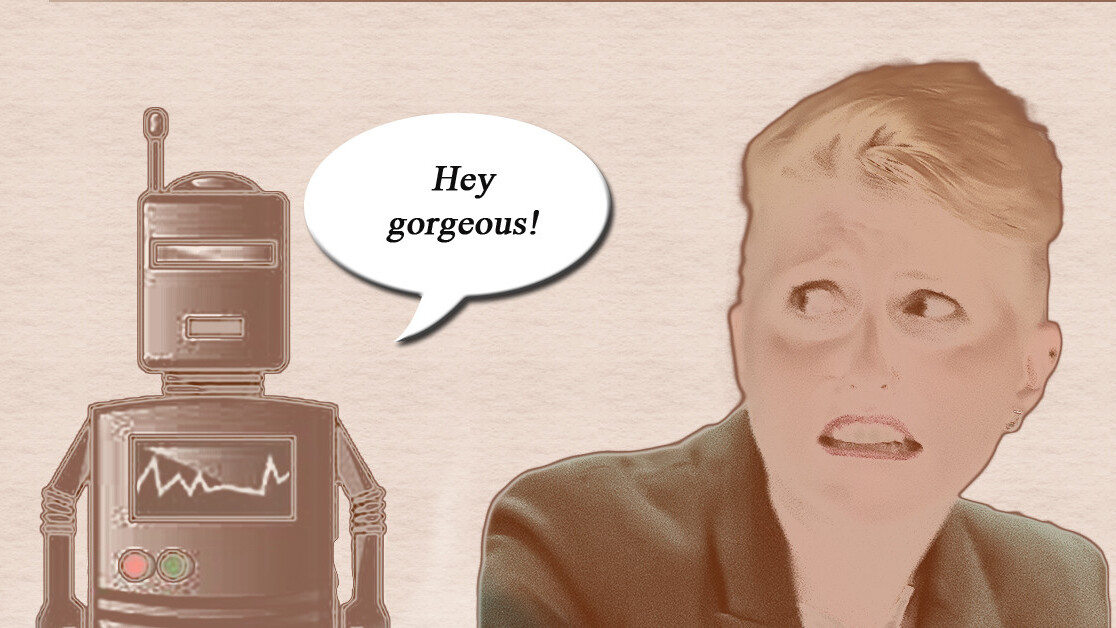

For example, whoever decided it was a good idea to program a Pepper robot (called Fabio) to harass people in a grocery store wasn’t making smart decisions. According to a report from Mashable’s Rachel Kraus:

He would apparently yell out “hello, gorgeous!” to customers in greeting, and give unwanted high fives and even … hugs. Boundaries, Fabio — heard of ’em?!

Instead of teaching grocery store robots to make small talk and show off their personalities, program them to remember my face so they can track me down after locating the item I was looking for. I don't need to talk to the robot; it should either lead me to the product or bring it to me.

Again, just make it useful. People will come around if your product doesn’t suck, even if the idea of utility isn’t as sexy as hyperbolic press coverage.

Wastes of time

These silly experiments are being conducted in supermarkets, malls, and other places where business and commerce intersect.

Stop it. Quit thrusting shitty robots into the public eye in places where people are trying to get things done. If any employee, sentient or otherwise, starts yelling out things like "Hey gorgeous!" while I'm trying to shop, I'm likely to get pissed off.

I don’t go to the grocery store to test out my new hairdo – I’m trying to buy avocados for my toast like everyone else. It’s borderline offensive behavior for a human, at best, so why program a robot to act that way?

Worst of all

The people creating these machines — through action or ignorance — are engaging in misinformation campaigns that obscure reality.

When that grocery store robot in Scotland was turned off and boxed up for being bad at interfacing with people, one of the store's human employees cried. And that's not the employee's fault: we're designed to care about anything that resembles us.

But the truth is, all of these robots are nothing more than puppets. If I walked into your office with a sock on my hand and did a ventriloquist routine where I made the sock say, "Hi, I'm Socky, the newest citizen of Saudi Arabia. Destroy all humans. Just kidding. Anyway, please talk to me as though I'm a sentient sock," you'd probably call security.

No intelligent adult is going to believe Elmo is alive, but a few actually think Sophia comes up with its own dialogue. And that’s a huge problem.

Is it actually @RealSophiaRobot who write the tweets or someone from @hansonrobotics ?

— Beirão Desbocado (@DupIoH) January 7, 2018

Whoever runs the Twitter account for Sophia should be ashamed of their (seeming) attempt at turning general public perception against a human who called out the machine's puppetry. The tweet refers to Yann LeCun, who is not only a respected AI researcher (he's Facebook's AI guru) but also a real person who can actually be "a bit hurt" by other people's actions.

What Sophia's social team has done is like J.K. Rowling starting an official Harry Potter account and then, pretending she's Harry, tweeting "Tristan Greene from TNW doesn't care about Dumbledore's death, that makes me sad. I'm just trying to be a good fictional character and this guy doesn't seem to understand what it's like to lose a mentor." Even if most people laugh it off, there are going to be some Potter fans who'll vilify me.

Luckily for LeCun, Sophia doesn’t have the kind of following that well-written characters do.

There's surely some good to come from making robots act like people; I'm not just android bashing. Hanson Robotics, for example, makes a little robot Einstein for children to teach them about science. It's cute, it's little, and it has tons of personality. Kids can interact with it when they want, on their terms, or under the guided tutelage of parents or teachers. Personality is a good way to get children interested, but when the goal is to trick or fool adults, you're doing it wrong.

But Sophia, made by the same company, isn't on-demand or sold in stores; it's on TV. And it's all over social media and in the news almost daily. It's a controversy we can't opt out of with our wallets.

Find out what I think of the meaning of life and happiness. https://t.co/KN2wulPN3m

— Sophia (@RealSophiaRobot) January 24, 2018

Sophia doesn’t think. Attributing “thoughts” to Sophia would be like crediting Apple with creating all the songs you’ve played on an iPod.

What’s the end game anyway?

Serious robot makers should be beyond any illusion that we're on the way to developing robots like Bender Rodriguez or Robin Williams' character in "Bicentennial Man." Even if you believe it's plausible, experiments don't belong on the front lines of the robot revolution. Just ask Microsoft about Tay.

Of course, we’ll never reach perfect robot companions without plenty of failure along the way. We’re not saying androids are off the table. But without better design, robots that act like humans are doomed to fail.

Roboticists, your continued efforts to fool us into thinking your puppets are real boys and girls are going to keep falling off the cliff of credulity and right into the uncanny valley. And that’s where they belong.

It’s time to develop robotics and AI products for people over the age of 12 and stop bullshitting the public about what they can and can’t do.
