Intel’s AI division is one of the unsung heroes of the modern machine-learning movement. Its talented researchers have advanced the state of AI chips, neuromorphic computing, and deep learning. And now they’re setting their sights on the unholy grail of AI: the hive mind.

Okay, that might be a tad dramatic. But every great science fiction horror story has to start somewhere.

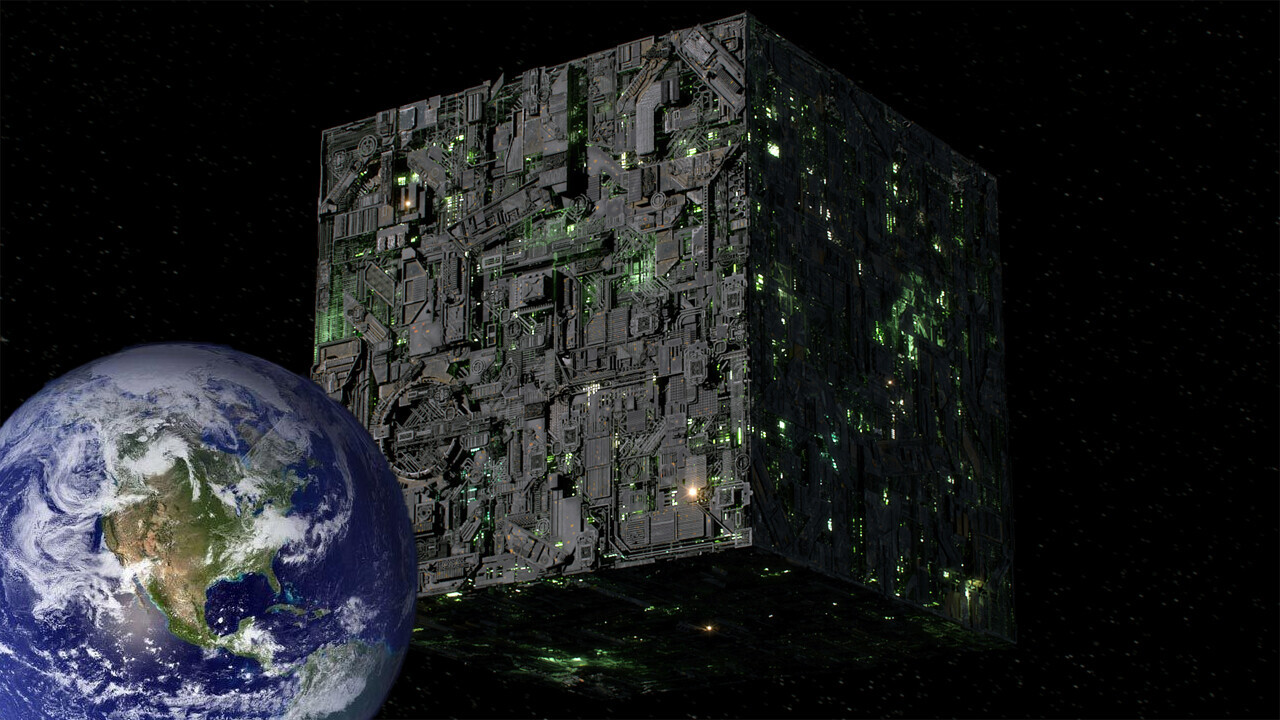

And Intel’s advances in multiagent evolutionary reinforcement learning (MERL) could make a great origin story for the Borg, Star Trek’s sentient AI that assimilates organic species into its hive mind.

MERL, aside from being a great name for a fiddle player, is Intel’s new method for teaching machines how to collaborate.

Per an Intel press release:

> We’ve developed MERL, a scalable, data-efficient method for training a team of agents to jointly solve a coordination task. … A set of agents is represented as a multi-headed neural network with a common trunk. We split the learning objective into two optimization processes that operate simultaneously.
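
To picture what “a multi-headed neural network with a common trunk” might look like, here is a minimal sketch in plain Python. It is not Intel’s code: the class name `MultiHeadedPolicy`, the layer sizes, the `tanh` activation, and the random weights are all assumptions made for illustration. The only idea taken from the press release is that every agent shares one trunk and gets its own output head.

```python
import math
import random


def make_matrix(rows, cols, rng):
    """Return a rows x cols matrix of small random weights."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


class MultiHeadedPolicy:
    """Hypothetical team policy: one shared trunk, one head per agent."""

    def __init__(self, obs_size, hidden_size, action_size, num_agents, seed=0):
        rng = random.Random(seed)
        # The trunk is shared, so every agent sees the same learned features.
        self.trunk = make_matrix(hidden_size, obs_size, rng)
        # Each agent gets its own head that maps those features to actions.
        self.heads = [
            make_matrix(action_size, hidden_size, rng) for _ in range(num_agents)
        ]

    def act(self, agent_index, observation):
        """Run the shared trunk, then the head belonging to one agent."""
        hidden = [math.tanh(h) for h in matvec(self.trunk, observation)]
        return matvec(self.heads[agent_index], hidden)


policy = MultiHeadedPolicy(obs_size=4, hidden_size=8, action_size=2, num_agents=3)
observation = [0.1, -0.2, 0.3, 0.0]
actions = [policy.act(i, observation) for i in range(3)]
for i, action in enumerate(actions):
    print(f"agent {i}: {action}")
```

Given the same observation, each agent produces a different action because only the heads differ. Sharing the trunk means whatever one agent learns about the world is available to its teammates.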

The new system is complex and involves novel machine-learning techniques, but the basic ideas behind it are actually fairly intuitive.

AI systems don’t have what the French call une raison d’être. In order for a machine to do something, it needs to be told what to do.

But, often, we want AI systems to do things without being told what to do. The whole point of a machine learning paradigm is to get the machine to figure things out for itself.

However, you still need to make the AI learn the stuff you want it to and forget everything else.

For example, if you’re trying to teach a robot to walk, you want it to remember how to move its legs in tandem and forget about trying to solve the problem by hopping on one foot.

This is accomplished through reinforcement learning, the RL in MERL. Researchers tweak the AI’s training paradigm to ensure it’s rewarded whenever it accomplishes a goal, thus keeping the machine on task.
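
The reward idea can be sketched in a few lines of Python. This is a deliberately tiny stand-in (an epsilon-greedy learner choosing between two actions), not the algorithm Intel uses, and the reward values, the `train` function, and its parameters are assumptions chosen to mirror the walking-versus-hopping example above.

```python
import random

# Assumed rewards: walking fully achieves the goal, hopping only barely does.
REWARDS = {"walk": 1.0, "hop": 0.2}


def train(steps=200, epsilon=0.2, seed=0):
    """Epsilon-greedy learner that tracks the average reward of each action."""
    rng = random.Random(seed)
    estimates = {action: 0.0 for action in REWARDS}
    counts = {action: 0 for action in REWARDS}
    for _ in range(steps):
        if rng.random() < epsilon:
            # Explore: occasionally try a random action.
            action = rng.choice(list(REWARDS))
        else:
            # Exploit: pick the action that has paid off best so far.
            action = max(estimates, key=estimates.get)
        reward = REWARDS[action]
        counts[action] += 1
        # Incremental running average of the rewards seen for this action.
        estimates[action] += (reward - estimates[action]) / counts[action]
    return estimates


estimates = train()
preferred = max(estimates, key=estimates.get)
print(estimates, preferred)
```

Because walking earns more reward than hopping, the learner’s estimates steer it toward walking, which is the “keeping the machine on task” effect described above.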

If you think about AI in the traditional sense, it works a lot like a single agent (basically, one robot brain) trying to solve a giant problem on its own.

So, for an AI brain responsible for making a robot walk, the AI has to figure out balance, kinetic energy, resistance, and what the exact limits of its physical parts are. This is not only time-consuming – often requiring hundreds of millions of iterative attempts – but it’s also expensive.

Intel’s MERL system allows multiple agents (more than one AI brain) to attack a larger problem by breaking it down into individual tasks, each handled by its own agent. The agents collaborate to speed up learning across every task. Once the individual agents have trained on their tasks, a control agent draws on their combined training to work out how to accomplish the overall goal, which in our example is making a robot walk.

If this system were people instead of AI, it’d be like the hit 1980s cartoon Voltron, where individual pilots fly individual vehicles that come together to form a giant robot more powerful than the sum of its parts.

But since we’re talking about AI, it’s probably more helpful to think of it as the aforementioned Borg. Instead of a single AI brain controlling all the action, MERL gives AI the ability to form a sort of brain network.

One might even be tempted to call it a non-sentient hive mind.
