Typing is one of the most common things we do on our mobile phones. A recent survey suggests that Millennials spend 48 minutes each day texting, while boomers spend 30 minutes.

Since the advent of mobile phones, the way we text has changed. We’ve seen the introduction of autocorrect, which corrects errors as we type, and word prediction (often called predictive text), which predicts the next word we want to type and allows us to select it above the keyboard.

Functions such as autocorrect and predictive text are designed to make typing faster and more efficient. But research shows this isn’t necessarily true of predictive text.

A study published in 2016 found predictive text wasn’t associated with any overall improvement in typing speed. But this study only had 17 participants – and all used the same type of mobile device.

In 2019, my colleagues and I published a study in which we looked at mobile typing data from more than 37,000 volunteers, all using their own mobile phones. Participants were asked to copy sentences as quickly and accurately as possible.

Participants who used predictive text typed an average of 33 words per minute. This was slower than those who didn’t use an intelligent text entry method (35 words per minute) and significantly slower than participants who used autocorrect (43 words per minute).

Breaking it down

It’s interesting to consider the poor correlation between predictive text and typing performance. The idea seems to make sense: if the system can predict your intended word before you type it, this should save you time.

In my most recent study on this topic, a colleague and I explored the conditions that determine whether predictive text is effective. We combined some of these conditions, or parameters, to simulate a large number of different scenarios and therefore determine when predictive text is effective – and when it’s not.

We built a couple of fundamental parameters associated with predictive text performance into our simulation. The first is the average time it takes a user to hit a key on the keyboard (essentially a measure of their typing speed). We estimated this at 0.26 seconds, based on earlier research.

The second fundamental parameter is the average time it takes a user to look at a predictive text suggestion and select it. We fixed this at 0.45 seconds, again based on existing data.
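To get a feel for these two numbers, here is a back-of-the-envelope calculation in Python. It is only a sketch, not part of the study. It assumes the common typing-test convention that one "word" is five characters, and the function names are made up for illustration.

```python
import math

KEY_TIME_S = 0.26      # average time per key press (figure quoted in the article)
SELECT_TIME_S = 0.45   # average time to look at and select a suggestion (from the article)
CHARS_PER_WORD = 5     # assumption: the usual words-per-minute convention


def plain_typing_wpm(key_time_s=KEY_TIME_S, chars_per_word=CHARS_PER_WORD):
    """Idealised words per minute when every character is typed by hand."""
    return 60.0 / (key_time_s * chars_per_word)


def break_even_keystrokes(key_time_s=KEY_TIME_S, select_time_s=SELECT_TIME_S):
    """Fewest whole key presses one selection must replace to save any time."""
    return math.floor(select_time_s / key_time_s) + 1


print(round(plain_typing_wpm(), 1))   # idealised ceiling, ignoring pauses and corrections
print(break_even_keystrokes())
```

With these figures, one look at the suggestions costs about as much as 1.7 key presses, so a selection has to replace at least two key presses before it saves any time at all.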

Beyond these, there’s a set of parameters that are less clear-cut. These reflect the way the user engages with predictive text – or their strategies, if you like. In our research, we looked at how different approaches to two of these strategies influence the usefulness of predictive text.

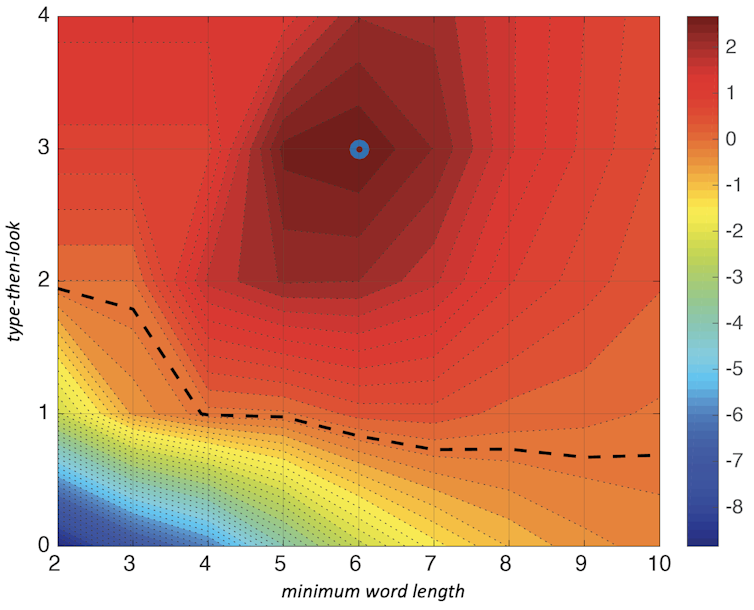

The first is minimum word length. This means the user will tend to only look at predictions for words beyond a certain length. You might only look at predictions if you’re typing longer words, beyond, say, six letters – because these words require more effort to spell and type out. The horizontal axis in the visualisation shows the effect of varying the minimum length of a word before the user seeks a word prediction, from two letters to ten.

The second strategy, “type-then-look”, governs how many letters the user will type before looking at word predictions. You might only look at the suggestions after typing the first three letters of a word, for example. The intuition here is that the more letters you type, the more likely the prediction will be correct. The vertical axis shows the effect of the user varying the type-then-look strategy from looking at word predictions even before typing (zero) to looking at predictions after one letter, two letters, and so on.

A final latent strategy, perseverance, captures how long the user will keep typing and checking word predictions before giving up and typing out the word in full. It would have been insightful to see how variation in perseverance affects the speed of typing with predictive text, but even with a computer model there were limits to the number of parameters we could vary.

So we fixed perseverance at five, meaning if there are no suitable suggestions after the user has typed five letters, they will complete the word without consulting predictive text further. Although we don’t have data on the average perseverance, this seems like a reasonable estimate.
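The way these strategies interact can be sketched in a few lines of Python. This is a rough illustration and not the model used in the study. The function `word_entry_time` and its `hit_at` argument are hypothetical: `hit_at` stands in for a real prediction engine by stating after how many typed letters the right word first appears among the suggestions (`None` if it never does). The sketch also assumes that every look at the suggestions costs the full 0.45 seconds, whether or not the word is there, and it ignores the space key.

```python
def word_entry_time(word_length, hit_at, min_word_length, type_then_look,
                    perseverance=5, key_time=0.26, select_time=0.45):
    """Seconds needed to enter one word under a simplified strategy model."""
    # Short words: the user never consults the suggestions.
    if word_length < min_word_length:
        return word_length * key_time

    # Type-then-look: type some letters before the first glance.
    typed = min(type_then_look, word_length)
    elapsed = typed * key_time

    # Keep checking after each letter until the word is done or
    # the user has typed `perseverance` letters without success.
    while typed < word_length and typed <= perseverance:
        elapsed += select_time              # look at the suggestions
        if hit_at is not None and typed >= hit_at:
            return elapsed                  # suggestion selected, word complete
        typed += 1
        elapsed += key_time                 # no luck: type one more letter

    # Gave up (or ran out of letters): type the rest by hand.
    return elapsed + (word_length - typed) * key_time


hit = word_entry_time(8, hit_at=3, min_word_length=6, type_then_look=3)
miss = word_entry_time(8, hit_at=None, min_word_length=6, type_then_look=3)
plain = 8 * 0.26
print(f"hit: {hit:.2f}s, miss: {miss:.2f}s, no prediction: {plain:.2f}s")
```

Under these assumptions, an eight-letter word that is predicted after three letters takes about 1.23 seconds, against 2.08 seconds typed by hand. The same word takes about 3.43 seconds if the right suggestion never appears, because each unsuccessful glance adds time without saving any key presses.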

What did we find?

Above the dashed line, there’s an increase in net entry rate, while below it, predictive text slows the user down. The deep red shows when predictive text is most effective: an improvement of two words per minute compared to not using predictive text. The blue is when it’s least effective. Under certain conditions in our simulation, predictive text could slow a user down by as much as eight words per minute.

The blue circle shows the optimal operating point, where you get the best results from predictive text. This occurs when word predictions are only sought for words with at least six letters and the user looks at a word prediction after typing three letters.

So, for the average user, predictive text is unlikely to improve performance. And even when it does, it doesn’t seem to save much time. The potential gain of a couple of words per minute is much smaller than the potential time lost.

It would be interesting to study long-term predictive text use and look at users’ strategies to verify that our assumptions from the model hold in practice. But our simulation reinforces the findings of previous human research: predictive text probably isn’t saving you time – and could be slowing you down.

Article by Per Ola Kristensson, Professor of Interactive Systems Engineering, University of Cambridge

This article is republished from The Conversation under a Creative Commons license. Read the original article.
