NASA has unveiled images of the first craters on Mars to be discovered by AI.

The AI system spotted the craters by scanning photos taken by NASA’s Mars Reconnaissance Orbiter, which launched in 2005 to study the history of water on the Red Planet.

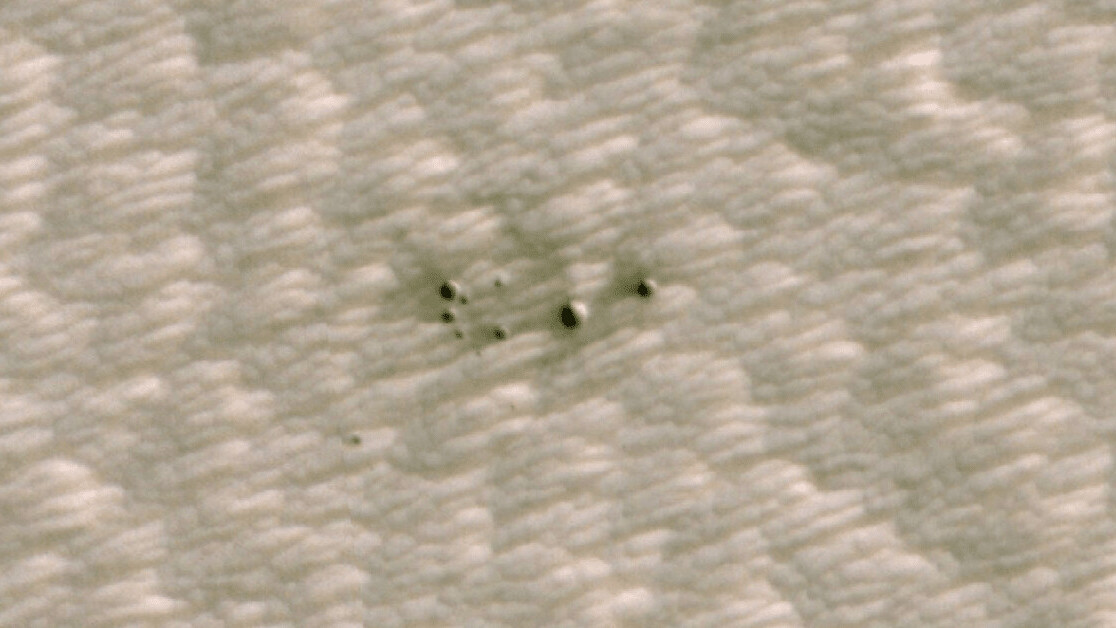

The cluster it detected was created by fragments of a single meteor that broke apart while flying through the Martian sky at some point between March 2010 and May 2012. The pieces landed in a region called Noctis Fossae, a long, narrow, shallow depression on Mars, and left behind a series of craters spanning about 100 feet (30 meters) of the planet’s surface.

The largest of the craters was about 13 feet (4 meters) wide, a relatively small dent that is tricky for the human eye to spot.

Scientists would normally search for these craters by laboriously scanning, by eye, images captured by the Mars Reconnaissance Orbiter’s Context Camera. The camera takes low-resolution pictures that each cover hundreds of miles of terrain, and it takes a researcher around 40 minutes to scan a single image.

To save time and increase the number of future findings, scientists and AI researchers at NASA’s Jet Propulsion Laboratory (JPL) in Southern California teamed up to develop a tool called the automated fresh impact crater classifier.

They trained the classifier by feeding it 6,830 Context Camera images. This dataset contained a range of previously confirmed craters, as well as pictures with no fresh impacts to show the AI what not to look for.
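The article does not describe the classifier's internals. To illustrate the general idea of learning from both positive examples (confirmed craters) and negative examples (images with no fresh impacts), here is a toy perceptron in Python. It is not JPL's model: the two numeric features per image are hypothetical stand-ins for whatever the real system measures, and the sample values are invented purely for illustration.

```python
# Toy illustration only: a perceptron learning to separate "crater" (label 1)
# from "no fresh impact" (label 0). Features are hypothetical, not JPL's.

def predict(w, b, features):
    """Return 1 if the weighted score is positive, else 0."""
    score = sum(wi * xi for wi, xi in zip(w, features)) + b
    return 1 if score > 0 else 0

def train_perceptron(examples, epochs=20, lr=0.1):
    """Learn weights w and bias b from (features, label) pairs."""
    w = [0.0] * len(examples[0][0])
    b = 0.0
    for _ in range(epochs):
        for features, label in examples:
            error = label - predict(w, b, features)  # 0 if correct, +1 or -1 if wrong
            if error != 0:
                # Nudge the decision boundary toward the correct answer.
                w = [wi + lr * error * xi for wi, xi in zip(w, features)]
                b += lr * error
    return w, b

# Hypothetical features: (darkness of a smudge, contrast with surroundings)
positives = [((0.9, 0.8), 1), ((0.8, 0.9), 1), ((0.7, 0.7), 1)]  # "confirmed craters"
negatives = [((0.1, 0.2), 0), ((0.2, 0.1), 0), ((0.3, 0.2), 0)]  # "no fresh impacts"

w, b = train_perceptron(positives + negatives)
print(predict(w, b, (0.85, 0.75)))  # prints 1: a new, crater-like example
```

The negative examples matter as much as the positives: without them, a model has no signal about what an ordinary, crater-free patch of terrain looks like.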

They then applied the classifier to the Context Camera’s entire archive of about 112,000 images. This cut the 40 minutes it typically takes a scientist to check an image down to an average of just five seconds. However, a human still needed to check the classifier’s work.
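To put those figures in perspective, the short Python calculation below scales the per-image times up to the whole archive. It is a back-of-the-envelope sketch that assumes a single reviewer or a single machine working sequentially with no breaks, and that the quoted averages (40 minutes per image for a person, five seconds for the classifier) apply uniformly across all 112,000 images.

```python
def review_hours(num_images, seconds_per_image):
    """Total review time in hours for one reviewer (or machine) working sequentially."""
    return num_images * seconds_per_image / 3600

ARCHIVE_SIZE = 112_000       # approximate number of Context Camera images
HUMAN_SECONDS = 40 * 60      # ~40 minutes per image for a scientist
CLASSIFIER_SECONDS = 5       # ~5 seconds per image, on average, for the classifier

human = review_hours(ARCHIVE_SIZE, HUMAN_SECONDS)
machine = review_hours(ARCHIVE_SIZE, CLASSIFIER_SECONDS)

print(f"Human:      {human:,.0f} h (~{human / (24 * 365):.1f} years without breaks)")
print(f"Classifier: {machine:,.0f} h (~{machine / 24:.1f} days)")
print(f"Per-image speed-up: {HUMAN_SECONDS / CLASSIFIER_SECONDS:.0f}x")
```

Under these assumptions, a person would need roughly 74,667 hours (about 8.5 years of nonstop work), while the classifier needs about 156 hours, a 480-fold speed-up per image. Splitting the work across many computers would shorten the classifier's wall-clock time further.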

“AI can’t do the kind of skilled analysis a scientist can,” said JPL computer scientist Kiri Wagstaff. “But tools like this new algorithm can be their assistants. This paves the way for an exciting symbiosis of human and AI ‘investigators’ working together to accelerate scientific discovery.”

Finally, the team used the orbiter’s HiRISE camera, which can resolve features as small as a kitchen table, to confirm that the dark smudge flagged in the Context Camera image was indeed a cluster of craters.

The classifier currently runs on dozens of high-performance computers at JPL. The team now wants to develop similar systems that could run on board Mars orbiters.

“The hope is that in the future, AI could prioritize orbital imagery that scientists are more likely to be interested in,” said Michael Munje, a Georgia Tech graduate student who worked on the classifier.
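The article does not say how on-board prioritization would work. One simple way to picture it is sketched below in Python, assuming a hypothetical model that gives each captured image an "interest score" and a fixed budget of images that can be sent back to Earth. The `OrbitalImage` class, the image IDs, and the scores are all invented for illustration.

```python
import heapq
from dataclasses import dataclass

@dataclass
class OrbitalImage:
    image_id: str          # hypothetical image identifier
    interest_score: float  # hypothetical model output: higher = more likely to interest scientists

def prioritize(images, budget):
    """Return up to `budget` images, highest interest score first."""
    return heapq.nlargest(budget, images, key=lambda img: img.interest_score)

captured = [
    OrbitalImage("img_a", 0.12),
    OrbitalImage("img_b", 0.91),
    OrbitalImage("img_c", 0.47),
    OrbitalImage("img_d", 0.78),
]

for img in prioritize(captured, budget=2):
    print(img.image_id, img.interest_score)  # img_b 0.91, then img_d 0.78
```

`heapq.nlargest` keeps only the top `budget` items rather than sorting the whole queue, which is a reasonable fit when an orbiter captures far more images than it can transmit.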

The researchers believe the tool could help paint a fuller picture of meteor impacts on Mars, which may hold geological clues about life on the planet.
