It is hardly surprising that Artificial Intelligence was a major focus of Microsoft’s Build 2017 conference. In fact, given the rapid advancements in all areas of AI technology and the raging debates about if – or rather, when – robots will take over our jobs, it would be surprising if one of the world’s biggest technology companies weren’t thinking about these problems in a big way.

When Harry Shum – Executive Vice President of Microsoft’s AI and Research group – took to the main stage on Monday, he said that it will soon be almost impossible to imagine a technology that doesn’t tap into the power of AI in one way or another. What made this possible, he continued, was the convergence of three forces: increased cloud computing power, powerful algorithms built on deep neural networks, and access to massive datasets. This means that AI does indeed have the potential to disrupt every single industry and process out there.

But while the disruption does seem inevitable, companies like Microsoft are betting they can make it a positive one, pointing to AI’s potential to amplify human ingenuity, augment people’s capabilities and help them be more productive.

“I’m really glad we’re having all these conversations about the disruptive power of AI, and it’s a good thing that we’re being so thoughtful about the ways in which we’re designing these systems, because it’s crucial that they are designed for people, to help them do their jobs better,” says Lili Cheng, distinguished engineer and general manager of Microsoft’s FUSE Labs.

Microsoft has been creating building blocks for the current wave of AI breakthroughs for more than two decades, and now it wants to combine that accumulated research with the massive amounts of data available to it through the Microsoft Graph portfolio of products and with Azure’s computing power, moving towards its vision of the “Intelligent Cloud.”

“One of the things we’ve done with our bot framework and the cognitive services is that we componentized things so that they can be reused in other contexts. For Cognitive Services there are 29, and you can choose whichever ones you want,” explains Cheng.

By adding just a few lines of code, developers can mix, match and customize AI functionality to suit their needs, spanning features such as translation, video deconstruction and search, gesture recognition and real-time captioning.

Broadly speaking, then, Cognitive Services are plug-in capabilities that developers can use to enable their apps to hear, speak, understand and interpret human needs. For example, LUIS (Language Understanding Intelligent Service) helps developers integrate language models that interpret what users mean, using either prebuilt or custom models. The Custom Vision Service, meanwhile, makes it easy to create your own image recognition service.

The overarching idea is to move towards what the company calls “conversational AI” (in which, instead of humans having to learn how computers work, computers learn to understand humans), enabling more fluid and natural interactions between people and machines.

Conversational AI clearly includes Cortana, Microsoft’s intelligent personal assistant, which is already used by 145 million people around the world. The company is focusing heavily on making it easier for developers to reach this growing audience, partnering with companies such as HP, Intel and Harman on IoT devices like the Invoke intelligent speaker.

Cortana is only part of the picture, however. This push extends the Cognitive Services offering the company launched back in 2015, which has since attracted more than 568,000 developers from over 60 countries. Microsoft now wants to redefine itself around AI by infusing it into every product and service it offers, from Xbox to Windows and from Bing to Office.

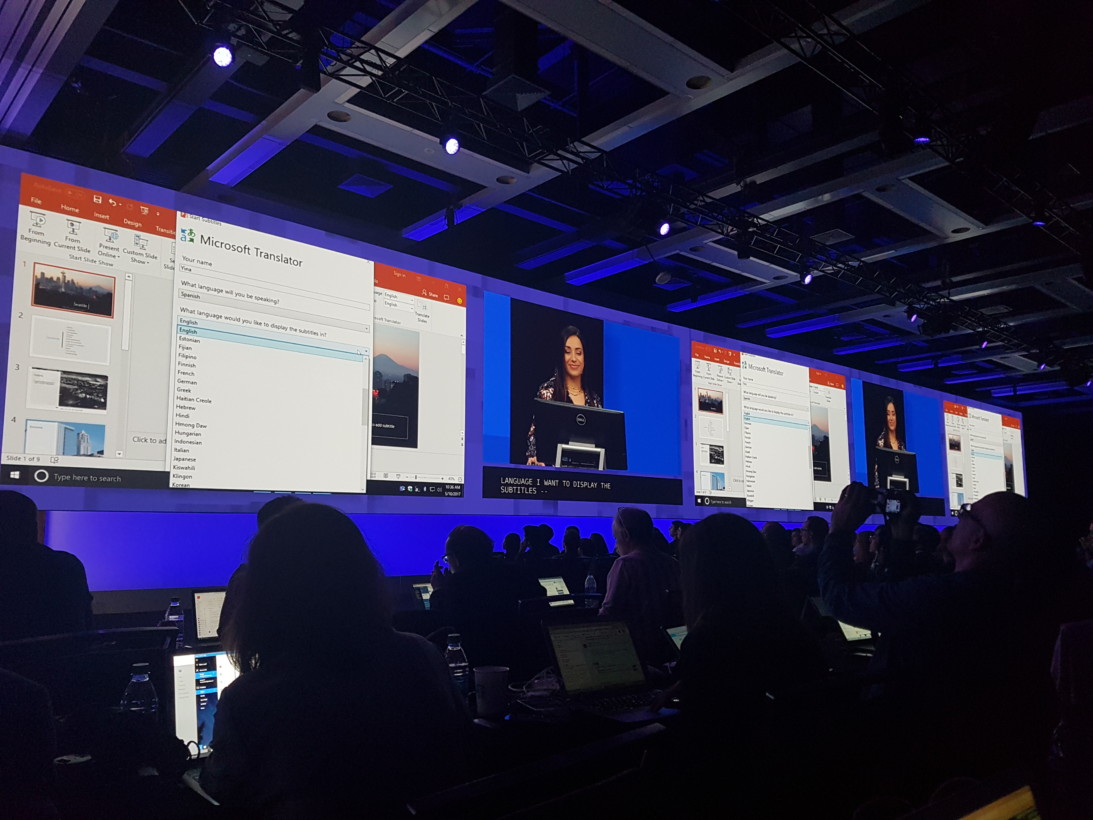

One example was a keynote demo showing how Microsoft’s translation APIs could provide real-time translation into multiple languages during any PowerPoint presentation. It not only displays live captions of the presenter’s speech in a chosen language, but also generates a unique link that lets attendees receive live translations in their own preferred languages, all in real time.

Other such features include Project Prague, an early-stage SDK that matches captured user movement against an extensive library of hand poses. This lets developers tie app actions to gestures and create a more intuitive user experience through gesture interaction.

The ease with which developers can incorporate these elements into their apps, together with this growing emphasis on natural interaction, means that AI has the potential to become a much more creative tool across the board, rather than something only data scientists can leverage and understand. This democratization of AI is in fact a cornerstone of Microsoft’s vision, and something Cheng is very keen to enable:

“You need to make people laugh, and you need creativity and you need all this stuff, because that’s what being human is about. This better be a creator platform for writers and other non-technical people. AI can’t be just for people with PhDs; we want people who are writers, artists or builders to be able to use those tools to get their jobs done better. We’re super committed to that. There’s a lot more to AI than writing code, and I think that has to be our goal, to make people want to make the things they live in all day,” she concludes.
