While in recent years Microsoft has developed a reputation for having to play catch-up in many parts of its business, from mobile to search and even on the desktop, a healthy amount of blue-sky thinking about the future actually goes on at the company.

Microsoft Research consists of a network of labs around the world employing around 1,000 researchers in total. At the company’s Think Next event in Tel Aviv, Israel, this week, I met Professor Shahram Izadi, who is based in the UK’s hardware innovation capital, Cambridge. Izadi and his team work on interactive 3D technologies that aim to build on the likes of Kinect and take them to another level.

When Izadi joined Microsoft, the buzz area was multitouch, back in the days before the iPhone brought that technology to the masses. “It was the first wave of natural human interfaces, it was an exciting way of directly interacting with computing content,” he says. Since then, the arrival of Kinect has moved his work into the field of full-body interaction.

Izadi clearly has a lot of fun with his work (“A lot of the time it feels like play,” he admits). He is largely concerned with exploring what technology might be like in five or ten years’ time rather than trying to develop the next hit piece of kit.

Connecting to Kinect

Izadi’s team recently unveiled KinÊtre, a technology that lets humans ‘possess’ 3D models. You can take any 3D digital object and map it to your own body. So, you could set your legs to control the legs of a chair, for example. A Kinect camera then tracks your movement and allows you to make the chair ‘walk’ in a human-like way. The result is as entertaining as it is impressive, often giving objects a quality that resembles the dancing furniture in Disney’s Beauty and the Beast.

Building on earlier technology developed by Izadi’s team, KinÊtre supports 3D objects created by scanning the shape of a real-life object with a Kinect camera. Pre-created 3D models can also be imported. As the video below shows, two people can even team up to ‘possess’ a horse.

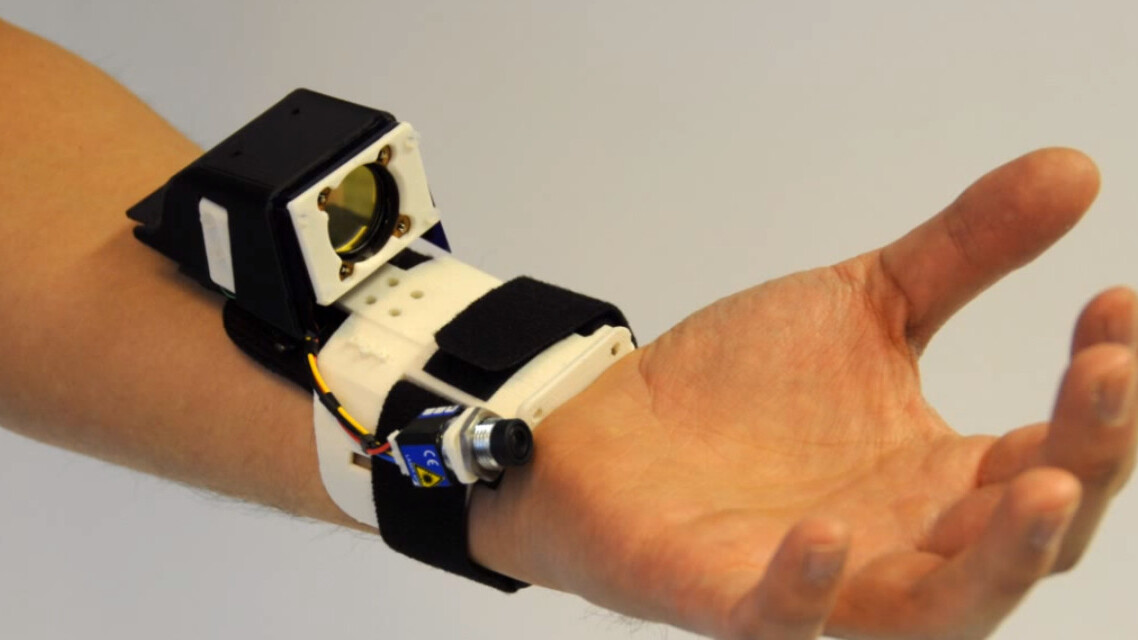

The latest research revealed by the Cambridge team is Digits, a more subtle, intimate take on the kind of gesture control offered by Kinect. It utilises a small camera worn on a wrist strap, similar to a Kinect camera but tracking 2D movement rather than 3D.

The camera picks up on finger movements that can be used to control software. Imagine, for example, an app that translates sign language into written text, or being able to control the slides of a presentation using simple finger movements. There are more examples in this video.

“I think that’s where the future is heading,” Izadi says. “Computers don’t really have a sense of space, they don’t have a notion of the world around them – in particular, the user that they’re interacting with. What we’re trying to do is give computers that sense of space, so they can understand the 3D geometry of the environment, and also understand in a little more detail the user that’s interacting with them.”

Where are we going?

With the likes of Google’s Project Glass and Microsoft’s Digits system promising to more intimately connect the physical world with the digital one, are we embarking on a path that will lead to the ‘cyborgisation’ of humans? “What we’re doing is augmenting the senses of humans, whether it’s your visual sense, tactile sense or audio sense,” says Izadi.

“What we’re trying to do is bring some sort of augmented reality to real-world life, and I think that’s a vision that a lot of people have thought about for twenty-odd years and it’s finally about to happen because of the technology advances that are around the corner, so it’s an exciting time.

“I think we’re a way off human cyborgs, which has a kind of doomsday feel to it, but definitely augmented reality is the next big thing.”

The potential of this technology isn’t limited to consumer electronics and computing either. Izadi is personally excited about the potential for Kinect-style technology to be used in other fields. “One project that I think would be really cool would be if you could use 3D scanning technologies underwater to understand the evolution of the coral and sealife, and use that for understanding climate change.”

We may still be a long way from computer body implants and cyborgs (which personally I think would be rather cool), but technology of the kind being developed by Izadi’s team is pointing to an interesting future in the meantime.

You can listen to our conversation in the recording embedded below.

Microsoft paid for The Next Web’s trip to Think Next but has no influence at all over anything we publish.