The 21st century has turned out to be an era of exponential growth in the field of machine learning. Go, the roughly 3,000-year-old game that computer scientists predicted would take another decade to crack, was conquered by DeepMind’s AlphaGo AI, which defeated multiple-time world champion Lee Sedol.

And, by the way, this Chinese game has more possible board configurations than the estimated number of atoms in the universe. In short, this game can’t be won just by running through all the possible moves, which is roughly what IBM’s Deep Blue did in 1997 to defeat world chess champion Garry Kasparov.

Then came the rise of OpenAI’s bot in Dota 2 and other fun (potentially harmful) stuff like deepfakes. Research communities are thriving in ML, going from 100 papers submitted annually 10 years ago to 100 per day in 2019 on arXiv alone.

But, all of that aside, the point is that ML is highly math-intensive.

While libraries like TensorFlow and PyTorch have made a significant contribution to making ML accessible to developers, there is still a steep learning curve in knowing how to create models, train them, and save them for later use in our tasks.

That is where ml5.js comes in, a library based on TensorFlow.js, which was launched last year in March, taking the vision even further.

Why ml5.js

“ml5.js aims to make machine learning approachable for a broad audience of artists, creative coders, and students. The library provides access to machine learning algorithms and models in the browser.” — Official developers

In the browser. Yes! No installation required, which spares you the pain of installing multiple data-science libraries and making sure all their versions work in harmony, which, believe me, is at times no easy task.

But, what do I need?

- Download the code from this GitHub repo. It has two folders: one for detecting poses using a webcam as input, and the other using a video file as input.

- VS Code (optional) to read the code.

A brief intro to human pose estimation

Let’s take a small example. We want to use machine learning to find pictures with human faces in a folder with all the pictures taken during your latest vacation trip.

So, we take a neural network, which is a machine learning model (awesome beginner video to understand what it is), train it on a lot of data with random human faces in it, and then use the same model to detect human faces in our folder.

Neural networks these days come in a lot more flavors than Baskin-Robbins has in ice cream. (If you are wondering, it’s 31.) Some are good at dealing with images, some with textual data, some with time series like sound, and so on.

In our case, we use a convolutional neural network, a.k.a. a CNN, to deal with images.

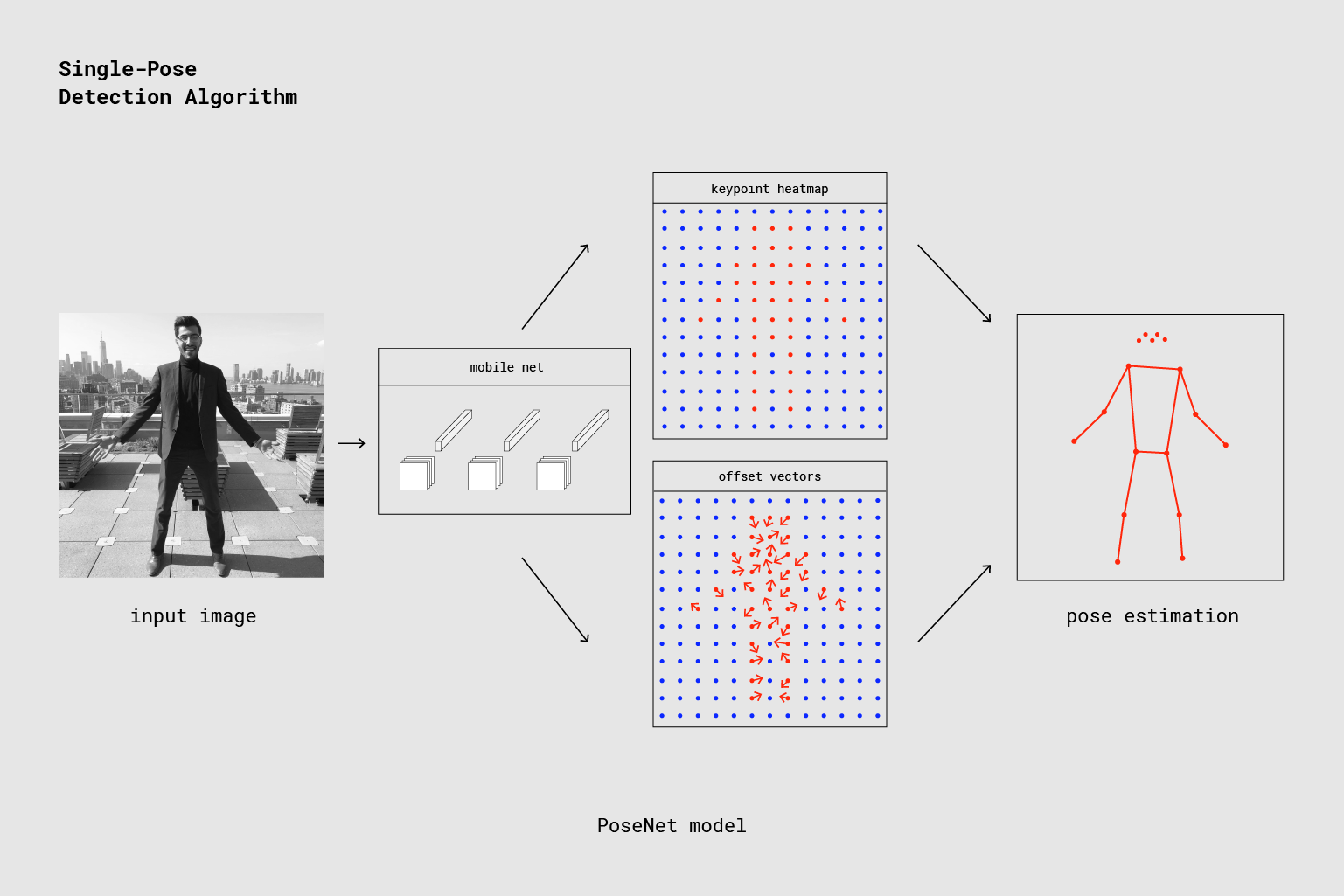

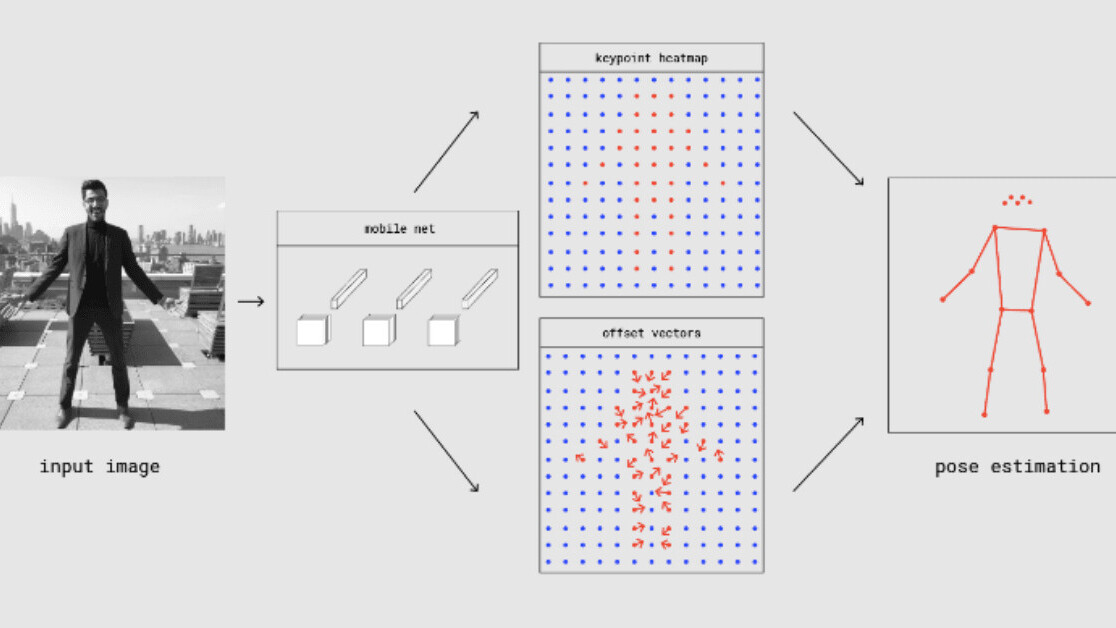

ml5.js is a wrapper around TensorFlow.js, which also provides the PoseNet model: a ready-to-use model that has a pre-trained CNN inside it, takes an image as input, and outputs keypoint heatmaps and offset vectors.

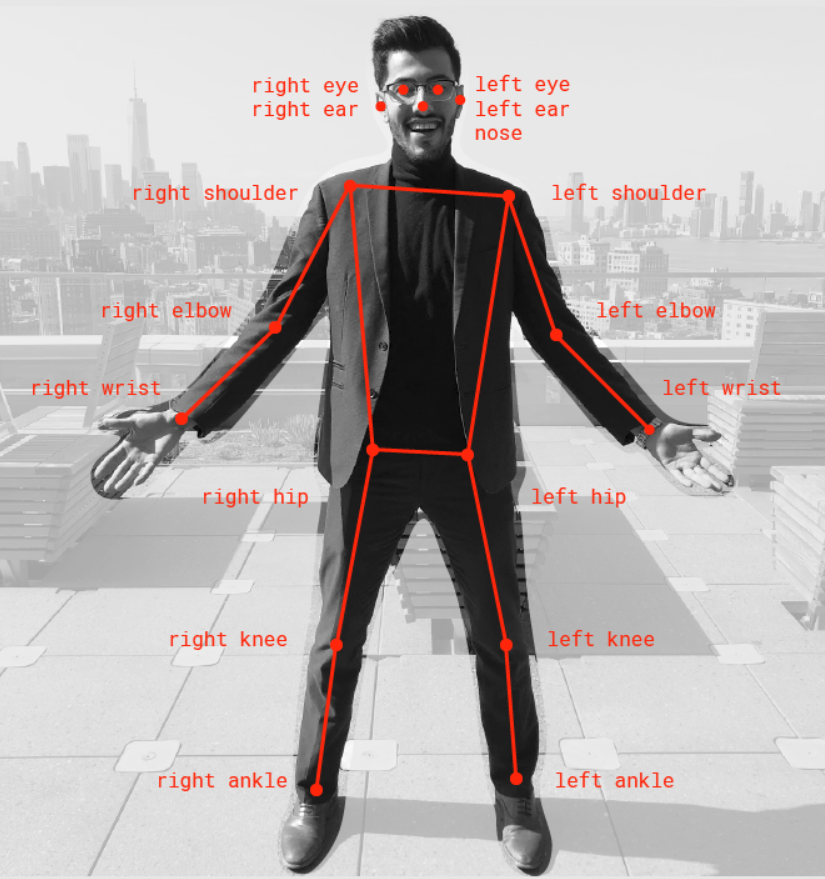

By using these representations and a little bit of math magic, we finally find the 17 keypoints, as shown in the image, to detect a complete human pose.

The model also reports how confident it is by giving each of the 17 points a keypoint confidence score on a scale of 0–1 (where 1 means 100% confident and 0.56 means 56%).

It also returns an overall pose confidence score for detecting a human pose in the image, also on a scale of 0–1.
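To take some of the mystery out of the "math magic", here is a minimal sketch of how a single keypoint could be recovered from a heatmap and its offset vectors: pick the heatmap cell with the highest score, scale its grid position by the output stride, then nudge it by the offset stored for that cell. The helper name decodeKeypoint and the toy 2x2 data are made up for illustration, and the real PoseNet decoding handles more details than this.

```javascript
// Hypothetical helper (not part of ml5.js): decode one keypoint.
// heatmap:      2D array [row][col] of confidence scores between 0 and 1
// offsets:      2D array [row][col] of { x, y } pixel offsets for this keypoint
// outputStride: number of input pixels covered by one heatmap cell (e.g. 16)
function decodeKeypoint(heatmap, offsets, outputStride) {
  // Find the heatmap cell with the highest confidence.
  let best = { row: 0, col: 0, score: -Infinity };
  for (let row = 0; row < heatmap.length; row++) {
    for (let col = 0; col < heatmap[row].length; col++) {
      if (heatmap[row][col] > best.score) {
        best = { row: row, col: col, score: heatmap[row][col] };
      }
    }
  }

  // Map the coarse grid cell back to image pixels, then refine with the offset.
  const offset = offsets[best.row][best.col];
  return {
    position: {
      x: best.col * outputStride + offset.x,
      y: best.row * outputStride + offset.y,
    },
    score: best.score, // becomes the keypoint confidence score
  };
}

// Toy data: the strongest cell is at row 1, column 0.
const heatmap = [
  [0.1, 0.2],
  [0.9, 0.3],
];
const offsets = [
  [{ x: 0, y: 0 }, { x: 0, y: 0 }],
  [{ x: 3, y: -2 }, { x: 0, y: 0 }],
];

const keypoint = decodeKeypoint(heatmap, offsets, 16);
console.log(keypoint); // position { x: 3, y: 14 } with score 0.9
```

The heatmap only says roughly where a body part is, on a coarse grid. The offset vector is what recovers pixel-level precision.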

Coding time

We will use a webcam as the video input to our pose estimation model and show the output on our main page index.html.

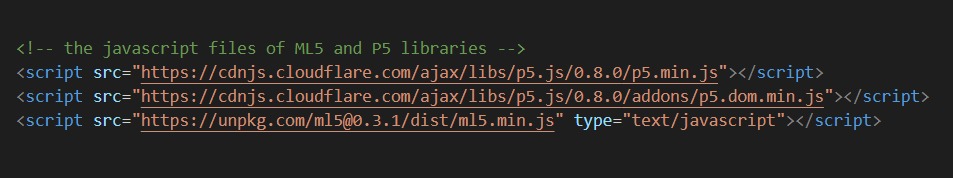

We are using two libraries here:

- ml5.js for creating and running our ML model.

- p5.js for getting the webcam video feed and displaying output in our browser.

I’ve added extensive documentation inside the code, explaining every single line. Here, we will discuss the crux, which is most of the code anyway.

Our code consists of two files:

- poseNet_webcam.js, our JavaScript code.

- index.html, the main page to show output.

poseNet_webcam.js

p5.js runs two functions:

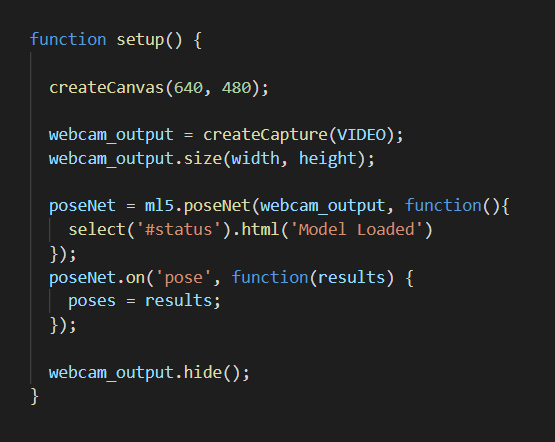

- function setup(). The first function that is executed, and it runs only once. We will do our initial setup in it.

- function draw(). This function is called on repeat forever (unless you plan on closing the browser or pressing the power button).

createCanvas(width, height) is provided by p5 to create a box in the browser to show our output. Here, canvas has width: 640px and height: 480px.

createCapture(VIDEO) is used to capture a webcam feed and return a p5 element object, which we will name webcam_output. We set the webcam video to the same width and height as our canvas.

ml5.poseNet() creates a new PoseNet model, taking as input:

- Our present webcam output.

- A callback function, which is called when the model is successfully loaded. Inside our index.html file, we have created an HTML paragraph with the ID status, showing the current status text to the user. We change that text to Model Loaded so the user knows the model is ready, as it takes a bit to load.

poseNet.on() is a trigger or event listener. Whenever the webcam gives a new image, it is given to the PoseNet model.

The moment a pose is detected and the output is ready, it calls function(results), where results is the final output of keypoints and scores given by the model.

We store this in our poses array, which is globally defined and can be used anywhere in our code. webcam_output.hide() hides the webcam output for now, as we will modify the images and show the image with detected points and lines later.
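Put together, the setup described above might look roughly like the sketch below. It assumes the ml5.js 0.x PoseNet API, with ml5.poseNet(video, callback) and a 'pose' event, plus p5's DOM helpers. The callback name modelReady is a placeholder chosen here, and the code in the repo may differ in its details.

```javascript
let webcam_output; // p5 element wrapping the webcam feed
let poseNet;       // the ml5 PoseNet model
let poses = [];    // latest detection results, read later by draw()

function setup() {
  // Box in the browser where the output is shown.
  createCanvas(640, 480);

  // Grab the webcam feed and match it to the canvas size.
  webcam_output = createCapture(VIDEO);
  webcam_output.size(width, height);

  // Create the model; modelReady (placeholder name) runs once it has loaded.
  poseNet = ml5.poseNet(webcam_output, modelReady);

  // Every time a pose is detected, keep the newest results.
  poseNet.on('pose', function (results) {
    poses = results;
  });

  // Hide the raw video element; the frames are drawn on the canvas instead.
  webcam_output.hide();
}

function modelReady() {
  // Update the <p id="status"> paragraph in index.html.
  select('#status').html('Model Loaded');
}
```

This only runs in a page that has loaded both p5.js and ml5.js, since createCanvas, createCapture, select, and ml5 are globals those libraries provide.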

All we have left to do is to show the image with all the detection results stored in poses in the browser.

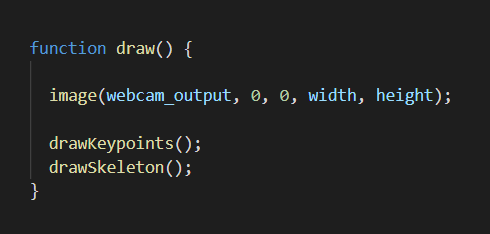

As we know, the draw() function runs in a loop forever. Inside this, we call the image() function to display our image (as we have our video image-by-image) in the canvas.

It takes five arguments:

- input image. The image we want to display.

- x position. The x-coordinate of the top-left corner of the image with respect to the canvas.

- y position. The y-coordinate of the top-left corner of the image with respect to the canvas.

- width. The width to draw the image.

- height. The height to draw the image.

We then call drawKeyPoints() and drawSkeleton() to draw the dots and lines on the current image. draw() does this in an infinite loop, hence showing a continuous output to the user, which makes it look like a video.
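As a rough sketch, the draw loop described here could be as short as the following. It assumes that webcam_output, drawKeyPoints(), and drawSkeleton() are defined elsewhere in the same p5 sketch, as the text describes.

```javascript
// Assumes webcam_output, drawKeyPoints() and drawSkeleton() are defined
// elsewhere in the sketch; image(), width and height come from p5.js.
function draw() {
  // Paint the current webcam frame so it fills the whole canvas.
  image(webcam_output, 0, 0, width, height);

  // Overlay the latest detections on top of that frame.
  drawKeyPoints();
  drawSkeleton();
}
```

Because the frame is repainted on every call, the dots and lines from the previous frame are wiped out automatically before the new ones are drawn.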

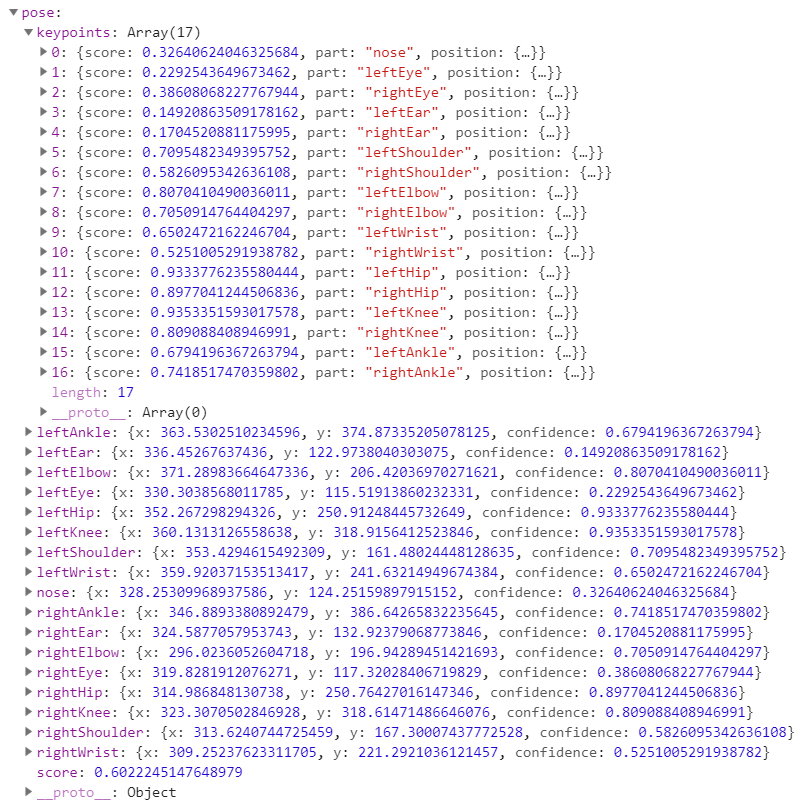

As you can see above, PoseNet returns a JavaScript object as output, consisting of many key-value pairs. Each person detected in an image gets a pose value and a skeleton value, and what is shown here is the pose value.
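For readers who can’t see the screenshot, the result looks something like the object below. This is an illustrative shape based on the ml5.js 0.x PoseNet output, with made-up numbers and only two of the 17 keypoints filled in.

```javascript
// Illustrative shape of a PoseNet result; every number here is made up.
const results = [
  {
    pose: {
      score: 0.93, // overall pose confidence, between 0 and 1
      keypoints: [
        { part: 'nose', position: { x: 301.4, y: 178.2 }, score: 0.99 },
        { part: 'leftEye', position: { x: 315.0, y: 165.7 }, score: 0.98 },
        // ...15 more keypoints in a real result
      ],
    },
    skeleton: [
      // pairs of connected keypoints, used for drawing lines
    ],
  },
];

// One entry per detected person; read the first one.
const firstPerson = results[0];
console.log(firstPerson.pose.score);
console.log(firstPerson.pose.keypoints[0].part);
```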

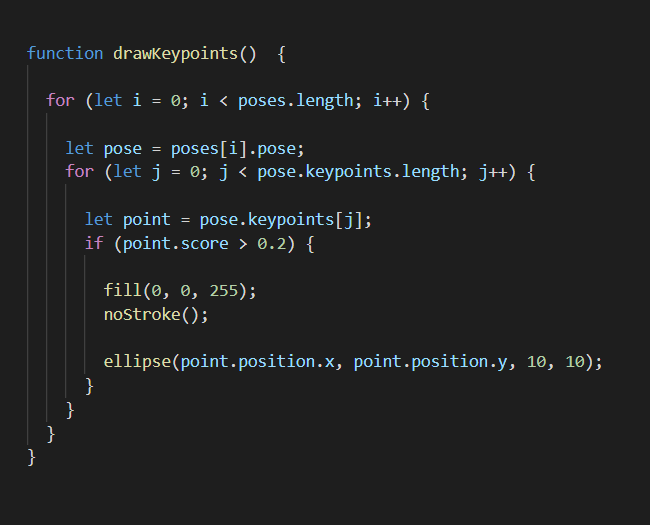

We have a function to draw detected points on the image. Remember, we saved all the results from the PoseNet output in the poses array. Here, we loop through every pose or person in an image and get its keypoints.

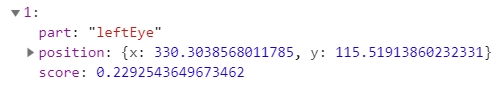

We loop through every point (each one a body part) in the keypoints array. Each point has:

- part. The name of the part detected.

- position. The x and y values of the point in the image.

- score. The confidence of the detection.

We only draw a point if its confidence score is greater than 0.2. We call fill(red, green, blue), which takes RGB intensity values ranging from 0 to 255, to decide the color of a point, and noStroke() to disable the outline that p5 draws by default.

Then, we call ellipse(x_value, y_value, width, height) to draw an ellipse at the desired position, but we keep the width and height very small, which makes it look like a dot (exactly what we wanted).
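Here is a sketch of what such a keypoint-drawing function could look like. The red color and the 10-pixel dot size are arbitrary choices made for this example, and poses is assumed to be the global array filled by the poseNet.on() callback.

```javascript
let poses = []; // filled by the poseNet.on() callback elsewhere in the sketch

function drawKeyPoints() {
  // One entry in poses per person detected in the frame.
  for (let i = 0; i < poses.length; i++) {
    const keypoints = poses[i].pose.keypoints;

    for (let j = 0; j < keypoints.length; j++) {
      const keypoint = keypoints[j];

      // Skip points the model is not reasonably sure about.
      if (keypoint.score > 0.2) {
        fill(255, 0, 0); // red dot (arbitrary color choice)
        noStroke();      // no outline around the dot
        ellipse(keypoint.position.x, keypoint.position.y, 10, 10);
      }
    }
  }
}
```

Raising the 0.2 threshold gives fewer but more reliable dots, while lowering it shows more points at the cost of some jitter.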

Similarly, as our poses variable has multiple poses in it, it also has multiple skeleton values with their own type of key-value pairs. These are handled by drawSkeleton(), which draws lines instead of points.
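A matching sketch for the skeleton is shown below. It assumes the ml5.js 0.x output, where each skeleton is an array of pairs of connected keypoints and each keypoint carries its own position. The red stroke color is again an arbitrary choice.

```javascript
let poses = []; // filled by the poseNet.on() callback elsewhere in the sketch

function drawSkeleton() {
  // One skeleton per person detected in the frame.
  for (let i = 0; i < poses.length; i++) {
    const skeleton = poses[i].skeleton;

    // Each entry is a pair of keypoints that should be joined by a line.
    for (let j = 0; j < skeleton.length; j++) {
      const partA = skeleton[j][0];
      const partB = skeleton[j][1];

      stroke(255, 0, 0); // red line (arbitrary color choice)
      line(
        partA.position.x, partA.position.y,
        partB.position.x, partB.position.y
      );
    }
  }
}
```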

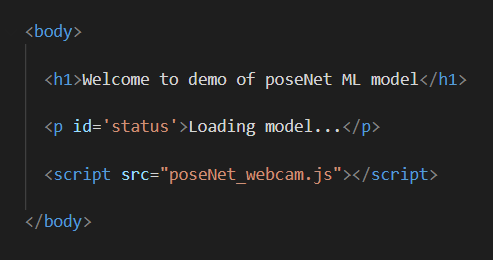

index.html

This is the main page where we display our output. We add all our libraries using script tags.

We show a cute welcome intro to the user. As model loading takes time, we show the ‘Loading model…’ message. If you remember, we change it to ‘Model Loaded’ once our model is loaded, by looking up the paragraph with the ID status.

Finally, we put our own JS code inside the body. Open the index.html file in a browser to see the output. Make sure you allow webcam access when prompted.

That’s it! You can always go to the ml5.js reference page, which has many more ready-to-use models and code snippets for various cool ML projects, dealing with a wide variety of things like text, images, and sound.

Kartik Nighania is a machine learning enthusiast who loves computer vision more than NLP. He previously worked in the field of robotics, especially drones, which haunt him to this day. In love with Kaggle.
