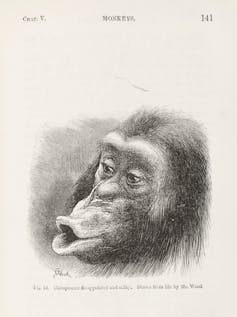

Sarah, “the world’s smartest chimp,” died in July 2019, just before her 60th birthday. For the majority of her life, she served as a research subject, providing scientists with a window into the thoughts of Homo sapiens’ nearest living relative.

Sarah’s death provides an opportunity to reflect on a foundational question: can we really know what non-human animals are thinking? Drawing on my background as a philosopher, I argue that the answer is no. There are principled limitations to our ability to understand animal thought.

Animal thought

There is little doubt that animals think. Their behavior is too sophisticated to suppose otherwise. But it is awfully difficult to say precisely what animals think. Our human language seems unsuited to express their thoughts.

Sarah exemplified this puzzle. In one famous study, she reliably chose the correct item to complete a sequence of actions. When shown a person struggling to reach some bananas, she chose a stick rather than a key. When shown a person stuck in a cage, she chose the key over the stick.

This led the study’s researchers to conclude that Sarah had a “theory of mind,” complete with the concepts intention, belief, and knowledge. But other researchers immediately objected. They doubted that our human concepts accurately captured Sarah’s perspective. Although hundreds of additional studies have been conducted in the intervening decades, disagreement still reigns about how to properly characterize chimpanzees’ mental concepts.

The difficulty characterizing animals’ thoughts does not stem from their inability to use language. After Sarah was taught a rudimentary language, the puzzle of what she was thinking simply transformed into the puzzle of what her words meant.

Words and meanings

As it turns out, the problem of assigning meanings to words was the guiding obsession of philosophy in the 20th century. Among others, it occupied W.V.O. Quine, arguably the most influential philosopher of that century’s second half.

A Harvard professor, Quine is famous for imagining what it would take to translate a foreign language — a project he called radical translation. Ultimately, Quine concluded that there would always be multiple equally good translations. As a result, we could never precisely characterize the meaning of the language’s words. But Quine also noted that radical translation was constrained by the structure of language.

Quine imagined a foreign language completely unrelated to any human language, but here, I’ll use German for illustration. Suppose a speaker of the foreign language utters the sentence: “Schnee ist weiss.” Her friends smile and nod, accepting the sentence as true. Unfortunately, that doesn’t tell you very much about what the sentence means. There are lots of truths, and the sentence could refer to any one of them.

But suppose there are other sentences that the foreign speakers accept (“Schnee ist kalt,” “Milch ist weiss,” etc.) and reject (“Schnee ist nicht weiss,” “Schnee ist rot,” etc.), sometimes depending on the circumstances (for example, they accept “Schnee!” only when snow is present). Because you now have more evidence and the same words pop up in different sentences, your hypotheses will be more tightly constrained. You can make an educated guess about what “Schnee ist weiss” means.

This suggests a general lesson: insofar as we can translate the sentences of one language into the sentences of another, that is largely because we can translate the words of one language into the words of another.

But now imagine a language with a structure fundamentally unlike that of any human language. How would we translate it? If translating sentences requires translating words, but its “words” don’t map onto our words, we wouldn’t be able to map its sentences onto our own. We wouldn’t know what its sentences mean.

Unknown grammars

The thoughts of animals are like the sentences of an unfamiliar language. They are composed of parts in a way that is completely unlike the way that our language is composed of words. As a result, there are no elements in the thoughts of animals that match our words and so there is no precise way to translate their thoughts into our sentences.

An analogy can make this argument more concrete.

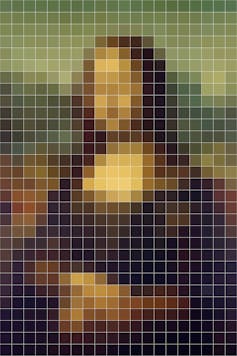

What is the correct translation of the Mona Lisa? If your response is that this is an ill-posed question because the Mona Lisa is a painting and paintings can’t be translated into sentences, well… that’s exactly my point. Paintings are composed of colors on a canvas, not of words. So if Quine is right that any halfway decent translation requires matching words to words, we shouldn’t expect paintings to translate into sentences.

But does the Mona Lisa really resist translation? We might try a coarse description such as, “The painting depicts a woman, Lisa del Giocondo, smirking slyly.” The problem is that there are ever so many ways to smirk slyly, and the Mona Lisa has just one of them. To capture her smile, we’ll need more detail.

So, we might try breaking the painting down into thousands of colored pixels and creating a micro description such as “red at location 1; blue at location 2; ….” But that approach confuses instructions for reproduction with a translation.

By comparison, I could provide instructions for reproducing the content on the front page of today’s New York Times: “First press the T key, then the H key, then the E key, ….” But these instructions would say something very different from the content of the page. They would be about what buttons should be pressed, not about income inequality, Trump’s latest tweets or how to secure your preschooler’s admission into one of Manhattan’s elite kindergartens. Likewise, the Mona Lisa depicts a smiling woman, not a collection of colored pixels. So the micro description doesn’t yield a translation.

Nature of thought

My suggestion, then, is that trying to characterize animal thought is like trying to describe the Mona Lisa. Approximations are possible, but precision is not.

The analogy to the Mona Lisa shouldn’t be taken literally. The idea is not that animals “think in pictures,” but simply that they do not think in human-like sentences. After all, even those animals, such as Sarah, who manage to laboriously learn rudimentary languages never grasp the rich recursive syntax that three-year-old humans effortlessly master.

Despite having considerable evidence that Sarah and other animals think, we are in the awkward position of being unable to say precisely what they think. Their thoughts are structured too differently from our language.

This article is republished from The Conversation by Jacob Beck, Associate Professor, Department of Philosophy, York University, Canada under a Creative Commons license. Read the original article.
