If a picture’s worth a thousand words, then a video’s worth a thousand pictures. For as long as videos have existed, we’ve trusted them as a reliable form of evidence. Nothing is as impactful as seeing something happen on video (e.g. the Kennedy assassination) or seeing someone say something on video, especially if it’s recorded in an inconspicuous manner (e.g. Mitt Romney’s damning “47 percent” video).

However, that foundation of trust is slowly eroding as a new generation of AI-doctored videos finds its way into the mainstream. Popularly known as “deepfakes,” these synthetic videos are created using an application called FakeApp, which uses artificial intelligence to swap the faces in a video with those of another person.

Since making its first appearance earlier this month, FakeApp has gathered a large community and hundreds of thousands of downloads, and a considerable number of its users were interested in using the application for questionable purposes.

Creating fake video is nothing new, but it previously required access to special hardware, experts and plenty of money. Now you can do it from the comfort of your home, with a workstation that has a decent graphics card and a good amount of RAM.

The results of deepfakes are still crude. Most of them have obvious artifacts that give away their true nature, and even the more convincing ones can be spotted if you look closely. But it’s only a matter of time before the technology becomes good enough to fool even trained experts. Then its destructive power will take on a totally new dimension.

The capabilities and limits of deepfakes

Under the hood, deepfakes are not magic; they’re pure mathematics. FakeApp is a deep learning application, which means it relies on neural networks to perform its functions. Neural networks are software structures loosely modeled on the human brain.

When you give a neural network many samples of a specific type of data, say pictures of a person, it learns to perform tasks such as detecting that person’s face in photos or, in the case of deepfakes, replacing someone else’s face with it.

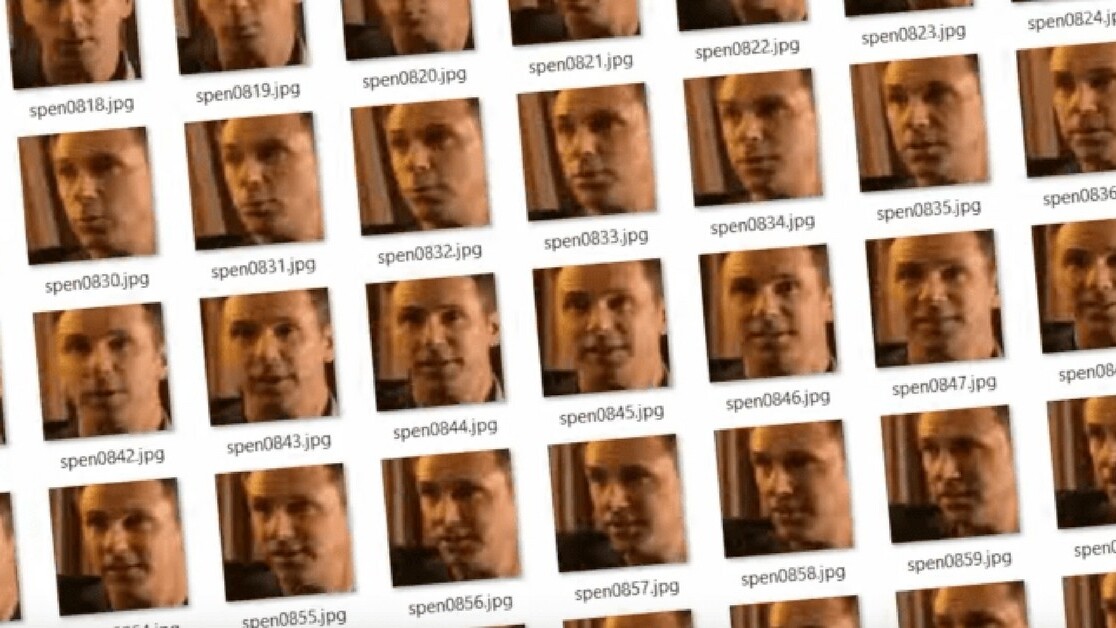

However, deepfakes suffer from the same problem as all deep learning applications: they rely too much on data. To create a good fake video, deepfakers need many pictures of the faces of both the person in the original video (which is not a problem if they recorded it themselves) and the person whose face will be placed in the final production. And those pictures need to show the face from various angles and at decent quality.

This means it will be hard to make a deepfake of a random private individual, because the deepfaker would somehow have to gather thousands of pictures of the target. But celebrities, politicians, athletes and others who regularly appear on television or post many pictures and videos online are ripe for deepfaking.

The future of AI forgery

Shortly after FakeApp was published, a wave of fake porn videos featuring celebrities, movie stars and politicians surfaced, made possible by the abundance of photos and videos of them available online.

The backlash those videos have caused in the media and among experts and lawmakers gives us a preview of the disruption and chaos that AI-generated forgery could unleash in the not-too-distant future. But the real threat of deepfakes and other AI-doctored media has yet to emerge.

When you put deepfakes next to other AI-powered tools that can synthesize human voices (Lyrebird and Voicery), handwriting and conversation style, the negative impact can be immense (so can the positive impact, but that’s a topic for another post).

A post on the Lawfare blog paints a picture of what the evolution of deepfakes could entail. For instance, a fake video could show an authority figure or official doing something with massive social and political repercussions, such as a soldier killing civilians or a politician taking a bribe or uttering a racist comment. As a machine learning expert told me very recently, “Once you see something, unseeing it is very hard.”

We’ve already seen how fake news affected the 2016 U.S. presidential election. Wait until bad actors can back their claims with deepfake videos. AI synthesis technologies could also usher in a new era of fraud, forgery, fake news and social engineering.

This means any video you see might be a deepfake; any email you read or text conversation you engage in might have been produced by natural language generation algorithms that have meticulously studied and imitated the sender’s writing style; any voice message you get could have been synthesized by an AI algorithm; and any of them could be designed to lure you into a trap.

In other words, you’ll have to distrust everything you see, read or hear until you can prove its authenticity.

How to counter the effects of AI forgery

So how can you prove the authenticity of videos and ensure the safe use of AI synthesis tools? Some of the experts I spoke to suggest that raising awareness will be an important first step. Educating people about the capabilities of AI algorithms will help prevent malicious uses of applications like FakeApp from having a widespread impact, at least in the short term.

Legal measures are also important. Currently, there are no serious safeguards to protect people against deepfakes or forged voice recordings. Imposing heavy penalties on the practice will raise the cost of creating and publishing (or hosting) fake material and serve as a deterrent against malicious uses of the technology.

But these measures will only be effective as long as humans can tell the difference between fake and real media. Once the technology matures, it will be nearly impossible to prove that a specific video or audio recording was created by AI algorithms.

Meanwhile, someone might also take advantage of the doubts and uncertainty surrounding AI forgery to claim that a real video that portrays them committing a crime was the work of artificial intelligence. That claim too will be hard to debunk.

The technology to deal with AI forgery

We also need technological measures to back up our ethical and legal safeguards against deepfakes and other forms of AI-based forgery. Ironically, the best way to detect AI-doctored media is to use artificial intelligence. Just as deep learning algorithms can learn to stitch a person’s face onto another person’s body in a video, they can be trained to detect the telltale signs that AI was used to manipulate a photo, video or sound file.

At some point, however, the faking might become so real that even AI won’t be able to detect it. For that reason, we’ll need to establish measures to register and verify the authenticity of true media and documents.

The Lawfare post suggests a service that would track people’s movements and activities to build a directory of evidence that could be used to verify the authenticity of material published about them.

So, for instance, if someone posts a video that shows you at a certain location at a certain time, doing something questionable, you could have that third-party service check the claim by comparing the video’s location and time against your recorded data, and then either certify or reject it.
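To make that comparison concrete, here is a minimal Python sketch of how such a hypothetical service might check a claim against a person’s logged location history. The `LocationRecord` format, the `verify_claim` function and the 1 km / 10 minute tolerances are all illustrative assumptions, not part of the Lawfare proposal; a real service would also have to deal with GPS error, missing data and spoofed logs.

```python
import math
from dataclasses import dataclass

@dataclass
class LocationRecord:
    timestamp: float  # Unix seconds
    lat: float        # degrees
    lon: float        # degrees

def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points, in kilometers."""
    r = 6371.0  # mean Earth radius in km
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * r * math.asin(math.sqrt(a))

def verify_claim(claim, log, max_km=1.0, max_seconds=600):
    """Hypothetical check: compare a video's claimed time/place with the log.

    Returns 'certify' if the logged position nearest in time to the claim is
    within max_km of the claimed spot, 'reject' if it is farther away, and
    'inconclusive' if no record falls within max_seconds of the claim.
    """
    nearby = [r for r in log if abs(r.timestamp - claim.timestamp) <= max_seconds]
    if not nearby:
        return "inconclusive"
    closest = min(nearby, key=lambda r: abs(r.timestamp - claim.timestamp))
    dist = haversine_km(claim.lat, claim.lon, closest.lat, closest.lon)
    return "certify" if dist <= max_km else "reject"
```

For example, if your log places you in Manhattan at a given moment, a video claiming to show you in Los Angeles a minute later would come back as `"reject"`, while a claim with no logged data near that time stays `"inconclusive"` rather than being certified by default.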

However, as the same blog post points out, recording so much of your data might entail greater security and privacy risks, especially if it’s all collected and stored by a single company. It could lead to wholesale information theft, like last year’s Equifax data breach, or to a repeat of Facebook’s Cambridge Analytica scandal. And bad actors could still game it, either to cover up their crimes or to fabricate evidence against others.

A possible fix would be to use the blockchain. A blockchain is a distributed ledger that lets you store information online without the need for centralized servers. On a blockchain, every record is replicated across multiple computers and tied to a pair of public and private cryptographic keys. The holder of the private key, not the computers storing it, is the true owner of the data.

Moreover, blockchains are resilient against a host of security threats that centralized data stores are vulnerable to. Distributed ledgers are not yet good at storing large amounts of data, but they are well suited to storing hashes and digital signatures.
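Why hashes? A cryptographic hash such as SHA-256 is a short, fixed-size fingerprint of a file: change a single byte of a video and its digest changes completely, so a ledger only needs to hold 32 bytes per file rather than the file itself. The Python sketch below illustrates the idea with a toy, single-machine, append-only chain in which each entry also commits to the hash of the entry before it. It shows hash chaining only, not a real blockchain (there is no replication, consensus or networking), and the `ToyLedger` class and its fields are hypothetical.

```python
import hashlib
import json

def file_fingerprint(data: bytes) -> str:
    """SHA-256 digest of a media file's bytes, as a hex string."""
    return hashlib.sha256(data).hexdigest()

class ToyLedger:
    """Append-only list of entries; each entry commits to the previous one."""

    def __init__(self):
        self.entries = []

    def _entry_hash(self, body: dict) -> str:
        # Canonical JSON (sorted keys) so the same body always hashes the same way
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

    def append(self, media_hash: str, owner: str) -> dict:
        prev = self.entries[-1]["hash"] if self.entries else "0" * 64
        body = {"index": len(self.entries), "media_hash": media_hash,
                "owner": owner, "prev": prev}
        entry = dict(body, hash=self._entry_hash(body))
        self.entries.append(entry)
        return entry

    def is_valid(self) -> bool:
        """Recompute every link; editing any earlier entry breaks the chain."""
        prev = "0" * 64
        for e in self.entries:
            body = {k: v for k, v in e.items() if k != "hash"}
            if e["prev"] != prev or e["hash"] != self._entry_hash(body):
                return False
            prev = e["hash"]
        return True

    def is_registered(self, data: bytes) -> bool:
        """True if this exact file's fingerprint was recorded."""
        fp = file_fingerprint(data)
        return any(e["media_hash"] == fp for e in self.entries)
```

Because only the fingerprint is stored, anyone holding the original file can later check it against the ledger, while a doctored copy (even one differing by a single byte) will not match.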

For instance, people could use the blockchain to digitally sign and confirm the authenticity of a video or audio file that relates to them. The more people add their digital signatures to a video, the more likely it is to be considered authentic. This is not a perfect solution: it will need additional measures to weigh the credibility of the people who vote on a document.
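One way to picture that weighting is sketched below. To stay within Python’s standard library, it swaps in Lamport one-time signatures (a real, hash-based public-key signature scheme) for the elliptic-curve signatures production blockchains typically use. Each endorser signs the video’s SHA-256 digest, and the score is the sum of a per-signer weight for every signature that verifies. The signer names, the weights and the `endorsement_score` helper are hypothetical; how to assign such weights fairly is exactly the open problem the paragraph describes.

```python
import hashlib
import secrets

def H(b: bytes) -> bytes:
    return hashlib.sha256(b).digest()

def lamport_keygen():
    """Private key: 256 pairs of random 32-byte secrets. Public key: their hashes."""
    sk = [(secrets.token_bytes(32), secrets.token_bytes(32)) for _ in range(256)]
    pk = [(H(a), H(b)) for a, b in sk]
    return sk, pk

def _bits(digest: bytes):
    # Yield the 256 bits of a SHA-256 digest, most significant bit first
    for byte in digest:
        for i in range(7, -1, -1):
            yield (byte >> i) & 1

def lamport_sign(sk, message: bytes):
    """Reveal one secret per bit of the message digest. Use each key only once."""
    return [sk[i][bit] for i, bit in enumerate(_bits(H(message)))]

def lamport_verify(pk, message: bytes, sig) -> bool:
    """Each revealed secret must hash to the public-key entry its bit selects."""
    if len(sig) != 256:
        return False
    return all(H(s) == pk[i][bit]
               for i, (s, bit) in enumerate(zip(sig, _bits(H(message)))))

def endorsement_score(message, signatures, public_keys, weights):
    """Sum the (hypothetical) weights of signers whose signature verifies.

    signatures, public_keys and weights are dicts keyed by signer name.
    """
    return sum(weights.get(name, 0.0)
               for name, sig in signatures.items()
               if name in public_keys
               and lamport_verify(public_keys[name], message, sig))
```

A platform could then treat a file whose score clears some chosen threshold as likely authentic. Note that signatures made over the original footage contribute nothing to the score of an edited copy, because they no longer verify against its digest.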

For the moment, what’s clear is that AI forgery may soon become a serious threat. We need a proper, multi-pronged approach that prevents malicious uses of AI while still allowing innovation to proceed. Until then, IRL is the only space you can truly trust.

This story is republished from TechTalks, the blog that explores how technology is solving problems… and creating new ones.
