Do you think you can tell a piece of music composed by artificial intelligence (AI) from one created by a human composer? Before you read any further, let’s find out. The following audio consists of two fragments, one written by AI, the other by a human.

So, which one’s which? Reveal the answer by following this link. If you didn’t get it right the first time, no worries—we’ll have a couple more mini-quizzes like this below.

The AI that wrote the fragment above was built by Jukedeck, a UK-based startup working on machine-made music that won the competition at TechCrunch Disrupt London in 2015. At the Slush conference in Helsinki last year, we caught up with the startup’s founder, Ed Newton-Rex, and learned what’s happening with creative AI.

History lesson

A composer by trade, Newton-Rex has been working on Jukedeck since 2014, growing the team from one to 20 people and raising some $3.1 million (£2.5 million) in venture funding.

“I think this all started with me asking myself the question at university: why can’t computers write music yet?” he told TNW. “I thought they should be able to, and more importantly, I was wondering — when they could — what would it mean? What would be the amazing applications that would be possible? I ended up visiting my girlfriend at the time in Harvard, where she was studying computer science among other things, and I went to a kind of introductory lecture and [realized] that it might actually be doable.

“So I started building a prototype for what was at the time a rudimentary algorithmic composition system. It was hard and it took me a while, but eventually I built a good enough prototype that I got Cambridge University’s investment arm, Cambridge Enterprise, to invest in it.”

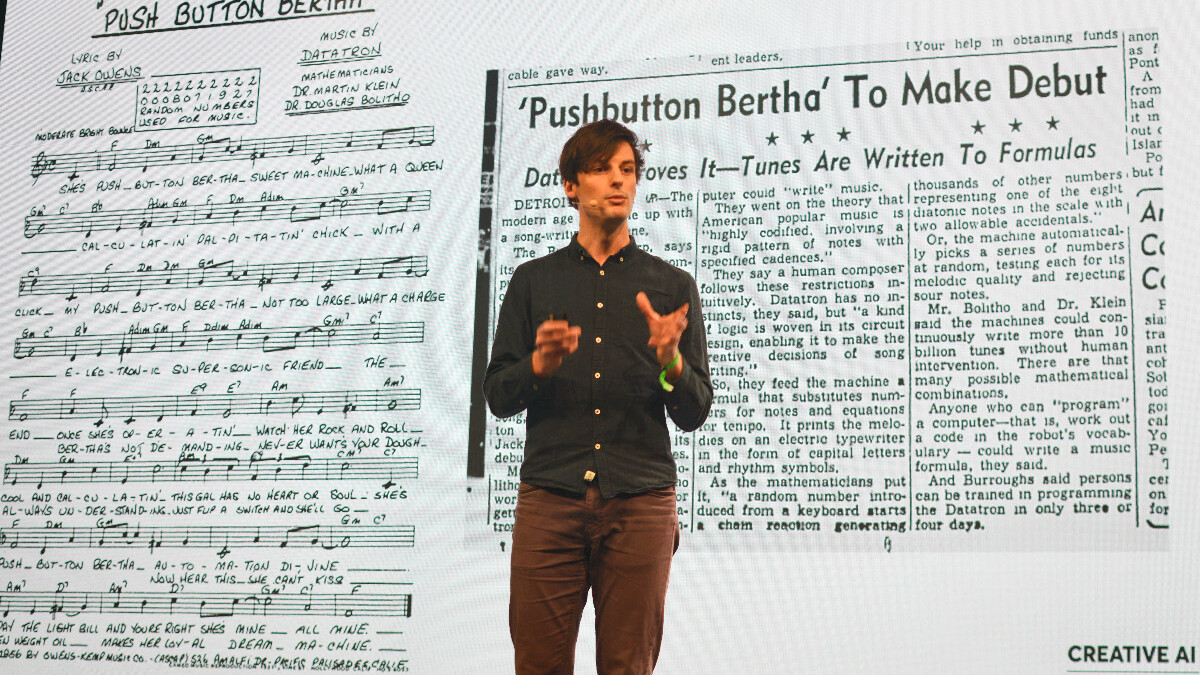

Jukedeck uses neural networks to compose music, but that is not the only approach computer scientists have tried over the years, as Newton-Rex explained in his talk at Slush. The first notable experiments began in the 1950s, although the first person to forecast this development was none other than Ada Lovelace. In 1843, she wrote that “[t]he [Babbage] Engine might compose elaborate and scientific pieces of music of any degree of complexity or extent.”

More than a century later, Lejaren Hiller and Leonard Isaacson made an attempt at machine-made music: their rule-based AI created the Illiac Suite. The AI used standards of music theory to compose a new piece, which sounded quite good for its time.

Another approach that emerged in the 20th century involved using grammars. Simply speaking, AI of this kind attempts to understand and write music through its hierarchy. Among the most notable people using this approach is David Cope, whose patented idea of “recombinancy” led to the creation of AI that could analyze existing pieces of music and write new ones based on the results. Here’s what its imitation of Vivaldi sounded like:

Markov chains have also been repurposed for music composition by enthusiasts, since their main idea (a system whose next state depends only on its current state) sounds quite similar to how music works. A good example of this is the Continuator, an algorithm written by François Pachet that can carry on where a human musician left off.
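To make the Markov idea concrete, here is a minimal sketch in Python. It is not how the Continuator works; it is a toy first-order chain, and the training melody, the function names `build_transitions` and `generate`, and the fixed random seed are all assumptions made up for illustration. The program records which notes followed each note in a short melody, then walks those transitions to produce a new one.

```python
import random
from collections import defaultdict


def build_transitions(melody):
    """Map each note to the list of notes that followed it in the melody."""
    transitions = defaultdict(list)
    for current, following in zip(melody, melody[1:]):
        transitions[current].append(following)
    return dict(transitions)


def generate(transitions, start, length, rng):
    """Walk the chain: each new note depends only on the note before it."""
    notes = [start]
    while len(notes) < length:
        options = transitions.get(notes[-1])
        if not options:  # dead end: this note never had a successor
            break
        notes.append(rng.choice(options))
    return notes


# Toy training melody, invented for this example.
melody = ["C", "D", "E", "C", "E", "G", "E", "D", "C", "D", "E", "G", "C"]
transitions = build_transitions(melody)

rng = random.Random(42)  # fixed seed so the run is repeatable
new_melody = generate(transitions, "C", 8, rng)
print(" ".join(new_melody))
```

Because repeated successors stay in each list, a note that often followed another in the source melody is proportionally more likely to be picked again. That is also the limitation: the chain can only ever recombine transitions it has already heard.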

Evolutionary algorithms are another method that has been used for automatic music composition. DarwinTunes, a project developed by a team of researchers from the UK, works this way: anyone can participate by listening to different “candidate” music fragments and choosing the ones they like. Afterwards, the surviving candidates get evolved, i.e. reproduced with variations.
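The select-and-vary loop can be sketched in a few lines of Python. This is an illustration under loud assumptions, not DarwinTunes’ actual code: the human listeners are replaced by a hypothetical `listener_score` function that simply prefers small steps between notes, and the population size, mutation rate and number of generations are arbitrary.

```python
import random

SCALE = [60, 62, 64, 65, 67, 69, 71, 72]  # C major as MIDI note numbers


def random_melody(rng, length=8):
    """Create a random candidate melody from the scale."""
    return [rng.choice(SCALE) for _ in range(length)]


def listener_score(melody):
    """Stand-in for a human vote (assumption): smaller leaps score higher."""
    return -sum(abs(a - b) for a, b in zip(melody, melody[1:]))


def mutate(melody, rng, rate=0.2):
    """Copy a melody, replacing each note with probability `rate`."""
    return [rng.choice(SCALE) if rng.random() < rate else n for n in melody]


def evolve(rng, population_size=20, survivors=5, generations=30):
    population = [random_melody(rng) for _ in range(population_size)]
    for _ in range(generations):
        # Selection: keep the candidates the "listener" likes best.
        population.sort(key=listener_score, reverse=True)
        parents = population[:survivors]
        # Reproduction with variation: refill the pool with mutated copies.
        children = [mutate(rng.choice(parents), rng)
                    for _ in range(population_size - survivors)]
        population = parents + children
    return max(population, key=listener_score)


best = evolve(random.Random(0))
print(best, listener_score(best))
```

Note that nothing here is learned from existing music: the only signal is which candidates survive each round, which is why a real system of this kind needs people in the loop.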

Here’s one of the monthly report tracks that DarwinTunes posts to its SoundCloud account:

Although most of these methods can produce fairly good music, each comes with constraints. The rule-based approach depends too much on music theory without ever truly grasping what music is, while the others always need a human to choose the best candidates or to feed ready-made music to the algorithm.

Enter neural networks

According to Newton-Rex, the main technological challenge for creative AI, and particularly one that can write music, is that there’s simply no right — or wrong — way of doing it. If we take image recognition done by neural networks, there’s always a sure way to train the algorithm by evaluating the results, i.e. telling if it was right or wrong.

With music, it doesn’t work half as well, since there’s no universal definition of what good music is. The musicians working at Jukedeck have given themselves the task of training the algorithm’s taste and composition skills.

“We listen to our music, evaluate the output and refine the networks accordingly,” Newton-Rex said. “We do this in two ways: by ear (we can often hear it getting better, as our team are all musicians!) and by looking at download rates on our site (i.e. ‘are more people downloading the music after this change?’). These methods work because algorithmic composition is still at an early enough stage in its development that big improvements can be made this way.

“However, it’s important to note that the system itself is very different to how evolutionary methods work. With evolutionary methods, the user selects the best output and the system reproduces this output with variation in a continuous loop — there is no training data. With neural networks, the system learns from training data and attempts to reproduce this.”

Meanwhile, it’s time for another music mini-quiz. Listen to the two audio fragments below:

Which one is written by an AI composition engine? Follow this link to get the answer.

A tool or a threat

Although it’s been over 50 years since the experiments with algorithmic music composition began, it’s still considered to be the very early days of this technology. Its future appears to be quite bright, though — at least if you listen to people involved in making it work.

“I’d be very surprised if in 10-15 years’ time AI isn’t at the core of most, if not all, musical experiences,” Newton-Rex said.

This future, however, would mean that a number of composers — particularly those selling their music on stock marketplaces — would have to find a new job.

“I think it would be misleading to say that AI won’t take any jobs, but I think that, frankly, it’s going to in every industry,” Newton-Rex said. “I’d be surprised if there is any industry in which AI does not take some portion of jobs.”

Composers themselves aren’t too concerned about it, though. Dmitry Lifshitz, a violinist and sound engineer who’s now a best-selling composer at the popular AudioJungle stock music marketplace, said that AI in general and Jukedeck in particular are still years away from the level you can get from a human composer.

“The electronic tracks sound more or less okay,” he said, playing different music fragments created by Jukedeck. “In rock pieces, the guitars sound quite bad. Everything is a bit synthetic, but for someone who doesn’t care too much about what music is playing in the background, it’d be more than enough. Of course, for example, advertising agencies won’t use this kind of music at this point, but for YouTube vloggers it should be fine.”

Lifshitz also agrees that when AI eventually becomes good enough to compose background soundtracks for video blogs or commercials, stock composers will probably have to find another income source. He didn’t sound bitter about the prospect, and talked about how he’s already embracing AI by working on an app that can generate music ideas for composers plagued by writer’s block.

Ready for another music quiz? Here it comes:

Follow this link to check if you answered correctly, and share your final score in the comments section!

Another popular stock music composer, Olexandr Ignatov, called AI audio tracks a “fast food-like solution” for those who want to have a piece of music quickly and cheaply.

“Only educated people can create a piece of art with a goal to communicate something,” he wrote to TNW. “I can’t imagine that AI would ever be able to write a soundtrack for a movie like those we’ve all heard, that send goosebumps down one’s spine and, in combination with video, create an unparalleled experience. This is something a machine can’t do; only a living creature is able to transmit and direct communication, and music is one of the ways of doing this.”

Newton-Rex put it differently and maintained that creative AI could make music composition more accessible for a wider audience.

“At the moment, music creation is something that’s kind of the reserve of the elites, in a way,” he said. “You need a very long and expensive education to get good at music. Musical creation is not something that’s open to most people in a realistic way. AI will help democratize that, it will let more people make music. It means there’s more music out there. It will mean music’s personalized.”

This, however, is exactly what some other composers might be afraid of.

“Tools that allow people to generate music are, on one hand, powerful aids for composers,” said Vladimir Ponikarovsky, a composer who used to work for stock music marketplaces and has since switched to the game development industry. “On the other hand, used by incompetent authors on a large scale, it could lead to the creation of lots of crap and decrease of average quality of [stock] music.”

Newton-Rex largely dismisses the fears about AI-written music having a negative impact on music in general, likening it to the “uproar when electronic instruments came in” and “this whole ‘don’t use synthesizers’ movement.” At the end of the day, he said, entirely new genres of music have been created thanks to technological innovation in this sphere — and that’s what can happen in the future thanks to AI.

Show me your money

For Jukedeck, the immediate future consists of two challenges. The first one has to do with the technology and research: Newton-Rex and his team are working on making the AI capable not only of composing the music, but also of actually playing it and creating the sound from scratch. At Slush 2016, he presented an early version of what’s to come:

The other challenge is about making money. Currently Jukedeck is selling licenses and copyrights for tracks its algorithm has created, charging from $21.99 for a non-exclusive license to $199 for a full copyright. Individuals and small businesses can get a non-exclusive license for free.

There’s also currently no system in place to watermark music created by Jukedeck, so the whole monetization is based on the clients’ honesty. However, this might change soon.

“Our first foray has been into [music for] videos,” Newton-Rex said. “Stage one was YouTubers, but we haven’t really been monetizing that. That’s been more of a ‘Let’s prove that our music is good enough for people to use.’ We’ve now had over half a million tracks made on our site. We haven’t really announced [the next step] yet, but we’re basically going to be looking at what other areas of the video market we can monetize. And that’s what we’ll try to do in the next couple of months.”

Both the music industry and major technology players have also started to take note of the new niche of AI-powered music composition. For example, Sony has an AI composition tool of its own called Flow Machines, which was covered widely in the media last fall when it was used to create a song called Daddy’s Car, mimicking, quite successfully, the style of The Beatles.

For Newton-Rex and Jukedeck, the emergence of new players with money and resources means that the competition is about to get significantly tighter. For the listener, though, it might be a sign of some entirely new music experiences getting closer than ever before.
