In recent years, debate has grown over the role that social media algorithms play in spreading conspiracy theories and extreme political content online. YouTube’s recommender algorithm has come under particularly severe scrutiny, with a number of exposés detailing how it can lead viewers down a radicalization rabbit hole.

While YouTube has certainly extended the reach of conspiracy theorists, it’s difficult to objectively assess the role of algorithms in these radicalization processes. My own research, however, has observed how certain radical communities that congregate at the fringes of the web have managed to essentially manufacture conspiracy theories, which have in turn trended on social media.

In 2019, YouTube dramatically cleaned up its platform after coming under pressure from journalists. It cut off lucrative ad revenue and deleted entire channels, most notoriously the Infowars channel of the US talk-radio host Alex Jones.

While a recent research paper on this topic noted a corresponding overall decrease in conspiracy theory videos on YouTube, it also observed that the platform continued to recommend conspiratorial videos to viewers who had previously consumed such material. The findings indicate that plenty of potentially objectionable content remains on YouTube. However, they don’t necessarily support the argument that viewers are guided by algorithms down rabbit holes of ever-more conspiratorial content.

By contrast, another recent study of YouTube’s recommender algorithm found that conspiracy channels seemed to gain “zero traffic from recommendations.” Although this study’s methodology has been debated, the fact remains that an accurate understanding of how these social media algorithms work is impossible from the outside. Their inner workings are a corporate secret known only to a few, and possibly to no humans at all, because the underlying mechanisms are so complex.

Not just cultural dopes

The presumption that audiences are passive recipients of media messages (“cultural dopes” easily subject to subliminal manipulation) has a long popular history in the field of media and communications studies. It’s an argument that has often surfaced in conservative reactions to heavy metal music and video game violence.

But by focusing on audiences as active participants rather than passive recipients we arguably gain greater insights into the complex media ecosystem within which conspiracy theories develop and propagate online. Often these move from the subcultural fringes of the deep web to a more mainstream audience.

Conspiracy theories are an increasingly important method of indoctrination and extremist radicalization. At the same time, their adversarial logic also maps onto a populist style of political rhetoric that pits the general will of the people against a corrupt and aging establishment elite.

A much more extreme version of this dynamic is also characteristic of right-wing anger against the perceived dominance of a “globalist liberal elite.” Such anger galvanized parts of the trolling subculture associated with certain forums, message boards and microblogging social networks in support of the presidential candidacy of Donald Trump.

A common rhetorical technique on the far-right political discussion forum of the anonymous message board 4chan has been to lump together all manifestations of this liberal, globalist elite into a single nebulous “other.” Whether a perfidious individual, a shadowy organization or a suspect way of thinking, this conspiracy is imagined as something that undermines the interests of the ultra-nationalist community. Those interests, in turn, are taken to coincide with those of Trump and of the white race in general.

This far-right online community has an established record of propagating hatred, and it has also produced two bizarre and remarkably successful pro-Trump conspiracy theories: Pizzagate and QAnon.

Pizzagate

Unlike the black boxes of corporate social media algorithms, 4chan datasets are easily captured and analyzed, which has allowed us to study these conspiracy theories and identify the processes that brought them about. In both cases, the conspiracy theories can be understood as the product of collective labor by amateur researchers congregating in these fringe communities, who built up each theory through a process of referencing and citation.

Pizzagate was a bizarre theory connecting the presidential campaign of Hillary Clinton to a child sex ring supposedly run out of a pizza parlor in Washington DC. It developed on 4chan in the course of a single day, shortly before the November 2016 US election. What made Pizzagate new and unusual was how it seemed to emerge from the fringes of the web, at a safe distance from Trump’s own campaign.

Algorithms surely did play a part in spreading #Pizzagate. But more crucial to legitimizing it was the way elements of the story filtered through popular social media channels on Twitter and YouTube, including the Infowars channel of Alex Jones.

QAnon

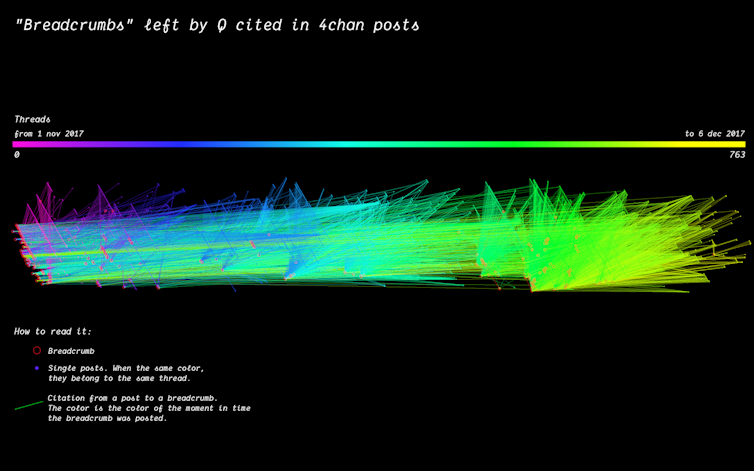

A year later, at the outset of Robert Mueller’s investigation into alleged Russian collusion with the Trump campaign, a new conspiracy theory once again emerged from 4chan. It reworked some elements of the Pizzagate narrative and combined them with “deep state” conspiracy theories. What would in time become known simply as QAnon initially grew from a series of 4chan posts by a supposed government official with “Q level” security clearance.

Often referred to by readers as “breadcrumbs,” these posts tended simply to ask open-ended questions, such as “who controls the narrative?”, “what is a map?” and “why is this relevant?” Like medieval scholars interpreting metaphysical texts, readers have constructed elaborate illuminated manuscripts and narrative compilations. One of these was, at the time of writing, an Amazon #1 bestseller in the category of “censorship.”

The lesson here is that, in focusing on the role of algorithms in amplifying the reach of conspiracy theories, we should be careful not to fall back on a patronizing framework that imagines people as passive relays rather than as active audiences engaged in their own kind of research, research that propagates radically alternative interpretations of events.

The theory that social media algorithms lure people into conspiracy theories is difficult to prove definitively. But what’s clear is that a conspiratorial subculture with roots extending into the deep web now increasingly appears just below the surface of average people’s seemingly “normal” media consumption. In the end, the real problem is less one of manipulation by algorithms than of political polarization.

This article is republished from The Conversation by Marc Tuters, Department of Media & Culture, Faculty of Humanities, University of Amsterdam under a Creative Commons license. Read the original article.