Creating machines that have the general problem-solving capabilities of human brains has been the holy grail of artificial intelligence scientists for decades. And despite tremendous advances in various fields of computer science, artificial general intelligence (AGI) still eludes researchers.

Our current AI methods either require a huge amount of data or a very large number of hand-coded rules, and they’re only suitable for very narrow domains. AGI, on the other hand, should be able to perform multiple tasks with little data and few specific instructions.

While approaches to creating AGI have shifted and evolved over the decades, one thing has remained constant: The human brain is proof that general intelligence does exist. The brain can solve problems in a flexible and data-efficient way.

And if we can discover how the human brain parses information and solves problems, we might have a blueprint for what could later become general AI.

Studying the mechanisms of the brain is the focus of neuroscience, a field that has become increasingly entwined with artificial intelligence in the past decades. Collaboration between neuroscientists and computer scientists has led to tremendous advances in AI and can be pivotal to achieving AGI.

In a paper published in the peer-reviewed scientific journal Frontiers in Neuroscience, scientists at Vicarious, a San Francisco–based AI company, provide insights and a framework on how the human brain extracts and processes information from the world, and how this process differs from current AI technologies.

While not the first work that explores the synergies between neuroscience and AI, the paper provides an interesting perspective on organic intelligence.

Led by AI and neuroscience researcher Dileep George, the Vicarious scientists draw lessons from CAPTCHA tests to present clues about the brain’s information-processing mechanisms.

How does the mind develop common sense?

“Efficient learning and effective generalization come from inductive biases, and building Artificial General Intelligence (AGI) is an exercise in finding the right set of inductive biases that make fast learning possible while being general enough to be widely applicable in tasks that humans excel at,” the Vicarious AI researchers write.

Human and animal brains are proof that such biases exist. Every brain has evolved to solve, in a flexible way, the problems specific to the body it occupies.

But instead of reverse-engineering the circuits of the brain, the researchers suggest looking at the mechanisms of the mind from a functional perspective. Research shows that humans owe their superior and generalizable intelligence to the neocortex, the outer layer of the brain found in mammals.

“Functionally, the neocortex, in combination with the hippocampal system is responsible for the internalization of external experience, by building rich causal models of the world. In humans and other mammals, these models enable perception, action, memory, planning, and imagination,” the researchers write.

Building rich models of the world is what allows us to reason about causes and effects, deal with “what if” counterfactual scenarios, and solve different problems without being instructed on every single instance. This is a key requirement of general intelligence.

“From the moment we are born, we begin using our senses to build a coherent model of the world. As we grow, we constantly refine our model and access it effortlessly as we go about our lives,” the AI researchers write.

For instance, without having ever seen a baseball match, you can look at the following scene and reason about what causes the ball to change direction and what would happen if the ball were flying lower or higher than the bat. This is because we have a solid understanding of how the world works and how objects interact with one another.

“Common sense arises from the distillation of past experience into a representation that can be accessed at an appropriate level of detail in any given scenario,” the authors of the paper write. And this is exactly what is missing from current AI technologies.

But deep learning, the current leading branch of AI that is often compared to the brain, is more akin to the crude form of intelligence found in very basic organisms, the researchers observe. Deep neural networks can optimize their parameters for very narrow tasks, such as detecting cancerous nodules in CT scans, converting voice to text, or beating professionals at complicated video games. But they lack the rich model-building capabilities of the human brain.

One example, on which the authors of the paper have centered their research, is CAPTCHAs. Deep learning algorithms can be trained to solve CAPTCHA challenges, but they require millions of labeled examples, and they can’t deal with situations that deviate from their training examples.

And although scientists continue to make incremental advances by creating bigger neural networks, there hasn’t been any serious breakthrough in creating models that can generalize their capabilities.

“The lesson from evolutionary history is that general intelligence was achieved by the advent of the new architecture—the neocortex—that enabled building rich models of the world, not by an agglomeration of specialized circuits,” the AI researchers write. “What separates function-specific networks from the mammalian brain is the ability to form rich internal models that can be queried in a variety of ways.”

Learning from the brain

In their paper, the AI researchers present a triangular framework to understand intelligent behavior through known properties of the world, the physical structure of the brain, and algorithms. Explaining observations from all three angles can provide better guidance for creating AI algorithms with general problem-solving capabilities.

“The triangulation strategy is about utilizing this world-brain-computation correspondence: When we observe a property of the brain, can we match that property to an organizational principle of the world? Can that property be represented in a computational framework to produce generalizations and learning/inference efficiency?” the authors of the paper write.

The researchers further note that pure machine learning models deal with algorithms and data without considering insights learned from the brain.

One of the key properties of the brain is a “generative model” that allows us to internally visualize things and reason about the world at the abstract and conceptual level. This generative model helps us fill in the gaps in visual scenes and reason about natural language. For instance, when you hear the sentence “Sally hammered a nail in the floor,” you automatically imagine the process, and you don’t need to be explicitly told that Sally was holding the nail vertically.

The goal of the generative model is not to recreate a photorealistic scene. Instead, it should be able to compose the scene in terms of its components and their relations.

An AI algorithm with such properties could perform tasks such as classification (which objects a scene contains), segmentation (which pixels belong to which object), occlusion reasoning (detecting objects that are partially hidden), reasoning, and more. Current deep learning systems can be trained to perform one of these tasks, but not all of them.
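To make the idea of a compositional scene more concrete, here is a minimal Python sketch built on a toy representation of our own (the `SceneObject` and `Scene` classes and their methods are hypothetical, not Vicarious’s code). The scene is stored as components with shapes and a depth ordering rather than as raw pixels, and classification, segmentation, and occlusion reasoning then become different queries against that one representation. A real generative model would have to infer these components from an image; here they are written by hand.

```python
from dataclasses import dataclass, field

Pixel = tuple[int, int]  # (row, col)


@dataclass
class SceneObject:
    """One scene component (hypothetical toy type): instance name, category, shape, depth."""
    name: str
    label: str
    pixels: set[Pixel]  # every pixel the object's full shape covers, hidden or not
    depth: int          # smaller values are closer to the viewer


@dataclass
class Scene:
    objects: list[SceneObject] = field(default_factory=list)

    def classify(self) -> set[str]:
        """Classification: which categories of objects does the scene contain?"""
        return {obj.label for obj in self.objects}

    def segment(self) -> dict[Pixel, str]:
        """Segmentation: map each visible pixel to the object that owns it."""
        owner: dict[Pixel, str] = {}
        # Paint from farthest to nearest so closer objects overwrite farther ones.
        for obj in sorted(self.objects, key=lambda o: o.depth, reverse=True):
            for p in obj.pixels:
                owner[p] = obj.name
        return owner

    def occluded(self) -> set[str]:
        """Occlusion reasoning: objects whose shape is partly hidden by a closer object."""
        visible = self.segment()
        return {obj.name for obj in self.objects
                if any(visible[p] != obj.name for p in obj.pixels)}


# Usage: a ball partly hidden behind a bat.
ball = SceneObject("ball1", "ball", {(0, 0), (0, 1), (1, 0), (1, 1)}, depth=2)
bat = SceneObject("bat1", "bat", {(1, 1), (1, 2), (1, 3)}, depth=1)
scene = Scene([ball, bat])
print(sorted(scene.classify()))  # ['ball', 'bat']
print(scene.segment()[(1, 1)])   # bat1
print(scene.occluded())          # {'ball1'}
```

Because the hidden part of the ball is still part of its stored shape, the same representation can answer “what is here,” “which pixels are whose,” and “what is behind what” without retraining for each question.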

The Recursive Cortical Network (RCN)

Dileep George and Miguel Lázaro-Gredilla, two of the paper’s authors, were among a group of AI researchers that developed the Recursive Cortical Network (RCN) in 2017. RCN draws insights from neuroscience and handles recognition, segmentation, and reasoning in a unified way.

According to the tests the researchers conducted at the time, RCNs were able to solve text-based CAPTCHAs with a small training dataset and with much more flexibility than deep learning models.

The researchers drew insights from neuroscience and the world to develop the RCN algorithm. For instance, experiments show that the human visual system prioritizes shapes and contours over textures. And this is because objects generally maintain their shape, even if their color and texture change under different lighting conditions.

Your mind’s bias for shape and contours is why you don’t need labeled examples to recognize the following odd objects.

“Contour-surface factorization could be a general principle that is used by the cortex to deal with natural signals, and this bias could have been something discovered by evolution,” the researchers observe.
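The following sketch illustrates, under strong simplifying assumptions, why separating contours from surfaces pays off. It assumes a tiny image made of flat-colored regions and models a lighting change as new intensity values for each region; the `render` and `contour` functions and the intensity numbers are hypothetical illustrations, not the RCN implementation. The raw pixel values change completely, but the contour extracted from either image is identical.

```python
Grid = list[list[int]]


def render(regions: Grid, surface: dict[int, int]) -> Grid:
    """Combine a shape map (region id per pixel) with one surface intensity per region."""
    return [[surface[region] for region in row] for row in regions]


def contour(image: Grid) -> set[tuple[int, int]]:
    """Boundary pixels: any pixel whose value differs from its right or lower neighbor, plus that neighbor."""
    h, w = len(image), len(image[0])
    edges: set[tuple[int, int]] = set()
    for r in range(h):
        for c in range(w):
            for rr, cc in ((r, c + 1), (r + 1, c)):
                if rr < h and cc < w and image[rr][cc] != image[r][c]:
                    edges.update({(r, c), (rr, cc)})
    return edges


# Shape: a 2x2 object (region 1) on a background (region 0).
regions = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]
daylight = render(regions, {0: 200, 1: 90})  # hypothetical intensities
dusk = render(regions, {0: 60, 1: 20})        # same scene, darker lighting

print(daylight == dusk)                    # False: every pixel value changed
print(contour(daylight) == contour(dusk))  # True: the shape did not
```

Real surfaces carry texture, which also produces edges inside an object; telling those apart from object boundaries is the harder problem, and this toy example deliberately sidesteps it.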

Deep neural networks, on the other hand, have other biases. For instance, a convolutional neural network can be trained to detect QR codes with very high accuracy, a feat that is beyond the capabilities of most humans. But the same kind of deep learning model, when trained to detect objects in images, struggles in many real-world situations.

“A QR code is not a natural signal of the kind the human visual system has an innate bias toward,” the AI researchers observe, adding that the ability of CNNs to classify QR codes could indicate that they lack human-like biases.

Another interesting property discussed in the paper is hierarchical composition. The human visual system tends to see the world as a composition of nested objects. This is also a key property of the world. For instance, trees are composed of limbs, leaves, and roots, regardless of the shape of each component. And we can distinguish these parts even in a tree that we are seeing for the first time. Other AI researchers, including deep learning pioneer Geoffrey Hinton, are exploring hierarchical composition as a means to generalize computer vision capabilities.

“By mirroring the hierarchical structure of the world, the visual cortex can have the advantage of gradually building invariant representations of objects by reusing invariant representations for object parts. Hierarchical organization is also suitable for efficient learning and inference algorithms,” the authors write.
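Here is a minimal sketch of the reuse idea in that quote, assuming a hand-written part hierarchy (the `HIERARCHY` table and `make_recognizer` function are hypothetical; the models discussed in the paper learn their hierarchies rather than hard-coding them). A composite concept is recognized when all of its parts are, and memoization with `functools.lru_cache` means a shared part such as “leaf” is evaluated once per scene and then reused by every object that contains it.

```python
from functools import lru_cache

# Hypothetical part hierarchy: each composite concept lists the parts it is made of.
# Anything not listed as a key is treated as a primitive detected directly in the input.
HIERARCHY: dict[str, tuple[str, ...]] = {
    "tree": ("trunk", "branch", "leaf"),
    "branch": ("twig", "leaf"),
    "bush": ("branch", "leaf"),
}


def make_recognizer(observed: set[str]):
    """Build a recognizer for one scene, given the primitive features observed in it."""

    @lru_cache(maxsize=None)  # each concept is evaluated at most once per scene
    def recognize(concept: str) -> bool:
        parts = HIERARCHY.get(concept)
        if parts is None:  # primitive: check the observations directly
            return concept in observed
        # Composite: present only if every part is present; shared parts hit the cache.
        return all(recognize(part) for part in parts)

    return recognize


recognize = make_recognizer({"trunk", "twig", "leaf"})
print(recognize("tree"), recognize("bush"))  # True True
print(recognize.cache_info().misses)         # 6 distinct concepts evaluated
```

Recognizing “bush” after “tree” costs almost nothing here, because its “branch” and “leaf” parts were already resolved while recognizing the tree.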

Also worth noting is our visual system’s sensitivity to context and level of detail. We deal with the high variability of the world through feedback mechanisms that take into account local as well as global features. For instance, it is hard to tell what the photo below shows…

…but when the same patch of pixels is viewed against other surrounding details, we can understand what the picture represents.

“Any local observation about the world is likely to be ambiguous because of all the factors of variation affecting it, and hence local sensory information needs to be integrated and reinterpreted in the context of a coherent whole. Feedback connections are required for this,” the AI researchers write.
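One simple way to picture how context disambiguates a local observation is Bayes’ rule: the local patch supplies a likelihood for each interpretation, and the surrounding scene supplies a prior. The sketch below uses made-up hypotheses and probabilities and a single top-down step, and the `interpret` function is hypothetical; it illustrates the principle only, not the feedback mechanism described in the paper, which would refine interpretations iteratively.

```python
def interpret(likelihood: dict[str, float], prior: dict[str, float]) -> dict[str, float]:
    """Posterior over interpretations: P(h | patch, context) ∝ P(patch | h) * P(h | context)."""
    unnormalized = {h: likelihood[h] * prior.get(h, 0.0) for h in likelihood}
    total = sum(unnormalized.values())
    if total == 0:
        raise ValueError("context rules out every interpretation of the patch")
    return {h: v / total for h, v in unnormalized.items()}


# Hypothetical numbers: on its own, the patch fits "eye" and "button" equally well.
patch = {"eye": 0.5, "button": 0.5}
face_context = {"eye": 0.9, "button": 0.1}  # surrounding pixels look like a face
coat_context = {"eye": 0.1, "button": 0.9}  # surrounding pixels look like a coat

print(interpret(patch, face_context))  # "eye" dominates
print(interpret(patch, coat_context))  # "button" dominates
```

With a flat prior, the posterior would simply echo the ambiguous local evidence; the surrounding context is what resolves it.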

Context and feedback can solve many other problems, such as occlusion resolution in CAPTCHAs.

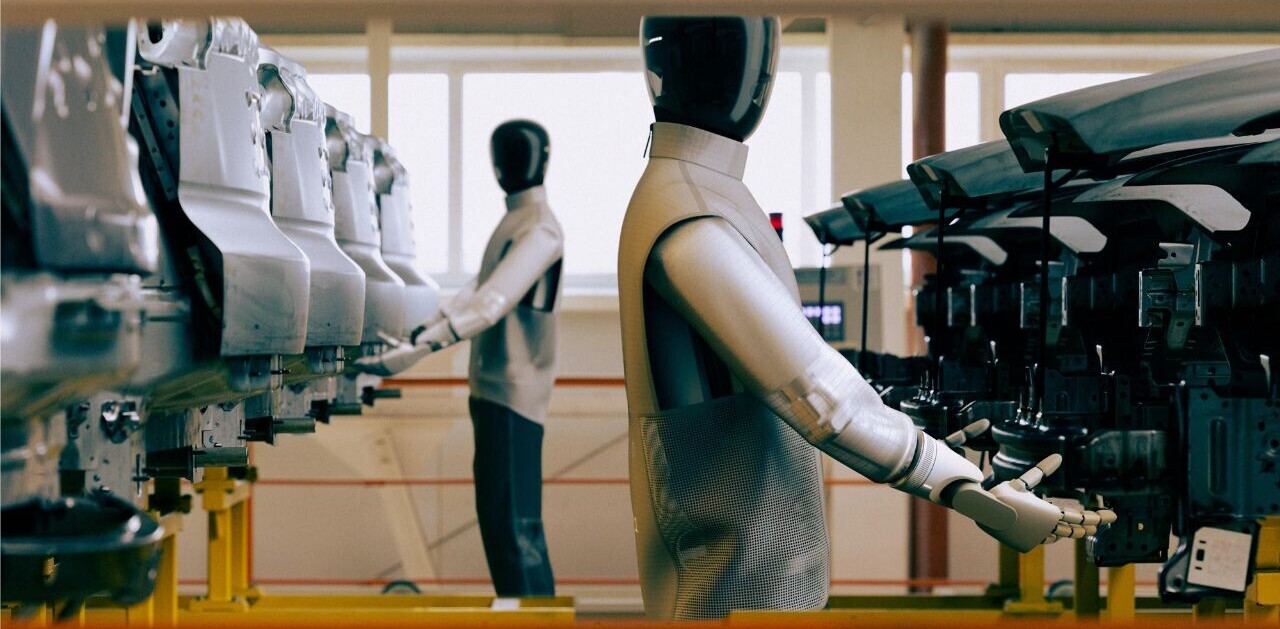

Although the long-term goal is AGI, RCN, which was built on these principles, is already being put to practical use. “We are deploying RCN on robots in warehouses and factories. Vicarious offers robots as a service for solving picking, packing, and assembly problems in high-changeover settings,” George told TechTalks in written comments, adding that the data-efficiency of RCN is a big advantage.

Putting it all together

The work presented by the researchers at Vicarious is one of several efforts that aim to find pathways for codifying true intelligence. Another paper published earlier this year discussed the “dark matter of computer vision” in terms of intuitive functionality, physics, intent, causality, and utility (FPICU).

There are also interesting developments in testing and measuring the level of intelligence in AI systems, including the Abstract Reasoning Corpus (ARC) by Francois Chollet, the creator of the Keras deep learning library. ARC challenges AI systems to learn to solve problems at the abstract level with very few examples.

Dileep George and his colleagues suggest that solving CAPTCHAs in a flexible and data-efficient way would be a good sign that an AI algorithm can solve multiple tasks and is getting us closer to the ultimate goal of AGI.

“Solving text-based captchas was a real-world challenge problem selected for evaluating RCN because captchas exemplify the strong generalization we seek in our models—people can solve new captcha styles without style specific training,” the researchers write.

George and his colleagues will be extending their research to other domains. “We are extending RCN to temporal domains, and then coupling it with concept learning, and finally language. We are also expanding the situations in which RCN is applied in robotics,” he says.

This article was originally published by Ben Dickson on TechTalks, a publication that examines trends in technology, how they affect the way we live and do business, and the problems they solve. But we also discuss the evil side of technology, the darker implications of new tech and what we need to look out for. You can read the original article here.
