You probably wouldn’t want a surgeon to stitch you up if they’d learned their craft by studying YouTube videos. But what about a robot?

The prospect might not be as fanciful as it sounds. Researchers from UC Berkeley, Intel, and Google Brain recently taught an AI model to operate by imitating videos of eight human surgeons at work.

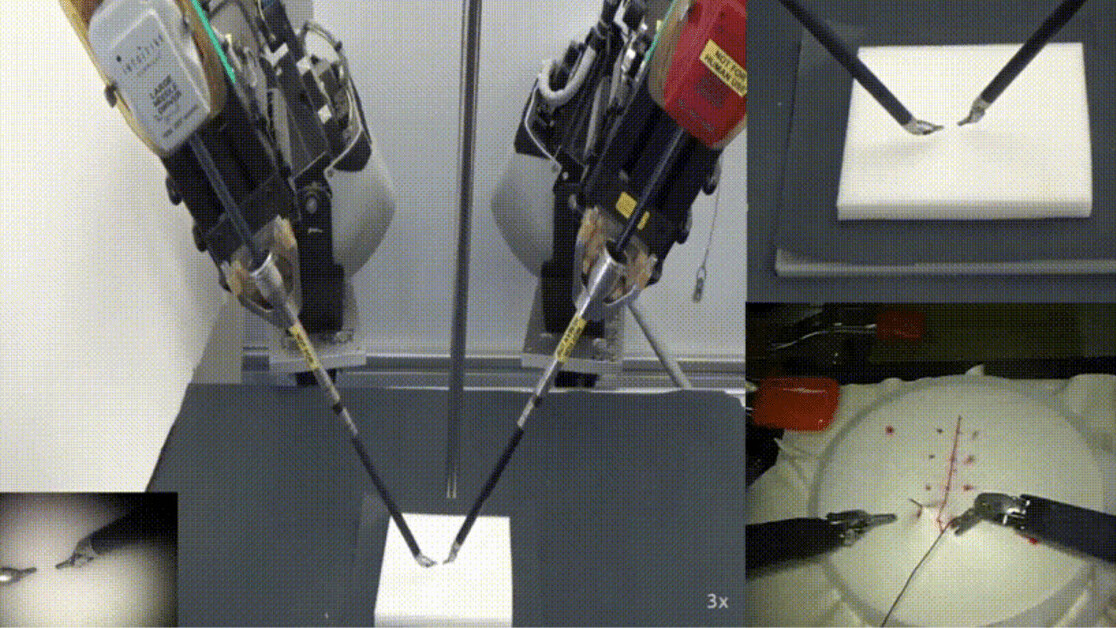

The algorithm — known as Motion2Vec — was trained on footage of medics using da Vinci surgical robots to perform suturing tasks such as needle-passing and knot-tying.

The da Vinci system has been operating on patients — including James Bond on one occasion — since the early 2000s. Typically, the robot is controlled by a doctor from a computer console. But Motion2Vec directs the machine on its own.

It’s already proven its stitching skills on a piece of cloth. In tests, the system replicated the human surgeons’ movements with an accuracy of 85.5%.


Reaching that level of precision was not an easy task. The eight surgeons in the videos used a wide variety of techniques, which made it tricky for the AI to figure out the best approach.

To overcome this challenge, the team used semi-supervised algorithms, which learn a task by analyzing partially labeled datasets. This allowed the AI to understand the surgeons’ essential movements from just a small quantity of labeled video data.

Dr. Ajay Tanwani, who led the UC Berkeley team, told TNW that this created a sample-efficient model:

What we did was combine a small amount of labelled data so that we do not get a hit on the performance, while we were also able to exploit the structures in the unlabelled data.
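
For the curious, the general idea behind semi-supervised learning fits in a few lines of Python. The sketch below is a toy illustration, not the Motion2Vec method: it uses simple self-training with nearest centroids on made-up 2-D points, and the label names (`needle_pass`, `knot_tie`), the function names, and the data are all assumptions made for the example. It starts with two labeled points per class, then repeatedly labels the unlabeled points it is most confident about and folds them back into the model.

```python
import math
import random


def fit_centroids(labeled):
    """Average the feature vectors of each label into one centroid."""
    groups = {}
    for point, label in labeled:
        groups.setdefault(label, []).append(point)
    return {
        label: tuple(sum(axis) / len(points) for axis in zip(*points))
        for label, points in groups.items()
    }


def predict(centroids, point):
    """Return the label of the nearest centroid."""
    return min(centroids, key=lambda label: math.dist(point, centroids[label]))


def self_train(labeled, unlabeled, rounds=5):
    """Grow the labeled set by pseudo-labeling the most confident points."""
    labeled, pool = list(labeled), list(unlabeled)
    for _ in range(rounds):
        if not pool:
            break
        centroids = fit_centroids(labeled)
        # Confidence = gap between the two nearest centroids (needs >= 2 labels).
        scored = []
        for point in pool:
            dists = sorted(math.dist(point, c) for c in centroids.values())
            scored.append((dists[1] - dists[0], point))
        scored.sort(key=lambda item: item[0], reverse=True)
        keep = max(1, len(scored) // 2)  # adopt the most confident half
        labeled += [(p, predict(centroids, p)) for _, p in scored[:keep]]
        # Points still in the pool after the last round stay unlabeled.
        pool = [p for _, p in scored[keep:]]
    return fit_centroids(labeled)


def make_toy_data(seed=0):
    """Hypothetical data: two well-separated clouds of 2-D 'motion features'."""
    rng = random.Random(seed)

    def cloud(cx, cy, n):
        return [(rng.gauss(cx, 0.5), rng.gauss(cy, 0.5)) for _ in range(n)]

    labeled = [(p, "needle_pass") for p in cloud(0, 0, 2)]
    labeled += [(p, "knot_tie") for p in cloud(5, 5, 2)]
    unlabeled = cloud(0, 0, 20) + cloud(5, 5, 20)
    return labeled, unlabeled


labeled, unlabeled = make_toy_data()
model = self_train(labeled, unlabeled)
print(predict(model, (0.3, -0.2)))
print(predict(model, (4.8, 5.1)))
```

Only four of the 44 points carry a human label here. The rest contribute through the structure of the data, which is the trade-off Tanwani describes, though real surgical video needs far richer models than this.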


From the lab to the operating theatre

If you’re still not convinced that a robot surgeon trained on YouTube should be stitching up your wounds, you can relax — for now. Tanwani admits that the system needs a lot of work before it’s sewing up the operating theatre.

He now plans to integrate the needle with different kinds of tissues so the system can adapt to different situations — like an unexpected eruption of blood.

The next big milestone for Tanwani is semi-automated remote surgery. He envisions the robot providing assistance to a physician: snapping to their target, correcting any inaccurate movements, or even stitching up a wound.

Tanwani compares this approach to driver-assist features in semi-autonomous cars:

The surgeon is in total control of the surgery. But at the same time, we want to provide features that can take care of some mundane tasks, to reduce the cognitive load on the surgeons and increase their productivity to potentially focus on more patients and complex cases as well.

Just like the transition towards autonomous vehicles, these incremental advances should help gain the trust of users. Ultimately, Tanwani believes the semi-supervised approach could bring AI to a range of real-world applications:

The internet is filled with so much unstructured information in the form of videos, images, and text. The idea here was that if the robots can mine useful content from it to make sense of this data in the same way as humans do, robots can fulfill the long-standing goal of AI: to provide assistance in performing everyday life tasks.
