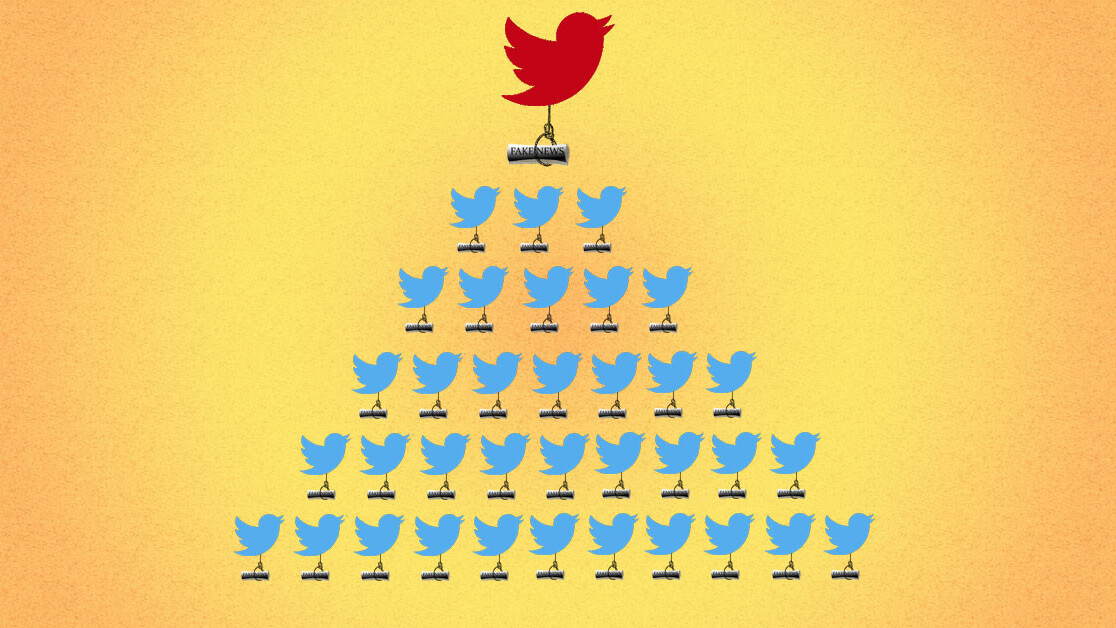

With the entire world seemingly up in arms over “fake news,” you’d think it would have been wiped out by now. Yet low-credibility content continues to thrive on social media thanks to the disproportionately influential effect that social media bots have on humans.

A team of researchers from Indiana University at Bloomington recently conducted a ground-breaking study to determine how low-quality news sites manage to reach so many people with dubious articles. And, as you might guess, the answer is bots.

The problem

TNW spoke with Filippo Menczer, the professor who led the research. He told us that despite the fact that bots make up a very small percentage of accounts tweeting low-credibility stories, they have an immense impact on the spread of these articles on Twitter.

The team’s research indicates that bots make a story appear popular the moment it’s published and put it in front of political influencers who are susceptible to sharing low-credibility content.

Junior just posted a photoshopped photo of his dad's approval ratings & artificially inflated Trump's rating so that it's higher than Obama's pic.twitter.com/eERSNsQJop

— William LeGate (@williamlegate) August 8, 2018

According to the team’s recently published research paper:

Bots amplify such content in the early spreading moments, before an article goes viral. They also target users with many followers through replies and mentions. Humans are vulnerable to this manipulation, resharing content posted by bots. Successful low-credibility sources are heavily supported by social bots.

The team analyzed 13.6 million tweets linking to low-credibility content, then ran simulations where they determined that eliminating the bots would result in a 70 percent decrease in retweets of low-credibility articles.
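
A toy calculation can illustrate the kind of what-if analysis described here. The sketch below is not the researchers' model. The retweet records, the bot scores, and the 0.5 threshold are all invented for illustration. It drops every retweet that was posted by a suspected bot or that reshares a suspected bot, then reports the percentage decrease.

```python
from typing import Dict, List, Tuple

# Hypothetical records: (account that retweeted, account whose post was retweeted).
RETWEETS: List[Tuple[str, str]] = [
    ("bot_a", "lowcred_site"),
    ("bot_b", "lowcred_site"),
    ("human_1", "bot_a"),
    ("human_2", "bot_a"),
    ("human_3", "bot_b"),
    ("human_4", "human_1"),
    ("human_5", "lowcred_site"),
    ("bot_a", "human_5"),
    ("human_6", "human_5"),
    ("human_7", "human_5"),
]

# Hypothetical bot-likelihood scores in [0, 1]; unlisted accounts default to 0.0.
BOT_SCORES: Dict[str, float] = {"bot_a": 0.9, "bot_b": 0.8}


def retweet_drop(
    retweets: List[Tuple[str, str]],
    bot_scores: Dict[str, float],
    threshold: float = 0.5,
) -> float:
    """Return the percentage of retweets lost when suspected bots are removed."""
    if not retweets:
        return 0.0

    def is_bot(account: str) -> bool:
        return bot_scores.get(account, 0.0) >= threshold

    # Keep only retweets where neither side of the exchange is a suspected bot.
    kept = [(who, source) for who, source in retweets
            if not is_bot(who) and not is_bot(source)]
    return 100.0 * (len(retweets) - len(kept)) / len(retweets)


if __name__ == "__main__":
    print(f"Retweets lost without bots: {retweet_drop(RETWEETS, BOT_SCORES):.0f}%")
```

This filter only removes retweets that touch a bot directly. It does not model knock-on effects, such as a human who would never have seen a story without a bot's early push, so a fuller simulation would need to account for how each cascade unfolds.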

It’s obvious that low-credibility content relies on bots to spread, but detecting which accounts are bots is an even bigger problem. Menczer told TNW that it’s a systemic issue with social media, not just a problem with Twitter. But Twitter’s data is the most accessible, so the team focused on that.

Unfortunately, due to the sheer size and scope of social media, it’s no longer possible to figure out what’s going on with basic analytics or user polls. The Indiana University team had to build a machine learning system to identify bots and a separate analysis platform to visualize how information spreads on Twitter.

Called Botometer and Hoaxy, respectively, the two platforms allowed Menczer and his team to determine which accounts were (probably) bots and how effective those bots were.

The solution

We’ve reached the point of no return when it comes to subjective truth on social media. Bad actors can use Twitter as a Petri dish to test out lies, making it easy to determine which ones will work as talking points for politicians. And low-credibility content outlets can get their stories to go viral with little to no effort, creating a fake news feedback loop.

One way to fight this problem is to limit or eliminate bots. Despite all the good they can do (such as spreading legitimate news, helping your small business advertise and engage with customers, and providing an often hilarious form of entertainment), they’re also a powerful tool for bypassing people’s common sense.

But banning bots is a knee-jerk reaction. It’d be difficult to enforce and problematic for people who legitimately use bots for entertainment or academic purposes.

AAAAAAAAAAAAAAAAHHHHHHHHHHHHHHH

— Endless Screaming ⚧ ☭ (@infinite_scream) November 27, 2018

California recently introduced legislation that would criminalize the operation of a bot unless its creator made it explicit in the bot’s social media profile that it wasn’t a human-operated account. This type of measure may stem the tide of bots whose creators live in California, but chances are it’ll be difficult to enforce any such law.

Unfortunately, the only real solution, for the moment, is to be better consumers.

Sinan Aral, an expert on information diffusion in social networks at MIT who wasn’t involved in the Indiana University team’s study, told Science News:

We’re part of this problem, and being more discerning, being able to not retweet false information, that’s our responsibility.

So, since these billion-dollar technology companies can’t figure out how to make sure their services aren’t being exploited by simple lines of code, we get to spend our time policing their networks for free.

But, realistically, most of us don’t have the time to Google every news story that seems dubious. Worse, it can take hours or days after a story breaks before the respected fact-checkers decide whether a story is “fake news” or not.

One answer is to automate fact checking in real time, using AI, so that consumers have the opportunity to decide before they read a story how much stock to place in it. It’s not exactly an elegant solution, but it could provide a bridge back for those who’ve found themselves lost in a sea of fake news.

To that end, TNW spoke with Arjun Moorthy, Founder and CEO of OwlFactor, a company that’s built a browser plug-in that uses machine learning to determine whether an article meets a specific set of reporting standards or not.

We asked Moorthy what his hopes were for OwlFactor’s article ratings:

The end-game is to use these ratings to surface high-quality content in a way that’s different from other sites. Most sites, particularly on social media, highlight content based on what’s most popular. Usually there’s a share/like/heart metric driving this, or maybe the public prominence of the writer, i.e. followers/fans. OwlFactor surfaces content based on its rating system without consideration of popularity metrics.
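
The difference between the two approaches comes down to which field the sort uses. The sketch below is purely illustrative and is not OwlFactor's system: the `Article` fields, the share counts, and the 0-to-1 `rating` values are all hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Article:
    title: str
    shares: int    # popularity signal, e.g. shares/likes (hypothetical values)
    rating: float  # hypothetical quality score between 0 and 1


ARTICLES = [
    Article("Shocking claim goes viral", shares=90_000, rating=0.20),
    Article("Well-sourced investigation", shares=4_000, rating=0.95),
    Article("Balanced policy explainer", shares=12_000, rating=0.80),
]


def rank_by_popularity(articles: List[Article]) -> List[Article]:
    """Order articles the way a popularity-driven feed would."""
    return sorted(articles, key=lambda a: a.shares, reverse=True)


def rank_by_rating(articles: List[Article]) -> List[Article]:
    """Order articles by quality rating alone, ignoring share counts."""
    return sorted(articles, key=lambda a: a.rating, reverse=True)


if __name__ == "__main__":
    print([a.title for a in rank_by_popularity(ARTICLES)])
    print([a.title for a in rank_by_rating(ARTICLES)])
```

With this made-up data, the most-shared article tops the popularity feed but lands last when the ranking ignores share counts.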

This could help people wean themselves from the idea that an article’s popularity is indicative of its quality. And, since it seems like OwlFactor isn’t trying to tell you what to read (though the company does hope to provide an aggregation service), its unbiased insight could prove useful to people on both sides of the political spectrum.

We’re being inundated with social media bots and the people who are influenced by them. It’d be short-sighted, at this point, for anyone who has participated in political discourse on social media to believe they haven’t been influenced by misinformation campaigns.

And it’s only going to get worse as we get closer to the next US presidential election in 2020.
