Some of Google’s top scientists discussed the future of artificial intelligence today, and the message was one of tempered expectations, something we hadn’t seen much of at the Google I/O event.

The field of artificial intelligence exists in two states that, at first glance, appear diametrically opposed. In one, here in 2018, computers still need a few hints to figure out, most of the time, what a cat looks like, a task most toddlers handle with near-perfect accuracy. In the other, fully autonomous vehicles and superhuman AI-powered diagnostic tools for doctors are functionally available now.

Figuring out what’s possible in artificial intelligence today is a full-time job in and of itself. That’s why it’s important to start with a fundamental question.

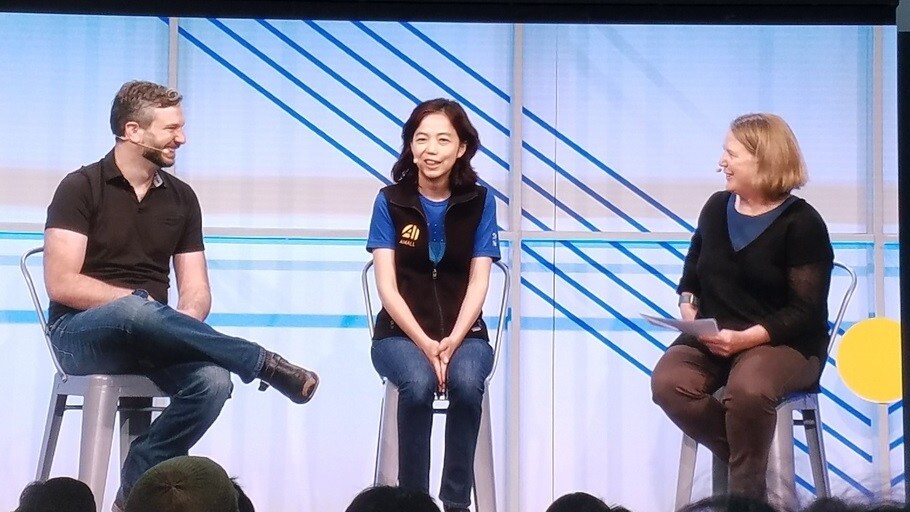

Fei-Fei Li, Chief Scientist of Machine Learning and Artificial Intelligence for Google Cloud, says that question is:

Can machines think?

The answer today, according to Google, is a resounding no. When asked if solving image recognition was enough for her to believe we’re on the way to machines that truly think, Li simply says “No, that’s not enough.”

It seems we’re nowhere near artificial general intelligence (AGI): machines that equal or surpass humans in the ability to think, process, predict, examine, and learn.

Greg Corrado, Principal Scientist at Google and co-founder of the Google Brain program, was asked if we’re close to AGI, and his response sums up what many in the community are thinking:

I really don’t think so. My feeling is: okay we finally got artificial neural networks to be able to recognize cats, and we’ve solved this holy grail problem of image recognition, but that’s only one small sliver, a tiny sliver, of what goes into something like intelligence. We haven’t even scratched the surface. So to me, it’s really just a leap too far to imagine that, having finally cracked pattern recognition after decades of trying, that we would then be on the verge of artificial general intelligence.

The problem is the same for Google’s top researchers as it is for laymen: we’re watching as a technology that was little more than a hobby for cognitive science researchers seven or eight years ago becomes as important to the core development of all technologies as networking is. And, if you believe the experts, in five to ten years machine learning will be as important as electricity.

No more walled gardens

Despite what pundits and so-called experts claim, deep learning doesn’t always happen in a magical void. While some refer to neural networks as ‘alchemy,’ others have a very different view. According to Corrado:

There’s this pathology that AI or deep learning is a black box, but it really isn’t. We didn’t study how it works because, for a long time, it really didn’t work well. But now that it’s working well, there are a lot of tools and techniques that go into examining how these systems work.

And this means that the only way forward is through open-source algorithms, equal-opportunity access to training datasets, and better tools for everyone. Corrado continues:

It’s critical that we share as much as possible about how these things work. I don’t believe these technologies should live in walled gardens but instead we should develop tools that can be used by everyone in the community … The same tools that my applied machine learning team uses to tackle problems that we’re interested in, those same tools are accessible to you to try to solve the same problems in the same way.

The future is centaurs, not killer robots

The future, according to these top researchers, is a world where AI augments nearly everything people do: people plus machines, not people or machines. It’s easy to see that blueprint in Google’s plans; whether you buy into the corporate message or not is up to you. But, much as the bursting of the dot-com bubble did, the Cambridge Analytica scandal has crushed our childlike optimism and innocence. AI runs on data, and that data belongs to humans, not companies.

The future of AI is unwritten. The field of AI is simply too nascent for anyone to predict what’ll happen next. At the same time, however, there’s more than half a century of work in the field indicating that AI is the future of all technology.

Corrado and Li called this “phase two”: after 60 years of development in the field of artificial intelligence, they and their peers around the world are just getting started.

Check out our event page for more Google I/O stories this week, or follow our reporters on the ground until the event wraps on Thursday: @bryanclark and @mrgreene1977
