How many times have you watched a video on a mobile device only to find it badly cropped? It's frustrating, and most of the time there's not much you can do about it.

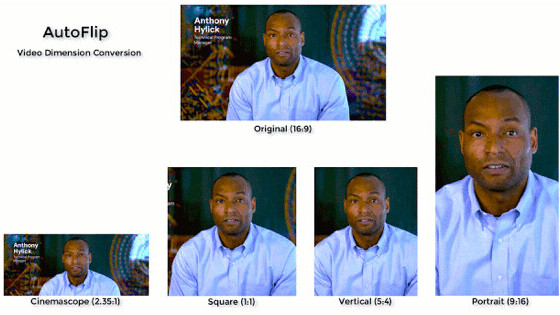

To address this problem, Google's AI team has developed Autoflip, an open-source framework that reframes videos to suit the target device or aspect ratio (landscape, square, portrait, etc.).

Autoflip works in three stages: shot (scene) detection, video content analysis, and reframing. The first stage is shot detection, in which the system has to find the points where the video cuts or jumps from one scene to another. To do this, it compares each frame with the one before it and looks for sudden changes in colors and elements.
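To make the frame-to-frame comparison concrete, here is a minimal sketch that flags a cut when the color histogram of one frame differs sharply from the previous frame's. It is a simplified illustration, not Autoflip's code: frames are assumed to be plain lists of RGB tuples, and the bin count and the 0.5 threshold are arbitrary assumptions.

```python
from typing import List, Sequence, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each 0-255
Frame = Sequence[Pixel]       # assumption: a frame flattened to a list of pixels


def color_histogram(frame: Frame, bins_per_channel: int = 4) -> List[float]:
    """Quantize each pixel into a coarse RGB bin and return a normalized histogram."""
    step = 256 // bins_per_channel
    hist = [0.0] * (bins_per_channel ** 3)
    for r, g, b in frame:
        idx = (r // step) * bins_per_channel ** 2 + (g // step) * bins_per_channel + (b // step)
        hist[idx] += 1
    return [count / len(frame) for count in hist]


def histogram_distance(h1: List[float], h2: List[float]) -> float:
    """Half the L1 distance: 0.0 for identical histograms, 1.0 for disjoint ones."""
    return 0.5 * sum(abs(a - b) for a, b in zip(h1, h2))


def detect_shot_boundaries(frames: Sequence[Frame], threshold: float = 0.5) -> List[int]:
    """Return the indices of frames that start a new shot (hypothetical threshold)."""
    boundaries: List[int] = []
    prev_hist = None
    for i, frame in enumerate(frames):
        hist = color_histogram(frame)
        if prev_hist is not None and histogram_distance(prev_hist, hist) > threshold:
            boundaries.append(i)
        prev_hist = hist
    return boundaries


# Example: two solid-red frames followed by two solid-blue frames.
red = [(255, 0, 0)] * 4
blue = [(0, 0, 255)] * 4
print(detect_shot_boundaries([red, red, blue, blue]))  # [2]
```

Histograms ignore where colors appear in the frame, which makes the comparison tolerant of camera motion within a shot while still reacting strongly to a hard cut.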

Once a shot has been identified, Autoflip moves on to content analysis to find the important objects in each scene. It uses deep learning models to detect not just people and animals, but also motion and moving balls in sports footage, and logos in commercials.
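One way to picture how detections feed the later cropping step is to weight each detected object by how important its category is and then take the smallest box that covers everything important. The sketch below does that; the `Detection` type, the `LABEL_WEIGHTS` values, and the `min_importance` cutoff are all hypothetical and are not taken from Autoflip.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels


@dataclass
class Detection:
    label: str    # e.g. "face", "ball", "logo"
    score: float  # detector confidence, 0-1
    box: Box


# Hypothetical per-category importance weights, not Autoflip's actual values.
LABEL_WEIGHTS: Dict[str, float] = {
    "face": 1.0, "person": 0.8, "ball": 0.9, "animal": 0.7, "logo": 0.6,
}


def region_of_interest(detections: List[Detection], min_importance: float = 0.5) -> Optional[Box]:
    """Smallest box covering every detection whose weight x confidence reaches
    min_importance, or None if nothing qualifies. Unknown labels get weight 0."""
    important = [
        d for d in detections
        if LABEL_WEIGHTS.get(d.label, 0.0) * d.score >= min_importance
    ]
    if not important:
        return None
    return (
        min(d.box[0] for d in important),
        min(d.box[1] for d in important),
        max(d.box[2] for d in important),
        max(d.box[3] for d in important),
    )


detections = [
    Detection("face", 0.9, (100, 50, 200, 150)),
    Detection("ball", 0.8, (400, 300, 420, 320)),
    Detection("logo", 0.5, (0, 0, 50, 50)),  # 0.6 * 0.5 = 0.3, below the cutoff
]
print(region_of_interest(detections))  # (100, 50, 420, 320)
```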

In the final stage, Autoflip chooses between a stationary mode, for scenes that take place in a single space, and a tracking mode, for scenes in which the objects of interest keep moving. Based on that choice and the target dimensions the video needs to be displayed in, Autoflip crops each frame so that it retains the content of interest while reducing jitter.
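The sketch below illustrates both modes for a landscape-to-portrait conversion, assuming a full-height crop that only slides horizontally. The crop width follows from the target aspect ratio: a 9:16 crop of a 1920x1080 frame is round(1080 x 9 / 16) = 608 pixels wide. The rule for picking a mode (how far the region of interest drifts during the shot) and the exponential moving average used to damp jitter are stand-ins chosen for illustration, not necessarily how Autoflip makes these decisions; `plan_crops` and its parameters are hypothetical.

```python
from typing import List, Tuple


def crop_width_for_aspect(frame_w: int, frame_h: int, target_w: int, target_h: int) -> int:
    """Width of a full-height crop with the target aspect ratio (e.g. 9:16)."""
    return min(frame_w, round(frame_h * target_w / target_h))


def clamp(value: int, low: int, high: int) -> int:
    return max(low, min(value, high))


def plan_crops(centers_x: List[float], frame_w: int, frame_h: int,
               target_w: int, target_h: int,
               stationary_tolerance: float = 0.05,
               smoothing: float = 0.2) -> Tuple[str, List[int]]:
    """Return (mode, crop x-offsets), one offset per frame of a single shot.

    centers_x: x-coordinate of the region of interest in each frame.
    stationary_tolerance: max drift (fraction of frame width) for stationary mode.
    smoothing: EMA factor for tracking mode; smaller values damp jitter more.
    """
    crop_w = crop_width_for_aspect(frame_w, frame_h, target_w, target_h)
    max_offset = frame_w - crop_w
    drift = max(centers_x) - min(centers_x)

    if drift <= stationary_tolerance * frame_w:
        # Stationary mode: one fixed crop centered on the average position.
        mean_x = sum(centers_x) / len(centers_x)
        offset = clamp(round(mean_x - crop_w / 2), 0, max_offset)
        return "stationary", [offset] * len(centers_x)

    # Tracking mode: follow the subject, smoothing with an exponential moving average.
    offsets: List[int] = []
    smoothed = centers_x[0]
    for x in centers_x:
        smoothed = smoothing * x + (1 - smoothing) * smoothed
        offsets.append(clamp(round(smoothed - crop_w / 2), 0, max_offset))
    return "tracking", offsets


print(plan_crops([960, 970, 955], 1920, 1080, 9, 16))    # ('stationary', [658, 658, 658])
print(plan_crops([400, 1500, 1500], 1920, 1080, 9, 16))  # ('tracking', [96, 316, 492])
```

In the tracking example the subject jumps from x=400 to x=1500, but the crop moves toward it over several frames instead of snapping, which is the kind of jitter reduction the article describes.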

Google's researchers said that Autoflip can convert videos to many formats and screen sizes with little effort. Next, the team wants to improve object tracking in interviews and animated films, and to use text detection and image inpainting techniques to better position foreground and background objects within the reframed video.

You can check out Autoflip's code here.
