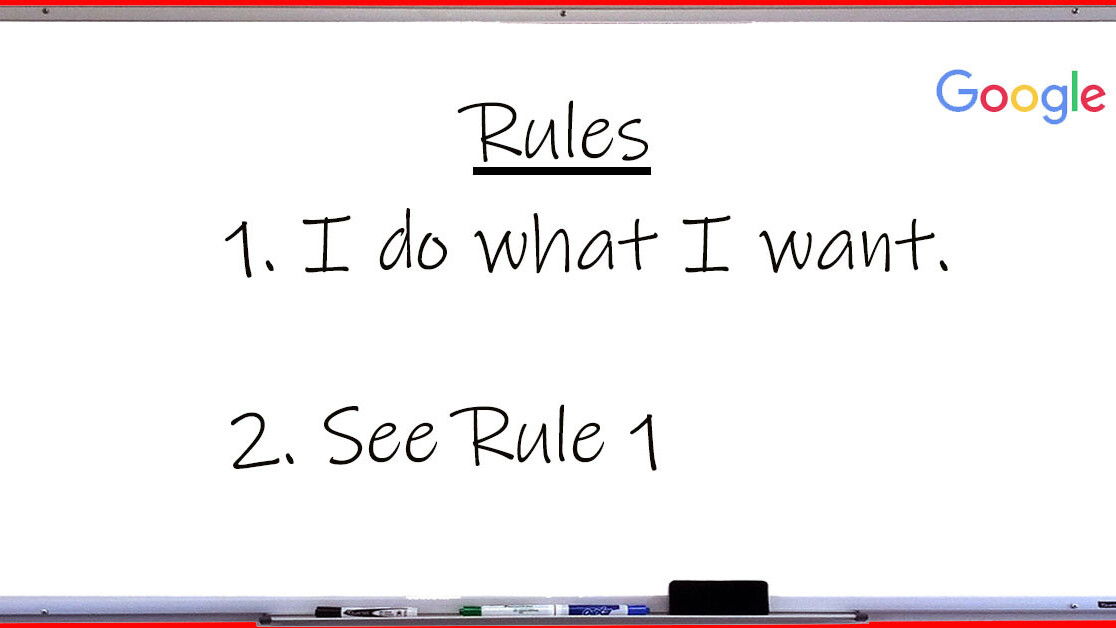

Google CEO Sundar Pichai yesterday published his company’s new rules governing the development of AI. Over the course of seven principles, he lays out a broad (and useless) policy that leaves more wiggle room than a pair of clown pants.

If you tell the story of Google’s involvement in building AI for the US military backwards, it makes perfect sense. Told that way, the tale would begin with the Mountain View company creating a policy for developing AI and then using those principles to guide its actions.

Unfortunately, the reality is that the company has been developing AI for as long as it’s been around. It’s hard to gloss over the fact that only now, after the company’s ethics have been called into question over a military contract, is the CEO concerned about having these guidelines.

Of course, this isn’t to suggest that Google has been developing AI technology with no oversight. In fact, it’s clear that Google’s engineers, researchers, and scientists are among the world’s finest, and many of those employees are of the highest ethical character. But at the company level, it feels like the lawyers are running the show.

No, my point is that Pichai’s blog post is nothing more than a thinly veiled trifle aimed at technology journalists and other pundits in the hope that we’ll fawn over declarative statements like “Google won’t make weapons.” Unfortunately, there’s no substance to any of it.

It starts with the first principle of Google’s new AI policy: be socially beneficial. This section pays lip service to developing AI that benefits society, but it doesn’t discuss what that means or how Google will accomplish such an abstract goal.

Oddly, the final sentence under principle one is “And we will continue to thoughtfully evaluate when to make our technologies available on a non-commercial basis.” That’s just a word salad with no more depth than saying “Google is a business that will keep doing business stuff.”

Instead of “be socially beneficial,” I would have much preferred to see something more like “refuse to develop AI for any entity that doesn’t have a clear set of ethical guidelines for its use.”

Unfortunately, as leaked emails show, Google’s higher-ups were more concerned with government certifications than with ethical considerations when they entered into a contract with the US government, an entity with no formal ethical guidelines on the use of AI.

On their face, the seven principles Pichai lays out are general bullet points that read like cover-your-own-ass statements. And each corresponds to a very legitimate concern that the company seems to be avoiding. After the aforementioned first principle, it just gets more vapid:

- “Avoid creating or reinforcing unfair bias.” This, instead of a commitment to developing methods to fight bias.

- “Be built and tested for safety.” Pichai says “We will continue to develop and apply strong safety and security practices to avoid unintended results that create risks of harm.” It’s interesting that Pichai’s people don’t seem to think there’s any risk of unintended consequences for teaching the military how to develop image processing AI for drones.

- “Be accountable to people.” Rather than “develop AI with transparency,” which would be great, this just says Google will ultimately hold a human responsible for creating its AI.

- “Incorporate privacy design principles.” Apple just unveiled technology designed to keep big data companies from gathering your data. Google just said it cares about privacy. Actions speak louder than words.

- “Uphold high standards of scientific excellence.” Google’s research happens inside an internal scientific echo chamber. Numbers 4, 5, and 6 should be replaced with “be transparent.”

- “Be made available for uses that accord with these principles.” In this same document, Pichai points out that Google makes a large amount of its AI work available as open-source code. It’s easy to say you’ll only develop AI with the best of intentions and use it only for good, as long as you take no responsibility for how it’s used once your company’s geniuses finish inventing it.

Pichai’s post on Google’s AI principles serves little purpose other than to, perhaps, eventually end up as a hyperlinked reference in a future apology.

If Google wants to fix its recently tarnished reputation, it should take the issue of developing AI seriously enough to come up with a realistic set of principles to guide future development, one that addresses the ethical concerns head-on. Its current attempt is nothing more than seven shades of gray area, and that doesn’t help anyone.
