Landscape photography is hard, no matter how beautiful an environment you’re shooting in. You need to be well-versed in composition, deal with weather conditions, know how to adjust your camera settings for the best possible shot, and then edit it to come up with a pleasing picture.

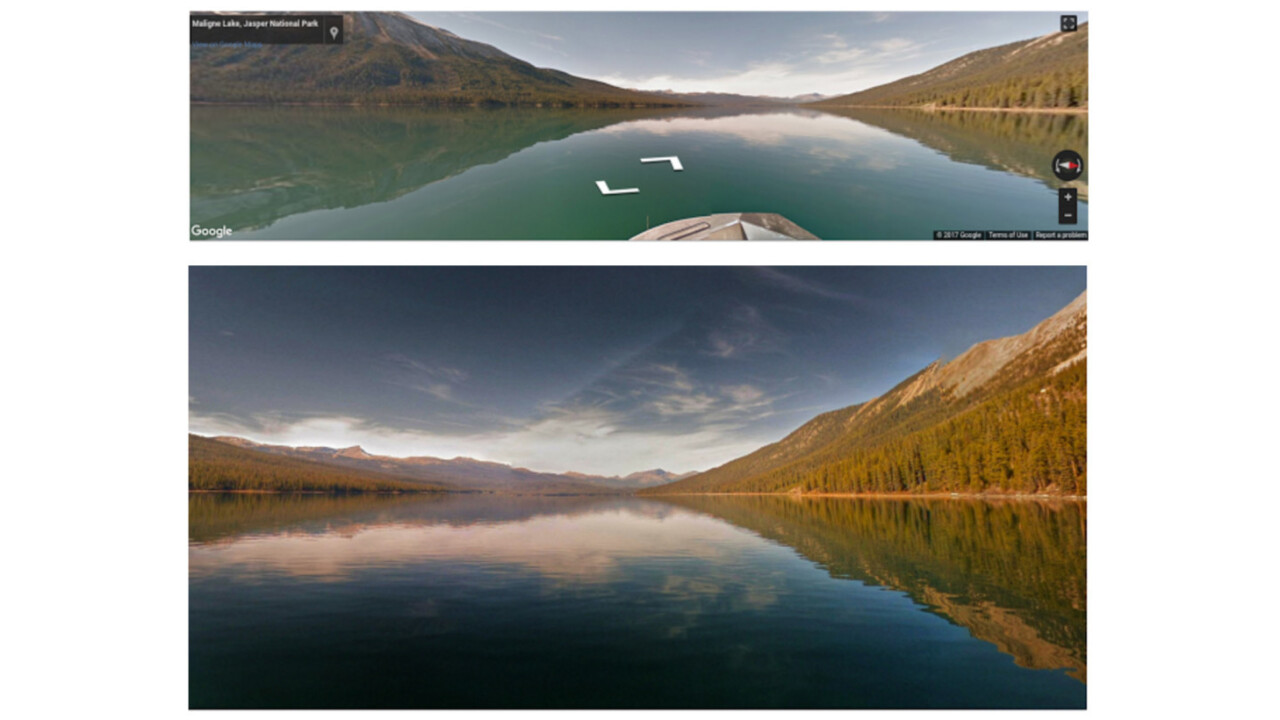

Google might be close to solving the last part of that puzzle: a couple of its Machine Perception researchers have trained a deep-learning system to identify objectively fine landscape panorama photos from Google Street View, and then artistically crop and edit them like a human photographer would. Here’s some of its handiwork:

The results don’t just speak for themselves: Google showed a bunch of these photos, along with others from various sources, and asked several pro photographers to grade them for quality; about 40 percent of Google’s submissions were perceived as being created by ‘semi-pro’- or ‘pro’-level photographers.

What’s especially interesting is that the AI is capable of applying contextually meaningful adjustments in different parts of each photograph, making for dramatic lighting and more compelling images – as opposed to simply applying a filter to the entire picture or adding something predictable like a vignette.
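To make the difference between a whole-picture filter and a region-specific edit concrete, here is a toy sketch in Python. It is not Google's method: the function names, the tiny 2x3 grayscale "image", and the hand-made mask are all assumptions made up for illustration, and a real system would have to work out for itself which regions to adjust.

```python
def clamp(value):
    """Keep a brightness value inside the valid [0.0, 1.0] range."""
    return max(0.0, min(1.0, value))


def apply_global_gain(image, gain):
    """Scale every pixel by the same gain, like a one-size-fits-all filter."""
    return [[clamp(pixel * gain) for pixel in row] for row in image]


def apply_masked_gain(image, mask, gain):
    """Scale each pixel by a gain weighted by a per-pixel mask.

    mask values run from 0.0 (leave the pixel alone) to 1.0 (full gain).
    """
    return [
        [clamp(pixel * (1.0 + (gain - 1.0) * weight))
         for pixel, weight in zip(row, mask_row)]
        for row, mask_row in zip(image, mask)
    ]


# Toy 2x3 grayscale "landscape": bright sky on top, dark foreground below.
image = [
    [0.8, 0.8, 0.8],
    [0.2, 0.2, 0.2],
]
# Hypothetical hand-made mask that selects only the foreground row.
mask = [
    [0.0, 0.0, 0.0],
    [1.0, 1.0, 1.0],
]

global_result = apply_global_gain(image, 1.5)        # brightens everything
local_result = apply_masked_gain(image, mask, 1.5)   # brightens foreground only
```

With the global gain, the already-bright sky gets pushed to the maximum value and loses detail; with the mask, only the dark foreground is lifted and the sky is left untouched.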

I imagine this research will eventually find its way into Google Photos’ editing tools, or into the company’s other mobile editing app, Snapseed.

You can take a look at more AI-edited landscape pictures in this gallery, and find the research paper published by Google’s Hui Fang and Meng Zhang here.
