Deep learning shows a lot of promise in healthcare, especially in medical imaging, where it can help improve the speed and accuracy of diagnosing patient conditions. But it also faces a serious barrier: the shortage of labeled training data.

In medical contexts, training data comes at a great cost, which makes it very difficult to use deep learning for many applications.

To overcome this hurdle, scientists have explored different solutions with varying degrees of success. In a new paper, artificial intelligence researchers at Google suggest a new technique that uses self-supervised learning to train deep learning models for medical imaging. Early results show that the technique can reduce the need for annotated data and improve the performance of deep learning models in medical applications.

Supervised pre-training

Convolutional neural networks (CNNs) have proven to be very efficient at computer vision tasks. Google is one of several organizations that have been exploring their use in medical imaging. In recent years, the company’s research arm has built several medical imaging models in domains like ophthalmology, dermatology, mammography and pathology.

“There is a lot of excitement around applying deep learning to health, but it remains challenging because highly accurate and robust DL models are needed in an area like healthcare,” Shekoofeh Azizi, AI resident at Google Research and lead author of the self-supervised paper, told TechTalks.

One of the key challenges of deep learning is the need for huge amounts of annotated data. Large neural networks require millions of labeled examples to reach optimal accuracy. In medical settings, data labeling is a complicated and costly endeavor.

“Acquiring these ‘labels’ in medical settings is challenging for a variety of reasons: it can be time-consuming and expensive for clinical experts, and data must meet relevant privacy requirements before being shared,” Azizi said.

For some conditions, examples are scarce to begin with, and in others, such as breast cancer screening, it may take many years for the clinical outcomes to manifest after a medical image is taken.

Further complicating the data requirements of medical imaging applications are distribution shifts between training data and deployment environments, such as changes in the patient population, disease prevalence or presentation, and the medical technology used for imaging acquisition, Azizi added.

One popular way to address the shortage of medical data is to use supervised pre-training. In this approach, a CNN is initially trained on a dataset of labeled images such as ImageNet. This phase tunes the parameters of the model’s layers to the general patterns found in all kinds of images. The trained deep learning model can then be fine-tuned on a limited set of labeled examples for the target task.

Several studies have shown supervised pre-training to be helpful in applications such as medical imaging, where labeled data is scarce. However, supervised pre-training also has its limits.

“The common paradigm for training medical imaging models is transfer learning where models are first pre-trained using supervised learning on ImageNet. However, there is a large domain shift between natural images in ImageNet and medical images, and previous research has shown such supervised pre-training on ImageNet may not be optimal for developing medical imaging models,” Azizi said.

Self-supervised pre-training

Self-supervised learning has emerged as a promising area of research in recent years. In self-supervised learning, the deep learning models learn the representations of the training data without the need for labels. If done right, self-supervised learning can be of great advantage in domains where labeled data is scarce and unlabeled data is abundant.

Outside of medical settings, Google has developed several self-supervised learning techniques to train neural networks for computer vision tasks. Among them is the Simple Framework for Contrastive Learning (SimCLR), which was presented at the ICML 2020 conference. Contrastive learning uses different crops and variations of the same image to train a neural network until it learns representations that are robust to changes.
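To make the contrastive idea concrete, here is a minimal sketch in plain Python of the kind of loss SimCLR is built on (the normalized temperature-scaled cross-entropy loss, often called NT-Xent). It is an illustration under assumptions, not Google's implementation: the function names `cosine` and `nt_xent_loss`, the default temperature, and the use of plain lists as embeddings are all hypothetical choices made for readability. A real system would produce the embeddings with a neural network and compute the loss with a tensor library.

```python
import math


def cosine(u, v):
    """Cosine similarity between two embedding vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def nt_xent_loss(views_a, views_b, temperature=0.5):
    """Contrastive loss over a batch of paired views.

    views_a[i] and views_b[i] are embeddings of two augmented
    versions of the same image i. Every other embedding in the
    batch is treated as a negative example. The temperature
    default is an illustrative assumption.
    """
    z = list(views_a) + list(views_b)
    n = len(views_a)
    total = 0.0
    for i in range(2 * n):
        positive = (i + n) % (2 * n)  # index of the matching view
        pos_logit = cosine(z[i], z[positive]) / temperature
        # Compare the anchor with every embedding except itself.
        logits = [
            cosine(z[i], z[k]) / temperature
            for k in range(2 * n)
            if k != i
        ]
        log_denominator = math.log(sum(math.exp(x) for x in logits))
        total += log_denominator - pos_logit
    return total / (2 * n)
```

The loss falls when the two views of the same image sit close together in embedding space and rises when a view is closer to some other image, which is what pushes the network toward representations that survive cropping and other changes.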

In their new work, the Google Research team used a variation of the SimCLR framework called Multi-Instance Contrastive Learning (MICLe), which learns stronger representations by using multiple images of the same condition. Such images are common in medical datasets, which often contain multiple images of the same patient, even when those images are not annotated for supervised learning.

“Unlabeled data is often available in large quantities in various medical domains. One important difference is that we utilize multiple views of the underlying pathology commonly present in medical imaging datasets to construct image pairs for contrastive self-supervised learning,” Azizi said.

When a self-supervised deep learning model is trained on different viewing angles of the same target, it learns representations that are more robust to changes in viewpoint, imaging conditions, and other factors that might negatively affect its performance.
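The pair-construction idea can be sketched in a few lines of plain Python. This is a hedged illustration rather than the researchers' code: the function name `build_positive_pairs`, the `(patient_id, image_id)` record format, and the fallback for patients with a single image (pairing the image with itself, so that two augmentations of it can serve as the pair) are assumptions made for the example.

```python
import random
from collections import defaultdict


def build_positive_pairs(records, seed=0):
    """Group unlabeled images by patient and pick one positive pair each.

    records: iterable of (patient_id, image_id) tuples. No diagnostic
    labels are needed, only the knowledge of which images belong to
    the same patient.
    """
    rng = random.Random(seed)
    images_by_patient = defaultdict(list)
    for patient_id, image_id in records:
        images_by_patient[patient_id].append(image_id)

    pairs = []
    for patient_id, image_ids in images_by_patient.items():
        if len(image_ids) >= 2:
            # Two distinct images of the same patient form the pair.
            first, second = rng.sample(image_ids, 2)
        else:
            # Assumption: with a single image, pair it with itself and
            # rely on two different augmentations downstream.
            first = second = image_ids[0]
        pairs.append((patient_id, first, second))
    return pairs
```

Each returned pair would then be treated as two views of the same underlying case by a contrastive loss, in place of two crops of a single image.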

Putting it all together

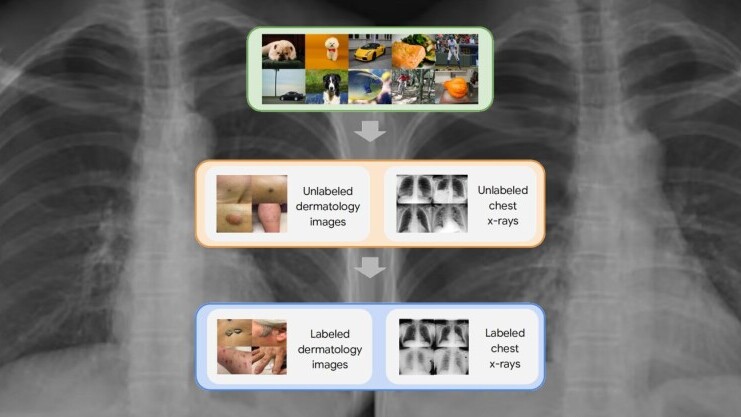

The self-supervised learning framework the Google researchers used involved three steps. First, the target neural network was trained on examples from the ImageNet dataset using SimCLR. Next, the model was further trained using MICLe on a medical dataset that had multiple images for each patient. Finally, the model was fine-tuned on a limited dataset of labeled images for the target application.
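The three steps can be read as a simple staged schedule in which each stage starts from the weights the previous one produced, and only the last stage needs labels. The sketch below shows that structure only. The `Stage` class, the `run_pipeline` function, and the `train_stage` callback are hypothetical names introduced for illustration, and the dataset descriptions are paraphrases, not real dataset identifiers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    name: str
    method: str
    dataset: str
    needs_labels: bool


# Paraphrase of the three steps described in the article.
PIPELINE = [
    Stage("general pre-training", "SimCLR", "ImageNet images", False),
    Stage("domain pre-training", "MICLe", "multi-image medical dataset", False),
    Stage("fine-tuning", "supervised", "small labeled task dataset", True),
]


def run_pipeline(stages, train_stage, weights=None):
    """Run the stages in order, passing weights from one to the next.

    train_stage is a caller-supplied function (hypothetical here) that
    takes a Stage and the current weights and returns updated weights.
    """
    for stage in stages:
        weights = train_stage(stage, weights)
    return weights
```

In this reading, the expensive annotated data is confined to the final, smallest stage, while the first two stages consume only unlabeled images.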

The researchers tested the framework on two tasks: dermatology and chest x-ray interpretation. Compared to supervised pre-training, the self-supervised method provided a significant improvement in the accuracy, label efficiency, and out-of-distribution generalization of medical imaging models, which is especially important for clinical applications. It also required much less labeled data.

“Using self-supervised learning, we show that we can significantly reduce the need for expensive annotated data to build medical image classification models,” Azizi said. In particular, on the dermatology task, they were able to train the neural networks to match the baseline model performance while using only a fifth of the annotated data.

“This hopefully translates to significant cost and time savings for developing medical AI models. We hope this method will inspire explorations in new healthcare applications where acquiring annotated data has been challenging,” Azizi said.

This article was originally published by Ben Dickson on TechTalks, a publication that examines trends in technology, how they affect the way we live and do business, and the problems they solve. But we also discuss the evil side of technology, the darker implications of new tech, and what we need to look out for. You can read the original article here.
