Repeat after me: An AI is only as fair as the data it learns from. And all too often, the world is unfair, with its myriad gender, race, class, and caste biases.

Now, fearing that one such bias might rub users the wrong way, Google has blocked Gmail’s Smart Compose AI tool from suggesting gendered pronouns.

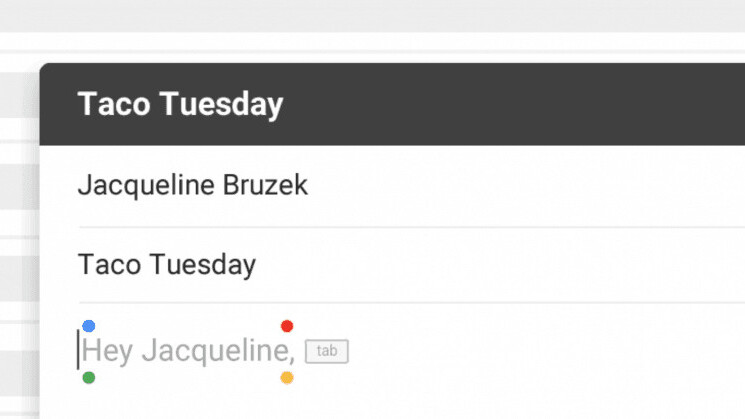

Introduced in May, Gmail’s Smart Compose feature lets users automatically complete sentences as they type them. You can see an example below, with Gmail’s suggested words in gray.

If you still haven’t enabled the feature on your Gmail, you can learn how to do so here.

Smart Compose is an example of natural language generation (NLG), in which computers learn patterns and relationships between words from literature, emails, and web pages in order to write sentences, much like the predictive text feature on your phone’s keyboard or messaging app.

But as I mentioned, Google’s AI was showing worrying signs of bias. Gmail product manager Paul Lambert told Reuters that the problem was discovered in January, when a company researcher typed “I am meeting an investor next week,” and Smart Compose suggested the follow-up question “Do you want to meet him?” instead of “her.”

The bias toward the male pronoun ‘him’ arose because the AI had learned, from emails written and received by Gmail’s 1.5 billion users around the world, that an investor was highly likely to be male and very unlikely to be female.
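To see how skewed data turns into a skewed suggestion, here’s a deliberately oversimplified sketch in Python. It is not how Smart Compose actually works (Google’s system relies on far more sophisticated models); it just counts which pronoun shows up alongside a noun in a handful of made-up sentences. The tiny corpus and the `pronoun_counts` and `suggest_pronoun` helpers are hypothetical, invented purely for illustration.

```python
from collections import Counter, defaultdict

# Hypothetical, made-up training snippets. The skew toward "him" stands in
# for men being over-represented in the real-world text a model learns from.
CORPUS = [
    "meeting an investor tomorrow want to meet him",
    "the investor called and I thanked him",
    "our investor said we should email him",
    "the investor asked me to call her",
    "meeting an engineer today want to meet him",
]

PRONOUNS = {"him", "her", "them"}


def pronoun_counts(corpus):
    """Count how often each pronoun appears in the same snippet as each other word."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.split()
        found = [w for w in words if w in PRONOUNS]
        for word in words:
            if word not in PRONOUNS:
                for pronoun in found:
                    counts[word][pronoun] += 1
    return counts


def suggest_pronoun(counts, noun):
    """Return the single most frequent pronoun seen with `noun`, or None if unseen."""
    if not counts[noun]:
        return None
    return counts[noun].most_common(1)[0][0]


counts = pronoun_counts(CORPUS)
print(counts["investor"])
print(suggest_pronoun(counts, "investor"))
```

Notice that ‘her’ does appear with ‘investor’ once, but a model that only ever offers its top-ranked guess turns a 3-to-1 skew in the data into a ‘him’ suggestion every single time.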

Because men are over-represented in fields like finance, technology, and engineering, Gmail’s AI had concluded from its data that an investor or an engineer was a “him” and not a “her.” This could be even more concerning if the email’s recipient was trans or non-binary and preferred to be addressed by the pronoun ‘they.’

This isn’t the first time Google’s automated services have messed up. This time, however, the company seems to have learned from those past hiccups and caught the issue before the public complained.

In 2015, Google introduced an auto-tagging feature in Google Photos that promised to let users “search by what you remember about a photo, no description needed.” With millions of images uploaded to the system every day, each named by its uploader, Google used this data to train its system to auto-tag images. But that didn’t turn out well.

Software engineer Jacky Alciné pointed out to Google that its image recognition algorithms had tagged photos of his Black friend as “gorillas,” a term loaded with racist history.

Google Photos, y'all fucked up. My friend's not a gorilla. pic.twitter.com/SMkMCsNVX4

Jacky Alciné (@jackyalcine), June 29, 2015

Google said it was “appalled” at the mistake, apologized to Alciné, and promised to fix the problem. But after nearly three years of struggling with it, Google only managed to sweep the problem under the rug in January, by blocking its algorithm from identifying gorillas altogether.

In 2012, Google released a privacy policy under which it would aggregate data about users from its array of products, such as Gmail, YouTube, and Google Search, into a single profile. When people visited the “ad preferences” section of their profiles to see what Google knew about them, many women, especially in the tech industry, were in for a shock.

Google had listed many women as middle-aged men. From proxy data such as web search patterns in categories like “Computers and Electronics” or “Parenting,” its model had inferred that these users were 40-something males, because most people with that kind of search pattern were middle-aged men.

Sara Wachter-Boettcher wrote about the incident in her book Technically Wrong: Sexist Apps, Biased Algorithms, and Other Threats of Toxic Tech:

So if your model assumes, from what it has seen and heard in the past, that most people interested in technology are men, it will learn to code users who visit tech websites as more likely to be male. Once that assumption is baked in, it skews the results: the more often women are incorrectly labeled as men, the more it looks like men dominate tech websites—and the more strongly the system starts to correlate tech website usage with men.
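The self-reinforcing loop Wachter-Boettcher describes can be sketched as a toy simulation. Everything here is an assumption made for illustration, not a description of Google’s system: the 60/40 split of real visitors, the made-up rule that women get mislabeled as men more often the more male-skewed the model’s belief already is, and the hypothetical `simulate_feedback` function itself.

```python
def simulate_feedback(true_male_share, rounds, gain=2.0):
    """Toy model of a self-reinforcing labeling loop.

    `belief` is the model's estimate of the share of tech-site visitors who are men.
    Each round, women are mislabeled as men at a rate that grows with how far the
    belief exceeds 50/50 (a hypothetical rule scaled by `gain`), and the model then
    re-estimates its belief from those skewed labels.
    """
    true_female_share = 1.0 - true_male_share
    belief = true_male_share  # start from an accurate estimate
    history = [belief]
    for _ in range(rounds):
        # Clamp the mislabeling rate to the valid range [0, 1].
        mislabel_rate = min(1.0, max(0.0, gain * (belief - 0.5)))
        # Observed "male" share = real men + women wrongly labeled as men.
        belief = true_male_share + true_female_share * mislabel_rate
        history.append(belief)
    return history


for step, share in enumerate(simulate_feedback(0.6, 5)):
    print(f"round {step}: model thinks {share:.0%} of visitors are men")
```

Run it and the model’s estimate climbs every round, from an accurate 60 percent toward 100 percent, even though the real audience never changes. Setting `gain=0.0` switches the mislabeling off, and the estimate stays put at 60 percent.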

I’m not an expert in AI and machine learning, so I can’t comment authoritatively on whether it’s easy to remedy issues with biased automated systems. But it’s worrying to see that, rather than attempting to fix these problems, even mighty firms like Google prefer to shy away from them or turn them off entirely. Hopefully, the next few years will see experts in the field tackle bias so that powerful AI systems can be used by more people.

Stay up to date on tech trends and issues, follow TNW on Flipboard, Twitter and Instagram.
