In our ongoing series, High Contrast, we’re looking at how assistive technology can help people with disabilities overcome challenges in their lives, and the pioneers innovating in solutions to make everyday tools more accessible.

Earlier this year, Google teased a neural network-powered algorithm that could do speech-to-speech translation in real-time. As impressive as that might be, the app was mostly inaccessible to people with speech impediments. This is why the company’s AI division has been working on new software specifically aimed at those with verbal impairments.

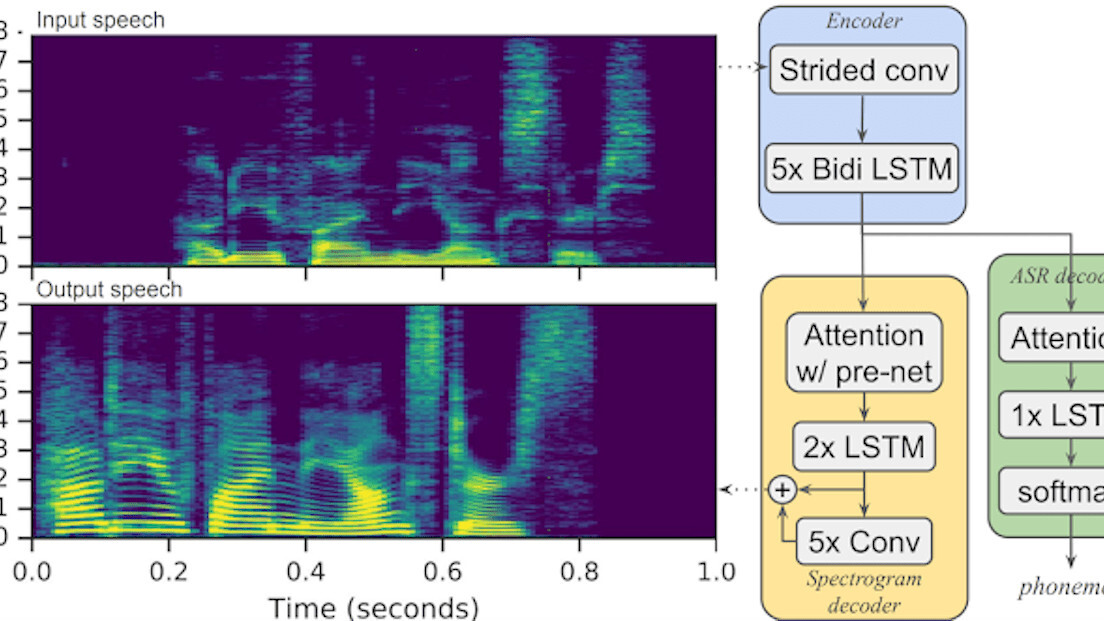

In a new blog post, the Big G presented a new speech-to-text technology specifically designed for people with verbal impairments and atypical speech patterns. Dubbed Parrotron, the software runs on a deep neural network trained to convert atypical speech patterns into fluent synthesized speech.

What’s particularly interesting is that the technology doesn’t rely on visual cues like lip movement.

To accomplish this, Google fed the neural network with a “corpus of [nearly] 30,000 hours that consists of millions of anonymized utterance pairs.”

The technology essentially reduces “the word error rate for a deaf speaker from 89 percent to 25 percent,” but Google hopes ongoing research will improve the results even further.

Indeed, the researchers have also shared a couple of demo videos to show the progress they have made. You can check them out below:

For a more detailed breakdown of the technology behind Parrotron, head to Google’s blog here. The full research has been posted on ArXiv, while you can find more examples of Parrotron in action in this GitHub repository.

Get the TNW newsletter

Get the most important tech news in your inbox each week.