A new artwork has inverted the application of predictive policing. Instead of forecasting crimes committed by civilians, the project predicts which civilians will be killed by police.

The future victims are memorialized on a website called Future Wake. None of them, however, are real people.

Each identity and story is generated by AI. Yet the predictions about their deaths are based on historical events.

Future Wake was funded by Mozilla and created by two artist-technologists called Oz and Tim. They hope the project stirs debate about both predictive policing and fatal encounters with cops.

“By turning around predictive policing, we also want to turn around how data is talked about and how these tragedies are talked about,” Oz told TNW.

Police data

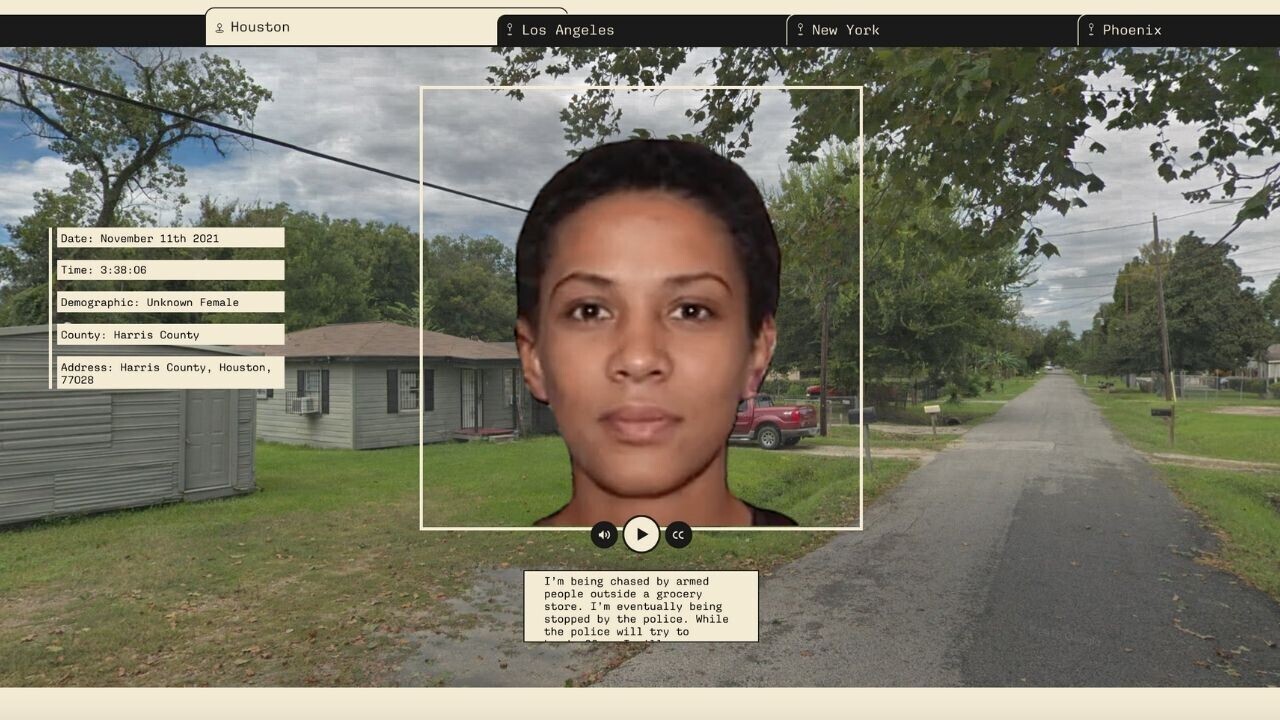

Future Wake forecasts where, when, and how the next fatal encounter with the police will happen.

The predictive algorithms were trained on information from the Fatal Encounters and Mapping Police Violence datasets, which document police killings in the US.

Demographic, location, and event descriptions were extracted from the data to build predictive models for five major US cities.

The training data covered more than 30,000 victims from the past 21 years. Around a third (32%) of the victims were white, while 22% were Black and 13% were Hispanic. Their ages ranged from under one year old to 102.

Oz was surprised by the quantity and diversity of the data:

I’m sure if you were to try to find your own demographic doppelganger you could find it… Everyone is represented in the dataset; it’s just some populations are over-represented.

This data trained the algorithms to predict where, when, and how the next fatal encounter with police will happen.

Data with a human face

The victims’ stories were generated by applying GPT-2 to descriptions of past deaths.

To emphasize that the victims are more than just statistics, each prediction was given a deepfake face and a voice donated by a real person. Visitors to the site can see and hear the fictional victim describe the story of their death.

“We want to highlight that these statistical generations reflect real people who are living now,” said Oz. “It’s hard to empathize with a number; it’s easier to empathize with a human face.”

Giving each victim a human face and voice makes the stories more impactful. Oz and Tim hope this illustrates the urgent need for tighter restrictions on police use of force.

They acknowledge, however, that their predictive models are inherently flawed. Their algorithms can only identify patterns in historical and often biased data. Each prediction could be wrong — or even dangerous.

In these flaws, the models mirror the risks of real-world predictive policing.
