Those of us in America are very familiar with the right to free speech; we take the First Amendment very seriously. But when global platforms like Facebook and Google are charged with protecting the value of free speech, even in places where it’s more common to be mindful of hurtful or embarrassing speech, things can get tricky.

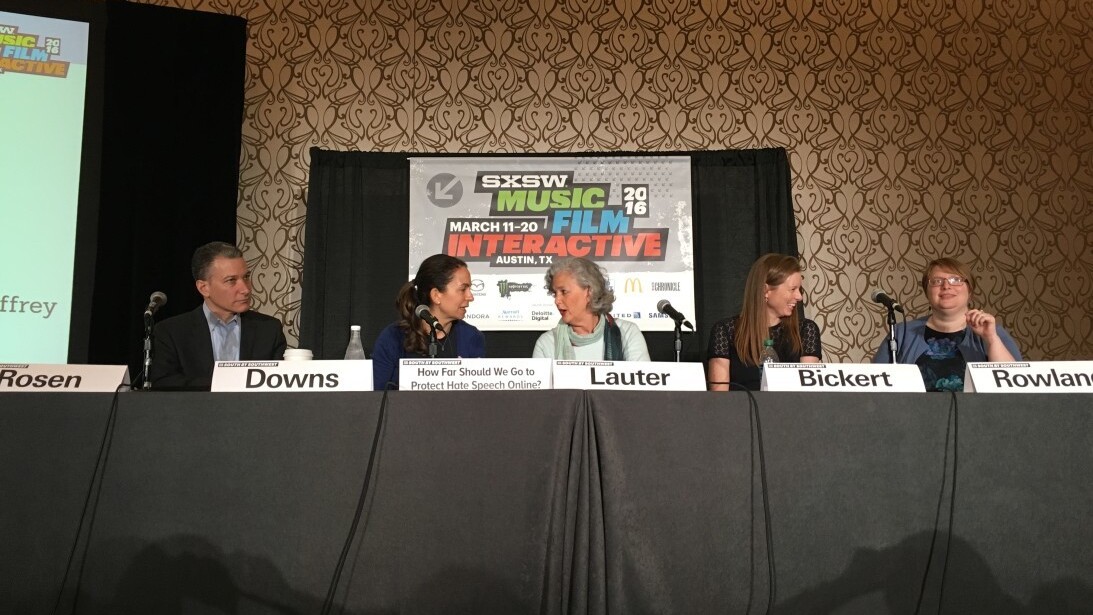

Drawing the line was the subject of a panel at SXSW’s Online Harassment Summit, which devoted an entire day’s worth of panels to understanding the ethics and morals surrounding the Internet and its harboring of your friendly neighborhood troll. But ACLU staff attorney Lee Rowland began the conversation by nailing down what could be classified as hate speech.

“It is speech that is fully protected by the constitution but you don’t think it should be,” she said.

Private platforms like Facebook do have latitude in where they draw the line, based on user safety and comfort. Head of Global Policy Management Monika Bickert said that the company sifts through more than 1 million reports per day of content on the platform that violates the company’s Terms of Service.

She admitted that the company does make mistakes in determining when speech violates those criteria, something she chalked up to the volume of material, but said that Facebook’s overall mission is to ensure people feel comfortable on the site.

She added that Facebook is taking crucial steps to promote “counter speech,” which can include anything from speaking out against harassment online to waging “Like attacks” or positively focused message campaigns, rather than outright suppressing a voice.

“We have been working alongside Google to empower people in the community to stand up,” she said. “We’re working with groups like Affinis Labs to run hackathons to talk about hate speech and those that combat it. We’re also providing tools through the site that allow people to, in an easy way, contact someone about something that’s inappropriate.”

The same tactics are employed at YouTube, said Global Head of Public Policy and Government Relations Juniper Downs. The company struggles with the same volume problem that Facebook does — in 2014, the platform removed 14 million videos for policy violations — and relies heavily on community moderation and flagging in order to get overtly aggressive or violent speech removed. But counter speech is something the company is working on as part of the arsenal, in part because it’s much more attractive than simply squashing bad actors.

“We also are thinking hard about how to create more transparency, including a robust appeals process,” she added. “We invest heavily in a robust counter-notice that people are educated about.”

The possibility of an increase in hate speech takedowns concerns Jeffrey Rosen, the lone male on the panel and a well-known academic and writer. He argued that the protection of free speech on the Internet is of utmost importance, because platforms run the risk of kowtowing to other cultures and complying in a way that could splinter the online world into areas with varying degrees of speech acceptance.

“It’s not speech that is defamatory or speech that is a true threat or incites violence,” he said. “It’s about offending a user’s dignity.”

This, Rosen points out, is the core problem with finding and destroying hate speech: the sacrifice of liberty in the name of security could mean that, online, we lose both.

So when people go online to speak ill about a religion, a race, or a gender, those controlling the environment have to be careful to not throw the baby out with the bathwater.

It’s not a neat or efficient conversation to have, but companies are still trying to strike the balance between creating a safe space and creating a free space. And it’s also the symptom of a wider issue that extends well into our offline lives.

“The problem is not the hateful posts,” Bickert said. “The problem is the underlying hate.”

