“Deepfake” is the name given to videos created using deep learning, a branch of artificial intelligence. Also referred to as “face-swapping”, the process involves feeding a source video of one person into a computer, followed by multiple images and videos of another person.

The neural network then learns the movements and expressions of the person in the source video so that the other person’s face can be mapped onto it, making it look as if they are delivering the speech or carrying out the act.

This practice was first used extensively in the production of fake pornography in late 2017, when the faces of famous female celebrities were swapped in. Research has consistently shown that pornography leads the way in technological adoption and advancement when it comes to communication technologies, from the Polaroid camera to the internet.

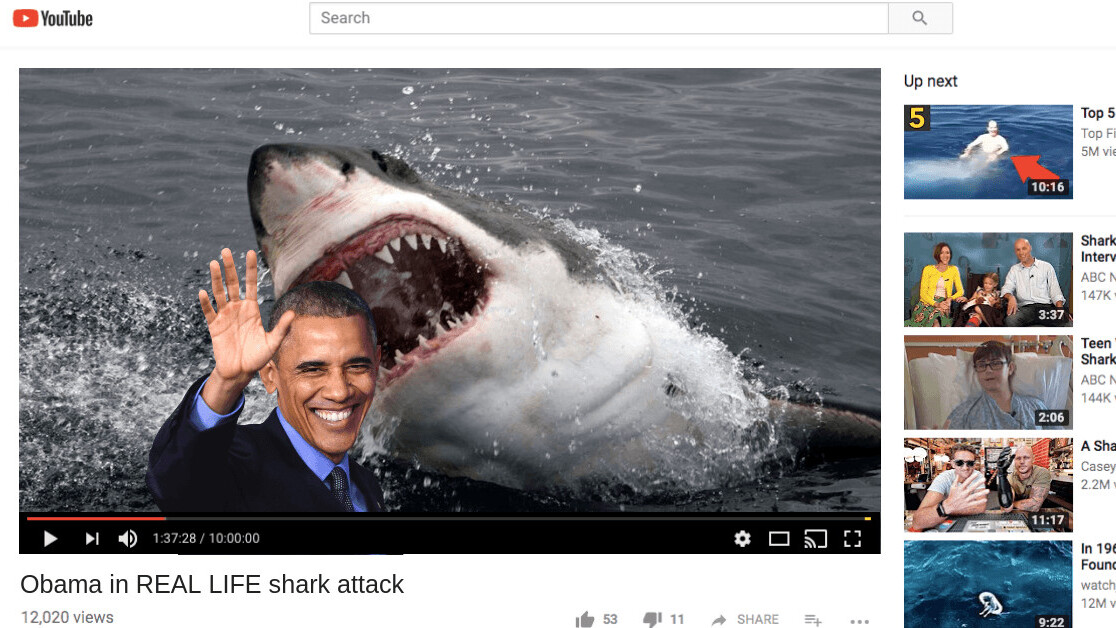

Deepfaking has also been used to manipulate and subvert political speeches: early experiments with this technology by researchers at the University of Washington used speeches by Barack Obama as source material, and the results appear plausible at first glance.

Prior to the emergence of AI-enabled face-swapping in pornography, similar principles and techniques had already been well tried and tested in filmmaking, although through very labour-intensive and lengthy processes involving huge teams of film production specialists, equipment and software.

Take, for example, Furious 7, in which Paul Walker, an actor playing a lead role, died during production. Post-production experts Weta Digital meticulously completed Walker’s performance using CGI and advanced compositing techniques.

Now, however, deep learning and machine vision technologies have advanced to such a stage that the relevant software has become publicly accessible and can be used on a normal computer.

As a consequence, fears have been justifiably expressed. There are concerns that deepfakes will soon become widespread, saturating all of our everyday encounters with moving images, to the point where we will no longer be able to discern which videos are real and which are fake.

Looking back through history at the moments when each preceding new medium was introduced or popularized – from photography to the world wide web – is useful here, because there has always been a period of uncertainty and confusion as audiences grapple with the apparent blurring of the lines between reality and fiction.

The recent emergence of the “deepfakes” phenomenon can be understood within this media continuum. It is simply the latest in a lineage of examples throughout history where the interrelationship between technologies and illusion is tightly woven.

A history of fakery

Take for example The Arrival of a Train at La Ciotat, one of the first pieces of moving image cinematography, created by Auguste and Louis Lumière. Contemporary news reports described people running screaming from the screen as the fast steam train approached them during its first public screening in Paris in 1895.

Or the infamous Cottingley Fairies photographs, taken in 1917, which were believed to be authentic by some for over six decades. The perpetrators finally admitted they were fake in the 1980s.

Then there’s the 1938 radio play broadcast of The War of the Worlds, the first radio play to use the method of fictional news reporting. Newspapers reported that thousands of Americans fled their homes on listening to the play. Apparently, they believed a Martian invasion was unfolding.

In 1999, during the advent of the internet, research showed that audiences of The Blair Witch Project genuinely believed the reports of missing film students represented in a documentary and the accompanying website to be true.

2007’s The Truth about Marika was a mixed reality transmedia story of a missing person, Marika. Reports of her disappearance were broadcast on the Swedish Public Service network, leading some sections of the audience to believe this to be real.

The AI media age

While these instances may beggar belief today, if you think about them in the context of technological developments they become more understandable. All were part of watershed moments in the evolution of media forms with much in common – they all situated a fictional story in what was originally trusted to be a factual context. It is also true that all of the associated accounts in the media were, to some extent, exaggerated.

Hindsight shows us that all of these examples are representative of a transitional moment. These types of projects only occur once, at the advent of the new media form. After this point, audiences become literate and are able to effectively discern between fact and fiction – they don’t get caught out twice. This is a cyclical phenomenon of which “deepfakes” are simply the most recent manifestation. So perhaps current fears are overstated.

But it is also true that due to the rapidity of technological innovation, the potential exponential propagation of video across multiple online spaces, and the potential scope for exploitation and subversion, we find ourselves at a fairly unique moment. This time, the distinction between what is real and what is fake could actually become imperceptible. At such a point, all screen-based media will be assumed to be fake.

And so while research advances into counter technologies and deepfake detection, platforms will have to carefully manage content. Despite this, current modes of political delivery and news reporting may well become entirely unreliable. New ways of communicating effectively will no doubt have to evolve.

One thing is certain: deepfakes are symptomatic of modern media entering an era of artificial intelligence. They will take their place in media history as an intrinsic facet of the post-truth, fake news landscape that characterizes our current moment.

This article is republished from The Conversation by Sarah Atkinson, Senior Lecturer in Digital Cultures, King’s College London under a Creative Commons license. Read the original article.
