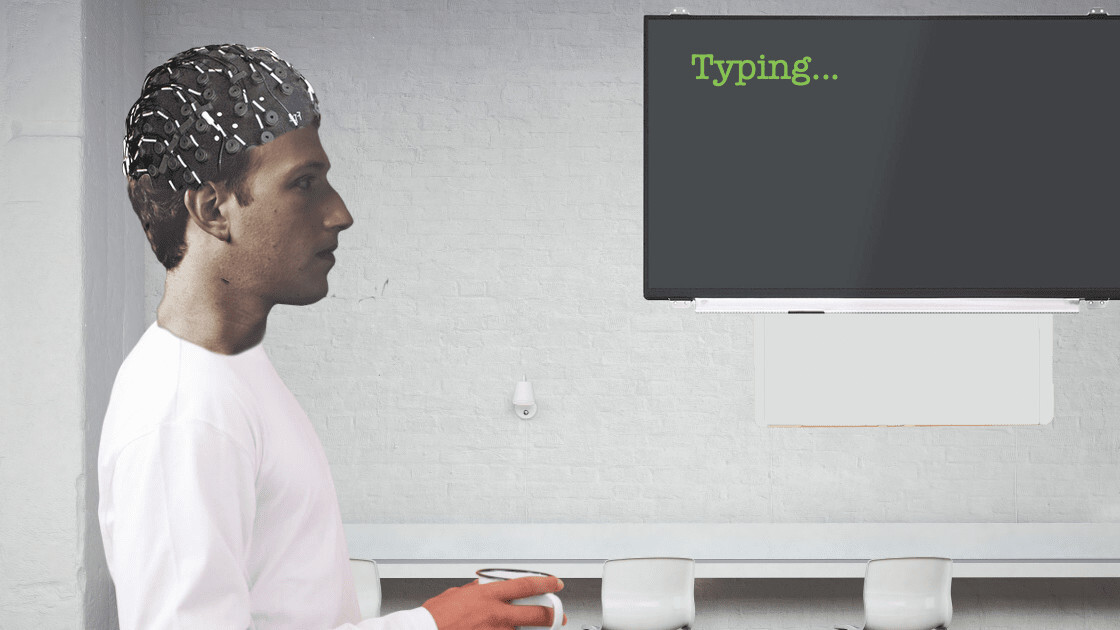

At its developer conference in 2017, Facebook announced plans to develop a brain-computer interface (BCI) that would let you type just by thinking. Now, researchers at the University of California, San Francisco (UCSF) working under this program have published a study today reporting that their algorithm was able to detect spoken words from brain activity in real time.

The team used high-density electrocorticography (ECoG) arrays placed on the brains of three epilepsy patients to record their brain activity. It then asked the patients simple questions and had them answer aloud. The researchers said the algorithm recorded brain activity while the patients spoke, and that the model decoded these words with accuracy as high as 76 percent.

Facebook said it doesn’t expect this system to be available anytime soon, but it could eventually make interacting with AR and VR hardware much easier:

> We don’t expect this system to solve the problem of input for AR anytime soon. It’s currently bulky, slow, and unreliable. But the potential is significant, so we believe it’s worthwhile to keep improving this state-of-the-art technology over time. And while measuring oxygenation may never allow us to decode imagined sentences, being able to recognize even a handful of imagined commands, like “home,” “select,” and “delete,” would provide entirely new ways of interacting with today’s VR systems — and tomorrow’s AR glasses.

Earlier this month, Elon Musk’s Neuralink also announced a project that will let you control your iPhone via a device attached to your brain. While these devices may not hit the market for a couple of years, this will be an exciting space to watch.
