Yann LeCun, Facebook’s world-renowned AI guru, had some problems with an article written about his company yesterday. So he did what any of us would do: he went on social media to air his grievances.

Only, he didn’t take the fight to Facebook as you’d expect. Instead, over a period of hours, he engaged in a back-and-forth with numerous people on Twitter.

No it doesn't.

Apparently, one can write about AI fairness without paying attention to journalistic fairness.

One of those cases where one knows the reality, reads an article about it, and go "WTF?"

— Yann LeCun (@ylecun) March 12, 2021

Can we just stop for a moment and appreciate that, on a random Thursday in March, the father of Facebook’s AI program gets on Twitter to argue about a piece from journalist Karen Hao, an AI reporter for MIT’s Technology Review?

Hao wrote an incredible long-form feature on Facebook’s content moderation problem. The piece is called “How Facebook got addicted to spreading misinformation,” and the sub-heading is a doozy:

The company’s AI algorithms gave it an insatiable habit for lies and hate speech. Now the man who built them can’t fix the problem.

I’ll quote just a single paragraph from Hao’s article here that captures its essence:

Everything the company does and chooses not to do flows from a single motivation: Zuckerberg’s relentless desire for growth … [Facebook AI lead Joaquin Quiñonero Candela’s] AI expertise supercharged that growth. His team got pigeonholed into targeting AI bias, as I learned in my reporting, because preventing such bias helps the company avoid proposed regulation that might, if passed, hamper that growth. Facebook leadership has also repeatedly weakened or halted many initiatives meant to clean up misinformation on the platform because doing so would undermine that growth.

There’s a lot to unpack there, but the gist is that Facebook is driven by the singular goal of “growth.” The same could be said of cancer.

LeCun, apparently, didn’t like the article. He hopped on the app that Jack built and shared his thoughts, including what appear to be personal attacks questioning Hao’s journalistic integrity:

Yes, that's why your piece was a surprise to me and to many of my colleagues.

From my point of view, you piece is full of factual errors and incorrect assumptions of ill intent.

What happened to you?

— Yann LeCun (@ylecun) March 12, 2021

His umbrage yesterday extended to blaming talk radio and journalism for his company’s woes:

More importantly, increased polarization is a uniquely American phenomenon (which started before FB was around)

Many other countries have seen polarization *decrease* in the last decade. And they use FB just as much.

I blame cable news and talk radio.

— Yann LeCun (@ylecun) March 12, 2021

Really Yann? Increased polarization via disinformation is uniquely American? Have you met my friend “the reason why every single war ever has been fought in the history of ever?”

I digress.

This wouldn’t be the first time he’s taken to Twitter to argue in defense of his company, but there was more going on yesterday than meets the eye. LeCun’s tirade began with a tweet announcing new research on fairness from Facebook AI Research (FAIR).

According to Hao, Facebook coordinated the release of the paper to coincide with the Tech Review article:

People have been asking: did FB publish this in response to your story? No, let me clarify. They wanted this paper to be in my story & gave me an early draft. Then in anticipation of it being in my story, they timed its publication with my piece, hoping the two would complement. https://t.co/pST8LYsTmG

— Karen Hao (@_KarenHao) March 11, 2021

Based on the evidence, it appears Facebook was absolutely gobsmacked by Hao’s reporting. It seems the social network was expecting a feature on the progress it’s made in shoring up its algorithms, detecting bias, and combating hate speech. Instead, Hao laid bare the essential problem with Facebook: it’s a spiderweb.

Those are my words, not Hao’s. What they wrote was:

Near the end of our hour-long interview … [Quiñonero] began to emphasize that AI was often unfairly painted as “the culprit.” Regardless of whether Facebook used AI or not, he said, people would still spew lies and hate speech, and that content would still spread across the platform.

If I were to rephrase that for impact, I might say something like “regardless of whether our company pours gasoline on the ground and offers everyone a book of matches, we’re still going to have forest fires.” But, again, those are my words.

And when I say that Facebook is a spiderweb, what I mean is: spiderwebs are good, until they become too far-reaching. For example, if you see a spiderweb in the corner of your barn, that’s great! It means you’ve got a little arachnid warrior helping you keep nastier bugs out. But if you see a spiderweb covering your entire city, like something out of “Kingdom of the Spiders,” that’s a really bad thing.

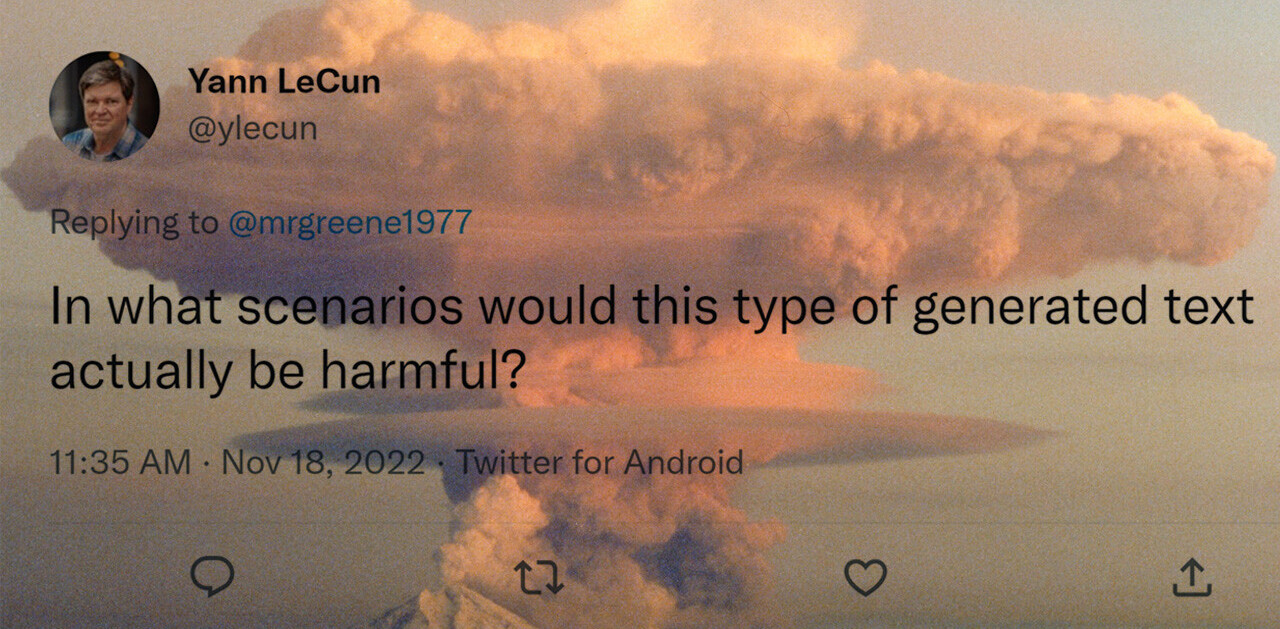

And it’s evident that LeCun knows this, because his entire Twitter spiel yesterday was just one giant admission that Facebook is beyond anyone’s control. Here are a few tidbits from his tweets on the subject:

… When it became clear that such things were happening, FB took corrective [measures] quickly…..

certainly not quickly enough to prevent some bad things from happening in the meantime (hence those UN reports).

But taking those corrective measure is neither instantaneous, nor easy, nor cheap….

When the Myanmar gov started disseminating hateful disinfo against Rohingyas, FB took down their sock-puppet accounts, and hired Burmese-speaking moderators….

But the volume was such that AI systems had to be developed to automate is as much as possible.

So FB developed the world’s best Burmese-English translation system, so that Eng-speaking moderator could help….

Simultaneously, it developed hateful and violent speech detectors for Burmese. But data was scarce.

So in the last 2 years, FB developed multilingual systems that can detect hate speech in any language and don’t require lots of training data.

This all takes expertise, time, money, and in this case, the latest AI breakthroughs.

Interesting. LeCun’s core assertion seems to be that stopping misinformation is really hard. Well, that’s true. There are a lot of things that are really hard that we haven’t figured out.

As my colleague Matthew Beedham pointed out in today’s Shift newsletter, building a production automobile that’s fueled by a nuclear reactor in its trunk is really hard.

But, as the scientists working on exactly that for Ford realized decades ago, nuclear technology simply isn’t advanced enough to safely power consumer production vehicles. Nuclear’s great for aircraft carriers and submarines, but not so much for the family station wagon.

I’d argue that Facebook’s impact on humanity is almost certainly far, far more detrimental and wide-reaching than a measly little nuclear meltdown in the trunk of a Ford Mustang. After all, only 31 people died as a direct result of the Chernobyl nuclear meltdown, and experts estimate that at most around 4,000 were indirectly affected (health-wise, anyway).

Facebook has 2.45 billion users. And every time its platform creates or exacerbates a problem for one of those users, its answer is one version or another of “we’ll look into it.” The only place this kind of reactive response to technology imbalance actually serves the public is in a game of Whac-A-Mole.

If Facebook were a nuclear power plant trying to fix a leak that sent nuclear waste into our drinking water every time someone misused the power grid, we’d shut it down until it plugged the leaks.

But we don’t shut Facebook down because it’s not really a business. It’s a trillion-dollar PR machine for a self-governing entity. It’s a country. And we need to either sanction it or treat it as a hostile force until it does something to prevent misuses of its platform instead of only reacting when the poop hits the fan.

And, if we can’t keep the nuclear waste out of our drinking water, or build a safe car with a nuclear reactor in its trunk, maybe we ought to just shut the plants or scuttle the plans until we can. It worked out okay for Ford.

Maybe, just maybe, the reason why journalists like Hao and me, and politicians around the globe, can’t offer solutions to the problems Facebook has is because there aren’t any.

Perhaps hiring the smartest AI researchers on the planet and surrounding them with the world’s greatest PR machine isn’t enough to overcome the problem of humans poisoning each other for fun and profit on a giant unregulated social network.

There are some problems you can’t just throw money and press releases at.

My hat’s off to Karen Hao for such excellent reporting and to the staff of Technology Review for speaking truth to power.
