Elliptic Labs has released a new SDK that brings its ultrasound technology for touchless gesture control to Android, allowing manufacturers and developers to adopt the interface for their smartphones, tablets and apps.

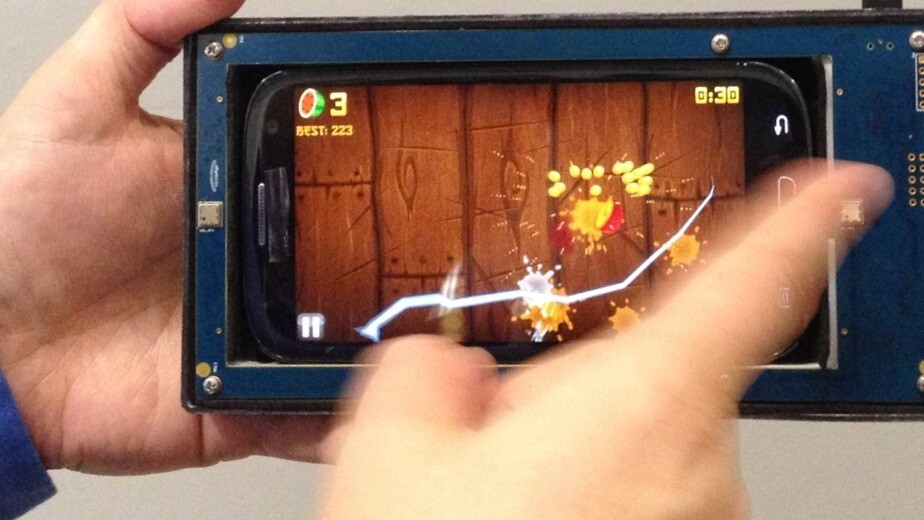

The company announced the news at the CEATEC show in Japan alongside a demonstration of a phone controlled by waving a hand.

“We’re currently working with OEM manufacturers to add this to smartphones that will appear in the market in 2014,” Elliptic Labs CTO Haakon Bryhni said in an interview.

Elliptic Labs says it can outfit a smartphone with its technology using just a couple of transceivers and extra microphones. Android OEMs are expected to release the first handsets with it next year.

The majority of touchless interfaces currently on the market, such as Kinect and Leap Motion, are camera-based, but Elliptic Labs touts its ultrasound solution as working outside of a field of view. It should also work in both darkness and direct sunlight.

Elliptic Labs has already developed a Windows 8 version of its gesture interface, but the small footprint of its technology suits it for mobile.

The gesture interface works with legacy applications by translating gestures into touch control, but the company’s API will open up custom interactions for developers and manufacturers.

While it certainly sounds cool to implement ultrasound for touchless smartphone control, developers and OEMs need to come up with creative uses for these next-generation interfaces. Elliptic Labs’ tech demo is intriguing, but I’m not sold on how much of an improvement it is over existing touchscreens.
