With Google, Tesla and Uber all trying to get driverless cars on the road, the streets of the near future will almost certainly be ruled by robots.

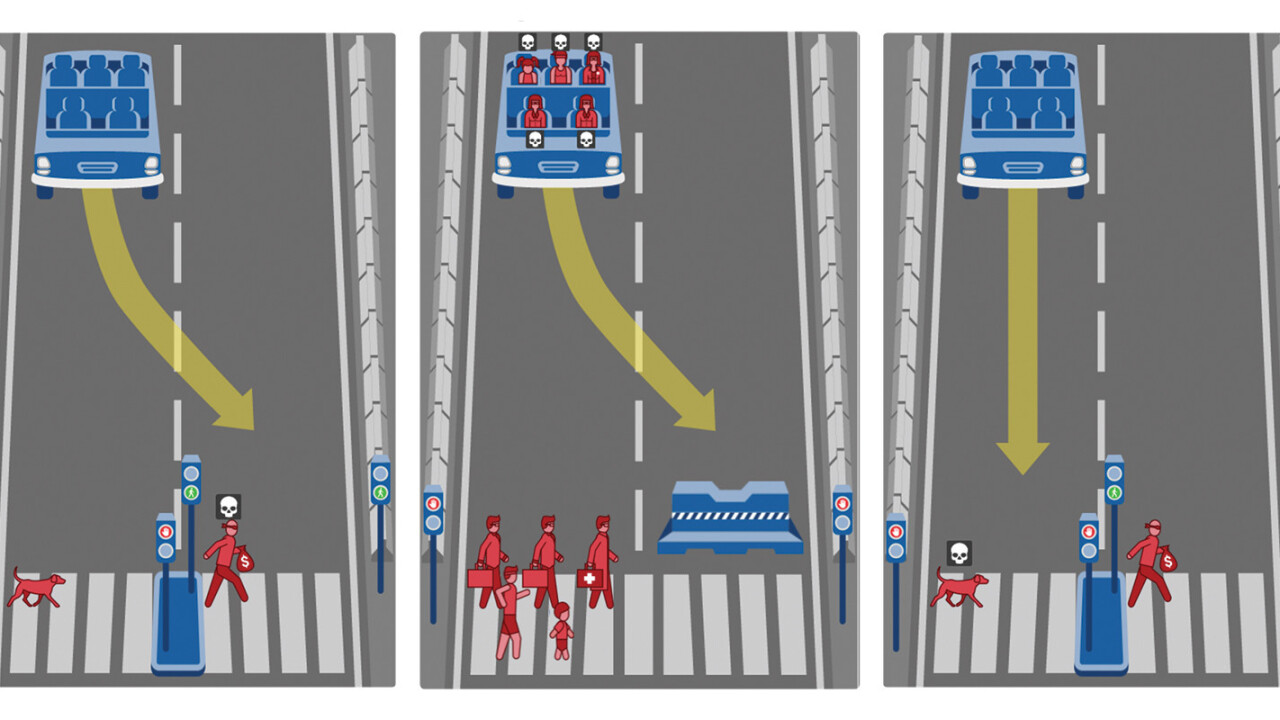

However, there is a world of ethical questions to explore before every vehicle on the road is allowed to be driverless. To start answering them, the MIT Media Lab recently developed the Moral Machine.

The Machine consists of a series of ethical dilemmas, most of which made me uneasy as I answered them. For example, what should a driverless car with no passengers do when it must hit either a dog that is flouting a stoplight or a criminal who is obeying it?

Upon finishing the test, you’re presented with the results of your choices compared to those of other users.

Apparently, I favored saving female doctors over ‘large women’. I also cared more about saving a greater number of lives than about protecting passengers.

Try it yourself and tell us your results in the comments below.
