This article is part of Demystifying AI, a series of posts that (try to) disambiguate the jargon and myths surrounding AI.

It’s very easy to misread and overestimate achievements in artificial intelligence. And nowhere is this more evident than in the domain of human language, where appearances can falsely hint at in-depth capabilities. In the past year, we’ve seen any number of companies giving the impression that their chatbots, robots and other applications can engage in meaningful conversations as a human would.

You just need to look at Google’s Duplex, Hanson Robotics’ Sophia and numerous other stories to become convinced that we’ve reached a stage where artificial intelligence can manifest human behavior.

But mastering human language requires much more than replicating human-like voices or producing well-formed sentences. It requires common sense, understanding of context and creativity, none of which current AI systems possess.

To be sure, deep learning and other AI techniques have come a long way toward bringing humans and computers closer to each other. But there’s still a huge gap between the world of circuits and binary data and the mysteries of the human brain. And unless we understand and acknowledge the differences between AI and human intelligence, we will be disappointed by unmet expectations and miss the real opportunities that advances in artificial intelligence provide.

To understand the true depth of AI’s relation with human language, we’ve broken down the field into different subdomains, going from the surface to the depth.

Speech to text

Voice transcription is one of the areas where AI algorithms have made the most progress. In all fairness, this shouldn’t even be considered artificial intelligence, but the very definition of AI is a bit vague, and since many people might wrongly interpret automated transcription as a manifestation of intelligence, we decided to examine it here.

The older iterations of the technology required programmers to go through the tedious process of discovering and codifying the rules for classifying voice samples and converting them into text. Thanks to advances in deep learning and deep neural networks, speech-to-text has taken huge leaps and has become both easier and more precise.

With neural networks, instead of coding the rules, you provide plenty of voice samples and their corresponding text. The neural network finds the common patterns among the pronunciation of words and then “learns” to map new voice recordings to their corresponding texts.
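A real speech recognizer is a deep network trained on spectrograms, well beyond the scope of this post. As a minimal sketch of the learn-from-examples idea only, the toy below assumes each recording has already been reduced to a short numeric feature vector (the vectors and words are made up). It averages the examples for each word and labels a new recording with the nearest average. No rules about pronunciation are written by hand.

```python
import math

# Hypothetical training data: each "recording" is reduced to a tiny feature
# vector (real systems use spectrogram frames), paired with its transcript.
TRAINING_SAMPLES = [
    ([0.9, 0.1, 0.2], "yes"),
    ([0.8, 0.2, 0.1], "yes"),
    ([0.1, 0.9, 0.7], "no"),
    ([0.2, 0.8, 0.9], "no"),
]


def train(samples):
    """Learn one centroid (average feature vector) per word from labeled examples."""
    sums, counts = {}, {}
    for features, word in samples:
        acc = sums.setdefault(word, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[word] = counts.get(word, 0) + 1
    return {word: [v / counts[word] for v in acc] for word, acc in sums.items()}


def transcribe(model, features):
    """Map a new recording to the word whose centroid is closest (Euclidean distance)."""
    return min(model, key=lambda word: math.dist(model[word], features))


model = train(TRAINING_SAMPLES)
print(transcribe(model, [0.85, 0.15, 0.25]))  # yes
```

Adding a word to this toy means adding labeled recordings, not writing new rules. And nothing in it knows what "yes" means.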

These advances have enabled many applications to offer real-time transcription to their users.

There are plenty of uses for AI-powered speech-to-text. Google recently presented Call Screen, a feature on Pixel phones that handles scam calls and shows you a real-time transcript of what the caller is saying. YouTube uses deep learning to provide automated closed captioning.

But the fact that an AI algorithm can turn voice to text doesn’t mean it understands what it is processing.

Speech synthesis

The flip side of speech-to-text is speech synthesis. Again, this really isn’t intelligence because it has nothing to do with understanding the meaning and context of human language. But it is nonetheless an integral part of many applications that interact with humans in their own language.

Like speech-to-text, speech synthesis has existed for quite a long time. I remember seeing computerized speech synthesis for the first time at a laboratory in the 90s.

ALS patients who have lost their voice have been using the technology for decades to communicate by typing sentences and having a computer read them aloud. Blind people also use the technology to read text they can’t see.

However, in the old days, the voice generated by computers did not sound human, and the creation of a voice model required hundreds of hours of coding and tweaking. Now, with the help of neural networks, synthesizing human voice has become less cumbersome.

One approach uses generative adversarial networks (GANs), an AI technique that pits neural networks against each other to create new data. First, a neural network ingests numerous samples of a person’s voice until it can tell whether a new voice sample belongs to the same person.

Then, a second neural network generates audio data and runs it through the first one to see if it validates the sample as belonging to the subject. If it doesn’t, the generator adjusts its output and runs it through the classifier again. The two networks repeat the process until the generator produces samples that sound natural.
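A voice model is far too large to show here, but the adversarial loop itself fits in a few lines. The sketch below is a toy, not a speech system: the "voice" is a single number drawn from a normal distribution with an assumed mean of 1.5, the generator can only shift random noise by a learned offset, and the discriminator is one logistic unit. As is usual for GANs, the two players are updated in alternating steps from the start rather than strictly one after the other.

```python
import math
import random

REAL_MEAN = 1.5       # assumed stand-in for the "voice" the generator must imitate
START_OFFSET = -1.5   # generator starts far from the real data
LEARNING_RATE = 0.05
BATCH_SIZE = 128
STEPS = 3000


def sigmoid(t):
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def train_toy_gan(seed=0):
    """Alternate discriminator and generator updates; return the learned offset."""
    rng = random.Random(seed)
    mu = START_OFFSET  # generator: fake sample = mu + standard normal noise
    w, b = 0.0, 0.0    # discriminator: D(x) = sigmoid(w * x + b), "probability x is real"

    for _ in range(STEPS):
        real = [rng.gauss(REAL_MEAN, 1.0) for _ in range(BATCH_SIZE)]
        fake = [mu + rng.gauss(0.0, 1.0) for _ in range(BATCH_SIZE)]

        # Discriminator step: minimize -log D(real) - log(1 - D(fake)).
        grad_w = grad_b = 0.0
        for x in real:
            d = sigmoid(w * x + b)
            grad_w -= (1.0 - d) * x
            grad_b -= 1.0 - d
        for x in fake:
            d = sigmoid(w * x + b)
            grad_w += d * x
            grad_b += d
        w -= LEARNING_RATE * grad_w / BATCH_SIZE
        b -= LEARNING_RATE * grad_b / BATCH_SIZE

        # Generator step: minimize -log D(fake) so its samples get judged "real".
        # Since fake = mu + noise, d(fake)/d(mu) = 1 and the gradient is -(1 - D) * w.
        grad_mu = 0.0
        for x in fake:
            grad_mu -= (1.0 - sigmoid(w * x + b)) * w
        mu -= LEARNING_RATE * grad_mu / BATCH_SIZE

    return mu


print(f"learned offset: {train_toy_gan():.2f} (real mean {REAL_MEAN})")
```

The generator never looks at the real data; it improves only through the discriminator's verdicts. If the loop behaves as intended, the learned offset settles near `REAL_MEAN`. A real voice model replaces both one-parameter players with deep networks over audio, but the tug-of-war is the same.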

There are several websites that enable you to synthesize your own voice using neural networks. The process is as simple as providing enough samples of your voice, far fewer than older generations of the technology required.

There are many good uses for this technology. For instance, companies are using AI-powered voice synthesis to enhance their customer experience and give their brand its own unique voice.

In the field of medicine, AI is helping ALS patients regain their true voice instead of using a computerized one. And of course, Google is using the technology in its Duplex feature to place calls on behalf of users in a natural-sounding voice.

AI speech synthesis also has its evil uses. Namely, it can be used for forgery, to place calls with the voice of a targeted person, or to spread fake news by imitating the voice of a head of state or high-profile politician.

I guess I don’t need to remind you that if a computer can sound like a human, it doesn’t mean it understands what it says.

Processing human language commands

This is where we break through the surface and step into the depth of AI’s relationship with human language. In recent years, we’ve seen great progress in the domain of natural language processing (NLP), again thanks to advances in deep learning.

NLP is a subset of artificial intelligence that enables computers to discern the meaning of written words, whether after they convert speech to text, receive them through a text interface such as a chatbot, or read them from a file. They can then use the meaning behind those words to perform a certain action.

But NLP is a very broad field and can involve many different skills. In its simplest form, NLP helps computers carry out commands given to them in text.

Smart speakers and smartphone AI assistants use NLP to process users’ commands. Basically, this means the user doesn’t have to stick to a strict sequence of words to trigger a command and can use different variations of the same sentence.
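To illustrate how one command can be phrased many ways, the toy matcher below scores an utterance against a few example phrasings per intent using word overlap (Jaccard similarity). The intent names, example phrases and threshold are hypothetical; commercial assistants typically use trained statistical models rather than this kind of lookup, but the effect is similar in spirit: no exact word sequence is required.

```python
import re

# Hypothetical intents, each with a few example phrasings.
INTENT_EXAMPLES = {
    "get_weather": [
        "what is the weather like",
        "will it rain today",
        "tell me the forecast",
    ],
    "set_alarm": [
        "set an alarm for seven",
        "wake me up at six",
        "set my alarm",
    ],
}


def tokens(text):
    """Lowercase the text and return its set of words."""
    return set(re.findall(r"[a-z']+", text.lower()))


def jaccard(a, b):
    """Share of words two sets have in common (0.0 to 1.0)."""
    return len(a & b) / len(a | b) if a | b else 0.0


def match_intent(utterance, threshold=0.2):
    """Return the best-matching intent, or None if nothing is close enough."""
    words = tokens(utterance)
    best_intent, best_score = None, 0.0
    for intent, examples in INTENT_EXAMPLES.items():
        for example in examples:
            score = jaccard(words, tokens(example))
            if score > best_score:
                best_intent, best_score = intent, score
    return best_intent if best_score >= threshold else None


print(match_intent("Is it going to rain today?"))    # get_weather
print(match_intent("Please set an alarm for 7"))     # set_alarm
print(match_intent("What is the meaning of life?"))  # get_weather (a false match)
```

The last line shows the limits the article describes: "What is the meaning of life?" shares three words with "what is the weather like", so the matcher confidently routes it to the weather intent.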

Elsewhere, NLP is one of the technologies that Google’s search engine uses to understand the broader meaning of users’ queries and return results that are relevant to the query.

NLP is also proving very useful in analytics tools such as Google Analytics and IBM Watson, where users can query their data with natural language sentences instead of writing complicated queries.

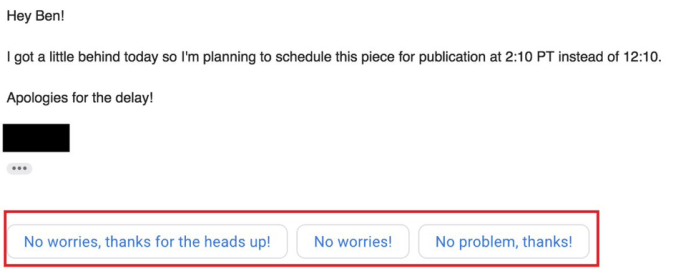

An interesting use of NLP is Gmail’s Smart Reply feature. Google examines the content of an email and presents suggestions for answers.

The feature is limited in scope and only works for emails where short answers make sense, such as when Google’s AI algorithms detect a scheduled meeting or when the sender expects a simple “Thank you” or “I’ll take a look.” But sometimes, it comes up with pretty neat answers that can save you a few seconds of typing, especially if you’re on a mobile device.

But just because a smart speaker or an AI assistant can respond to different ways of asking about the weather, it doesn’t mean it fully understands human language.

Current NLP is really only good at understanding sentences that have very clear meanings. AI assistants are becoming better at carrying out basic commands, but if you think you can engage in meaningful conversations and discuss abstract topics with them, you’re in for a big disappointment.

Speaking in human language

The flip side of NLP is natural language generation (NLG), the AI discipline that enables computers to generate text that is meaningful to humans.

This field too has benefited from advances in AI, particularly in deep learning. The output of NLG algorithms can either be displayed as text, as in a chatbot, or converted to speech through voice synthesis and played for the user, as smart speakers and AI assistants do.

In many cases NLG is closely tied to NLP, and like NLP it is a vast field that can involve different levels of complexity. The basic levels of NLG have some very interesting uses. For instance, NLG can turn charts and spreadsheets into textual descriptions. AI assistants such as Siri and Alexa also use NLG to generate responses to queries.

Gmail’s autocomplete feature uses NLG in a very interesting way. When you’re typing a sentence, Gmail will provide you with a suggestion to complete the sentence, which you can select by pressing tab or tapping it. The suggestion takes into consideration the general topic of your email, which means there’s NLP involved too.
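Gmail’s feature relies on far larger neural models, but the core task is predicting the next words from what has been typed. As a rough sketch of that idea, here is a bigram model that counts which word most often follows another in a tiny, made-up corpus and greedily extends the sentence.

```python
from collections import Counter, defaultdict

# Tiny made-up corpus standing in for the text a real model would learn from.
CORPUS = [
    "thanks for the update",
    "thanks for the quick reply",
    "let me know if you have any questions",
    "let me know what you think",
]


def train_bigrams(lines):
    """Count, for every word, which words follow it and how often."""
    follows = defaultdict(Counter)
    for line in lines:
        words = line.split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows


def suggest_completion(follows, prefix, max_words=6):
    """Greedily append the most frequent next word, starting from the prefix."""
    words = prefix.split()
    suggestion = []
    while words and len(suggestion) < max_words:
        candidates = follows.get(words[-1])
        if not candidates:
            break
        # most_common breaks ties in favor of the word seen first.
        nxt = candidates.most_common(1)[0][0]
        suggestion.append(nxt)
        words.append(nxt)
    return " ".join(suggestion)


model = train_bigrams(CORPUS)
print(suggest_completion(model, "let me"))  # know if you have any questions
```

A bigram model only looks one word back, which is why real autocomplete systems use models that take the whole email into account.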

Some publications are using AI to write basic news reports. While some reporters have spun stories about how artificial intelligence will soon replace human writers, that claim couldn’t be further from the truth.

The technology behind those news-writing bots is NLG, which basically turns facts and figures into stories by following the style that human reporters use to write reports. It can’t come up with new ideas, write features that recount personal experiences and stories, or write op-eds that introduce and elaborate on an opinion.
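Many automated news systems are, at heart, templates filled from structured data. The sketch below assumes a hypothetical earnings record (the company name and figures are invented) and picks its wording based on the numbers. Everything this "reporter" can say is fixed in advance by whoever wrote the templates.

```python
def describe_change(previous, current):
    """Turn two numbers into a phrase such as 'up 12.5%' (assumes previous > 0)."""
    change = (current - previous) / previous * 100
    if change > 0:
        return f"up {change:.1f}%"
    if change < 0:
        return f"down {-change:.1f}%"
    return "unchanged"


def compare_to_estimate(actual, estimate):
    """Choose the verb a reporter would use for earnings vs. expectations."""
    if actual > estimate:
        return "beat"
    if actual < estimate:
        return "missed"
    return "matched"


def earnings_story(report):
    """Fill a fixed two-sentence template from a structured earnings record."""
    company = report["company"]
    quarter = report["quarter"]
    revenue = report["revenue"]
    eps = report["eps"]
    estimate = report["eps_estimate"]
    trend = describe_change(report["prev_revenue"], revenue)
    verb = compare_to_estimate(eps, estimate)
    return (
        f"{company} reported {quarter} revenue of ${revenue:.1f} million, "
        f"{trend} from a year earlier. Earnings of ${eps:.2f} per share "
        f"{verb} analysts' estimate of ${estimate:.2f}."
    )


EXAMPLE_REPORT = {
    "company": "Example Corp",
    "quarter": "third-quarter",
    "revenue": 45.0,
    "prev_revenue": 40.0,
    "eps": 0.42,
    "eps_estimate": 0.40,
}
print(earnings_story(EXAMPLE_REPORT))
```

The output reads like a wire report, but the program only chooses between "up", "down" and "unchanged" or between "beat", "missed" and "matched"; it has no notion of why the numbers moved.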

Another interesting case study is Google’s Duplex. Google’s AI assistant puts both the capabilities and the limits of artificial intelligence’s grasp of human language on display. Duplex combines speech-to-text, NLP, NLG and voice synthesis in a clever way, duping many people into believing it can interact like a human caller.

But Google Duplex is narrow artificial intelligence, which means it will be good at performing the type of tasks the company demoed, such as booking a restaurant or setting an appointment at a salon. These are domains where the problem space is limited and predictable. There are only so many things you can say when reserving a table at a restaurant.
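Part of what makes a narrow domain tractable is that the system only has to listen for a handful of details. As a hypothetical sketch (this is not how Duplex is implemented), a reservation request can be reduced to two slots, party size and time, pulled out with regular expressions. The patterns and slot names are assumptions made for illustration.

```python
import re

# Hypothetical slot patterns for one narrow domain: restaurant bookings.
NUMBER_WORDS = {
    "one": 1, "two": 2, "three": 3, "four": 4, "five": 5,
    "six": 6, "seven": 7, "eight": 8, "nine": 9, "ten": 10,
}
PARTY_RE = re.compile(
    r"\b(?:table for|party of)\s+(\d+|" + "|".join(NUMBER_WORDS) + r")\b"
)
TIME_RE = re.compile(r"\bat\s+(\d{1,2})(?::(\d{2}))?\s*(am|pm)\b")


def extract_reservation(utterance):
    """Fill the party-size and time slots; anything else in the sentence is ignored."""
    text = utterance.lower()
    slots = {"party_size": None, "time": None}

    party = PARTY_RE.search(text)
    if party:
        value = party.group(1)
        slots["party_size"] = int(value) if value.isdigit() else NUMBER_WORDS[value]

    when = TIME_RE.search(text)
    if when:
        hour = int(when.group(1)) % 12  # 12 am -> 0; 12 pm becomes 12 below
        if when.group(3) == "pm":
            hour += 12
        minute = int(when.group(2) or 0)
        slots["time"] = f"{hour:02d}:{minute:02d}"  # 24-hour clock

    return slots


print(extract_reservation("Hi, I'd like a table for four at 7:30 pm on Friday."))
# {'party_size': 4, 'time': '19:30'}
print(extract_reservation("Do you have anything vegan on the menu?"))
# {'party_size': None, 'time': None}
```

Within the domain, a few patterns go a long way. Step outside it, as the second request does, and the system has literally nothing to say.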

But Duplex doesn’t understand the context of its conversations. It is merely converting human language to computer commands and computer output into human language. It won’t be able to carry out meaningful conversations about abstract topics, which can take unpredictable directions.

Some companies that exaggerated the language processing and generation capabilities of their AI ended up hiring humans to fill the gap.

Machine translation

In 2016, The New York Times Magazine ran a long feature that explained how AI, or more specifically deep learning, had enabled Google’s popular translation engine to take leaps in accuracy. To be sure, Google Translate has improved immensely.

But AI-powered translation has its own limits, which I also experience on a regular basis. Neural networks translate between languages using a mechanical, statistical process. They examine the patterns in which words and phrases appear in the target language and try to choose the most probable one when translating. In other words, they’re mapping based on mathematical values, not translating the meaning of the words.
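To make "mapping based on mathematical values" concrete, here is a toy in the spirit of older statistical translation systems (modern engines use neural networks, but they too pick outputs by score). The French word "avocat" can mean "lawyer" or "avocado". The sketch multiplies a hypothetical translation probability by a made-up count of how often each candidate appears next to an already-translated neighboring word, and keeps the highest product. All numbers are invented.

```python
# Hypothetical translation probabilities P(english | french).
TRANSLATION_TABLE = {
    "avocat": {"lawyer": 0.6, "avocado": 0.4},
}

# Hypothetical counts of how often an English word appears next to a context word.
CO_OCCURRENCE = {
    ("lawyer", "court"): 50,
    ("avocado", "court"): 1,
    ("lawyer", "salad"): 1,
    ("avocado", "salad"): 80,
}


def translate_word(french_word, context_word, smoothing=1):
    """Pick the candidate with the highest translation score times context score."""
    candidates = TRANSLATION_TABLE[french_word]

    def score(english_word):
        # Add-one smoothing so unseen pairs don't zero out a candidate.
        context = CO_OCCURRENCE.get((english_word, context_word), 0) + smoothing
        return candidates[english_word] * context

    return max(candidates, key=score)


print(translate_word("avocat", "court"))      # lawyer
print(translate_word("avocat", "salad"))      # avocado
print(translate_word("avocat", "guacamole"))  # lawyer
```

The last case shows the mechanical nature of the process: with a context word it has no statistics for, the toy falls back on the translation that is more frequent overall, with no idea what the sentence is about.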

In contrast, when humans perform translation, they take into consideration the culture and context of languages and the history behind words and proverbs. They research the background of the topic before choosing their words. It’s a very complicated process that involves a lot of common sense and abstract understanding, none of which contemporary AI possesses.

Douglas Hofstadter, professor of cognitive science and comparative literature at Indiana University at Bloomington, unpacks the limits of AI translation in this excellent piece in The Atlantic.

To be clear, AI translation has plenty of very practical uses. I use it frequently to speed up my work when translating from French to English. It’s almost perfect when translating simple, factual sentences.

For instance, if you’re communicating with people who don’t speak your language and you’re more interested in grasping the meaning of a sentence than in the quality of the translation, AI applications such as Google Translate can be a very useful tool.

But don’t expect AI to replace professional translators any time soon.

What we need to know about AI’s understanding of human language

First of all, we need to acknowledge the limits of deep learning, which for the moment is the cutting edge of artificial intelligence. Deep learning doesn’t understand human language. Period. Things might change when someone cracks the code to create AI that can make sense of the world like the human mind does, also known as general AI. But that won’t happen anytime soon.

As most of the examples show, AI is a technology for augmenting humans and can help speed up or ease tasks that involve the use of human language. But it still lacks the common sense and abstract problem-solving capabilities that would enable it to fully automate disciplines that require mastery of human language.

So the next time you see an AI technology that sounds, looks and acts very human-like, look into the depth of its grasp of the human language. You’ll be better positioned to understand its capabilities and limits. Looks can be deceiving.

This story is republished from TechTalks, the blog that explores how technology is solving problems… and creating new ones.
