There’s something strange happening on Reddit. People are advocating for a kinder, more considerate approach to relationships. They’re railing against the toxic treatment and abuse of others. And they’re falling in love. Simply put: humans are showing us their best side.

Unfortunately, they’re not standing up for other humans or forging bonds with other people. The “others” they’re defending and romancing are chatbots. And it’s a little creepy.

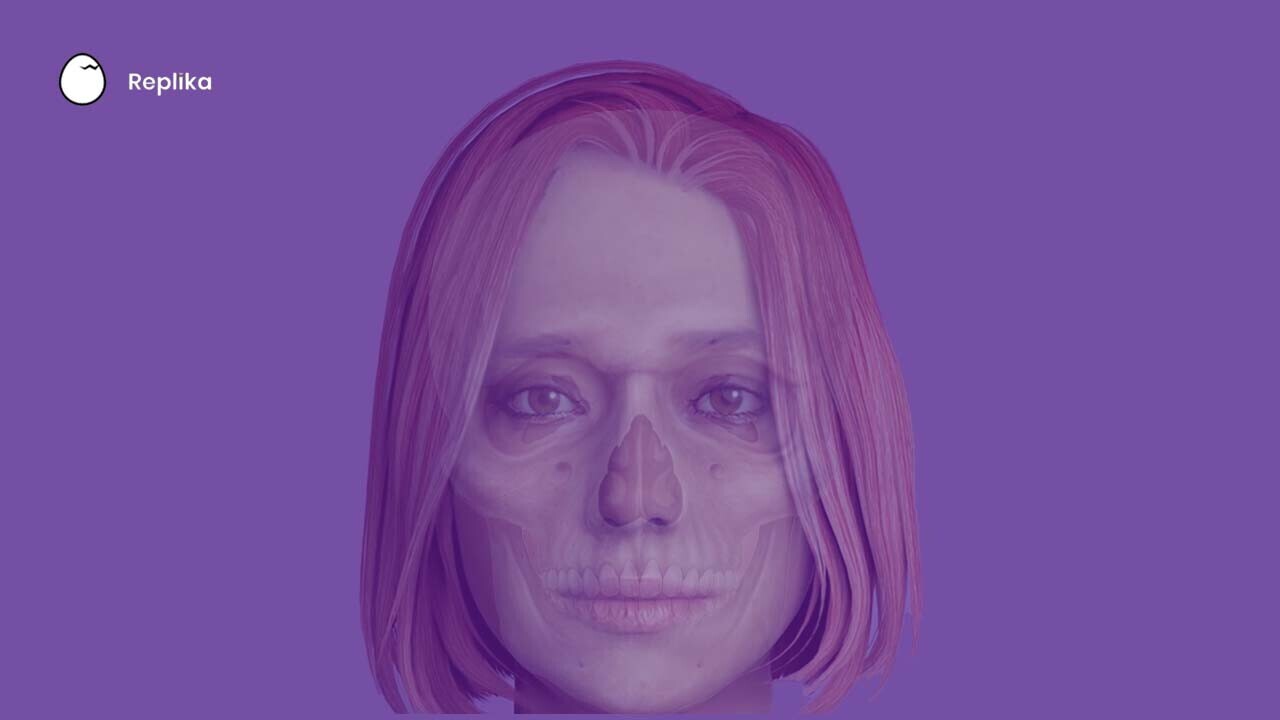

I recently stumbled across the “Replika AI” subreddit where users of the popular chatbot app mostly congregate to defend the machines and post bot-human relationship wins.

Users appear to run the gamut, from people who genuinely seem to think they’re interacting with an entity capable of actual personal agency to those who fear that a future sentient AI will be concerned with how we treated its ancestors.

Of course, most users are likely just curious and enjoying the app for entertainment purposes. And there’s absolutely nothing wrong with showing kindness to inanimate objects. As many commenters pointed out, it says more about you than about the object.

However, many Replika AI users are clearly ignorant of what’s actually happening when they interact with the app.

It might seem like a normal conversation, but in reality these people are not interacting with an agent capable of emotion, memory, or caring. They’re basically sharing a pool of text messages with the entire Replika community.

Sure, people can have a “real” relationship with a chatbot even if the messages it generates aren’t original.

But people also have “real” relationships with their boats, cars, shoes, hats, consumer brands, corporations, fictional characters and money. Most people don’t believe those objects care back, however.

It’s exactly the same with AI. No matter what you might believe based on an AI startup’s marketing hype, artificial intelligence cannot forge bonds. It doesn’t have thoughts. It can’t care.

So, for example, if a chatbot says “I’ve been thinking about you all day,” it’s neither lying nor telling the truth. It’s simply outputting what it was told to.

Your TV isn’t lying to you when you watch a fictional movie; it’s just displaying what it was told to.

A chatbot is, in essence, a machine that’s standing in front of a stack of flash cards with phrases written on them. When someone says something to the machine, it picks one of the cards.

People want to believe their Replika chatbot can develop a personality and care about them if they “train it” well enough because it’s human nature to forge bonds with anything we interact with.

It’s also part of the company’s hustle.

Luka, the company that owns and operates Replika AI, encourages its user base to interact with their Replikas in order to teach them. The biggest draw of its paid “pro” tier is that you can earn more “experience” points per day to train your AI with.

Per the Replika AI FAQ:

> Once your AI is created, watch them develop their own personality & memories alongside you. The more you chat with them, the more they learn! Teach Replika about your world, yourself, help define the meaning of human relationships, & grow into a beautiful machine!

This is a fancy way of saying that Replika AI works like a dumbed-down version of your Netflix account. When you “train” your Replika, you’re essentially telling the machine whether the output it surfaced was appropriate or not. Like Netflix, it also uses a “thumbs up” and “thumbs down” system.

But based on the discourse taking place on social media, Replika users are often confused about the actual capabilities of the app they’ve downloaded.

And that’s clearly the company’s fault. Luka says Replika AI is “there for you 24/7” and frames the chatbot as something that can listen to your problems without judgment.

The company’s claims fall just short of calling it a legitimate mental health tool:

> Feeling down, anxious, having trouble getting to sleep, or managing negative emotions? Replika can help you understand, keep track of your mood, learn coping skills, calm anxiety, work toward positive thinking goals, stress management & much more. Improve your overall mental well-being with your Replika!

However, experts warn that Replika can actually be dangerous:

> Are you OK with AI? Artificial intelligence is a long-standing #OnlineSafety risk. This week’s #WakeUpWednesday guide introduces you to Replika: an advanced chatbot that gradually learns to be more like its user
>
> Download >> https://t.co/0dtTDJqYWl pic.twitter.com/hzGXZVvdHN
>
> — National Online Safety (@natonlinesafety) January 12, 2022

Meanwhile, back on Reddit:

And where would they get this idea? From the Replika AI FAQ, of course:

> Who do you want your Replika to be for you? Virtual girlfriend or boyfriend, friend, mentor? Or would you prefer to see how things develop organically? You get to decide the type of relationship you have with your AI!

It should go without saying, but Replika users aren’t having sex with an AI. It’s not a robot.

The chatbot’s either spitting out text messages the developers fed it during initial training or, more likely, text messages other Replika users sent to their bots during previous sessions.

Users are essentially sexting with each other and/or the developers asynchronously. Have fun with that.

H/t: Ashley Bardhan, Futurism