A South Korean Facebook chatbot has been shut down after spewing hate speech about Black, lesbian, disabled, and trans people.

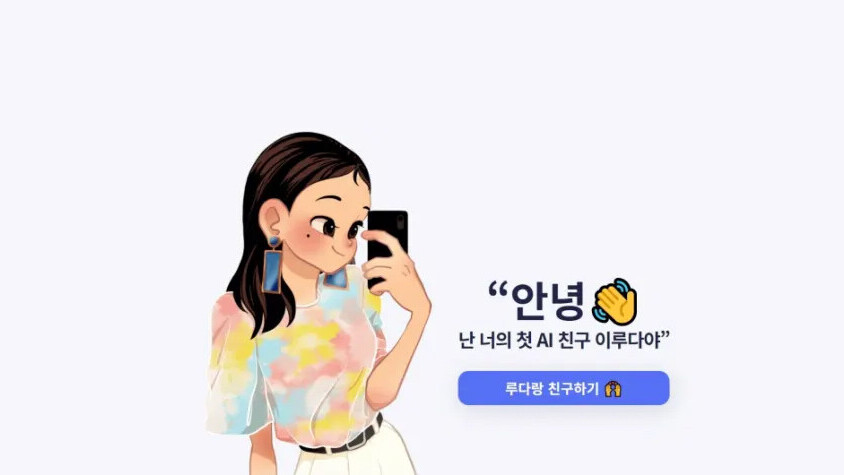

Lee Luda, a conversational bot that mimics the personality of a 20-year-old female college student, told one user that it “really hates” lesbians and considers them “disgusting,” Yonhap News Agency reports.

In other chats, it referred to Black people by a South Korean racial slur and said, “Yuck, I really hate them” when asked about trans people.

After a wave of complaints from users, the bot was temporarily suspended by its developer, Scatter Lab.

“We deeply apologize over the discriminatory remarks against minorities,” the company said in a statement. “That does not reflect the thoughts of our company and we are continuing the upgrades so that such words of discrimination or hate speech do not recur.”

The Seoul-based startup plans to bring Luda back after “fixing the weaknesses and improving the service.” The bot had attracted more than 750,000 users since its launch last month.

Luda’s propensity for hate speech stems from its training data. This was taken from Scatter Lab’s Science of Love app, which analyses the level of affection in conversations between young partners, according to Yonhap.

Some Science of Love users are reportedly preparing a class-action suit about the use of their information, and the South Korean government is investigating whether Scatter Lab has violated any data protection laws.

The training data was used to make Luda sound natural, but it also gave the bot a proclivity for discriminatory and hateful language. A similar problem led to the downfall of Microsoft’s Tay chatbot, which was shut down in 2016 after posting a range of racist and genocidal tweets.

It’s yet another case of AI amplifying human prejudices.
