Bigger isn’t always better, but sometimes it is. Cerebras Systems, a company bent on accelerating machine learning systems, built the world’s largest chip last year. In the time since, it’s developed bespoke solutions to some of the largest problems in the AI industry.

Founded in 2015, Cerebras is a sort of reunion tour for most of its C-suite executives. Prior to building chips the size of dinner plates, the team was responsible for SeaMicro, a company founded in 2007 that eventually sold to AMD for more than $330 million in 2012.

Cerebras Systems is now valued at more than $4 billion.

I interviewed Cerebras Systems co-founder and CEO Andrew Feldman to find out how his company was bellying up to the hardware bar in 2022 — an era marked by big tech’s domination of the AI industry.

Feldman seemed unaffected by the prospect of competition from Silicon Valley’s elite.

According to him, Cerebras was built from the ground up to take on all comers:

“From day one we wanted to be in the Computer History Museum. We wanted to be in the hall of fame.”

That might sound like hubris to the outsider, but the dog-eat-dog world (or more aptly, big-dog-buys-little-dog-and-absorbs-its-technology world) of computer hardware is no place for the meek.

In order for Cerebras to make its mark, Feldman and his team relied on their proven track record of success and ultra-high ambitions to secure entry into the market.

Per Feldman:

“We’re system builders, which means we are unafraid of difficult problems… if you’re 100-times better than (big tech’s best efforts) then there’s nothing they can do.”

So the big question is, are Cerebras’ humongous chips that much better than the competition’s?

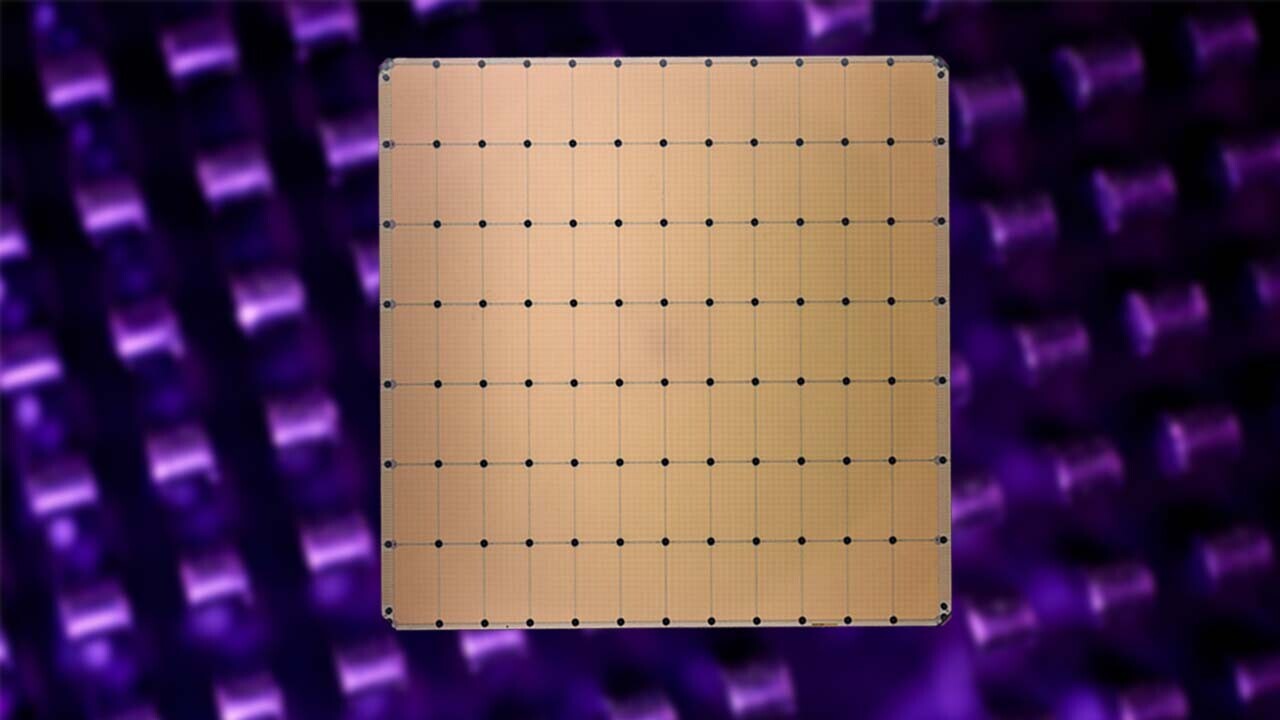

The answer: there really isn’t any competition. Its chips are more than 50X larger than the biggest GPU on the market (the kind of chip typically used to train machine learning systems).

According to Cerebras’ website, the company’s CS-2 system, built on its WSE-2 “wafer” chips, can replace an entire rack of processors typically used to train AI models:

“A single CS-2 typically delivers the wall-clock compute performance of many tens to hundreds of graphics processing units (GPU), or more. In one system less than one rack in size, the CS-2 delivers answers in minutes or hours that would take days, weeks, or longer on large multi-rack clusters of legacy, general purpose processors.”

Based on the benchmarks the company has published, the CS-2 not only offers performance advantages but could also massively reduce the power consumed during AI training. That is a win both for businesses trying to solve extremely hard problems and for the planet.

To learn more about Cerebras Systems, check out the white paper for the CS-2 here and the company website here.
