Where there’s live content, there’s bound to be someone who takes joy in trolling it. A little over a year after its launch, Periscope today announced that viewers can now moderate comments to curb abuse and spam.

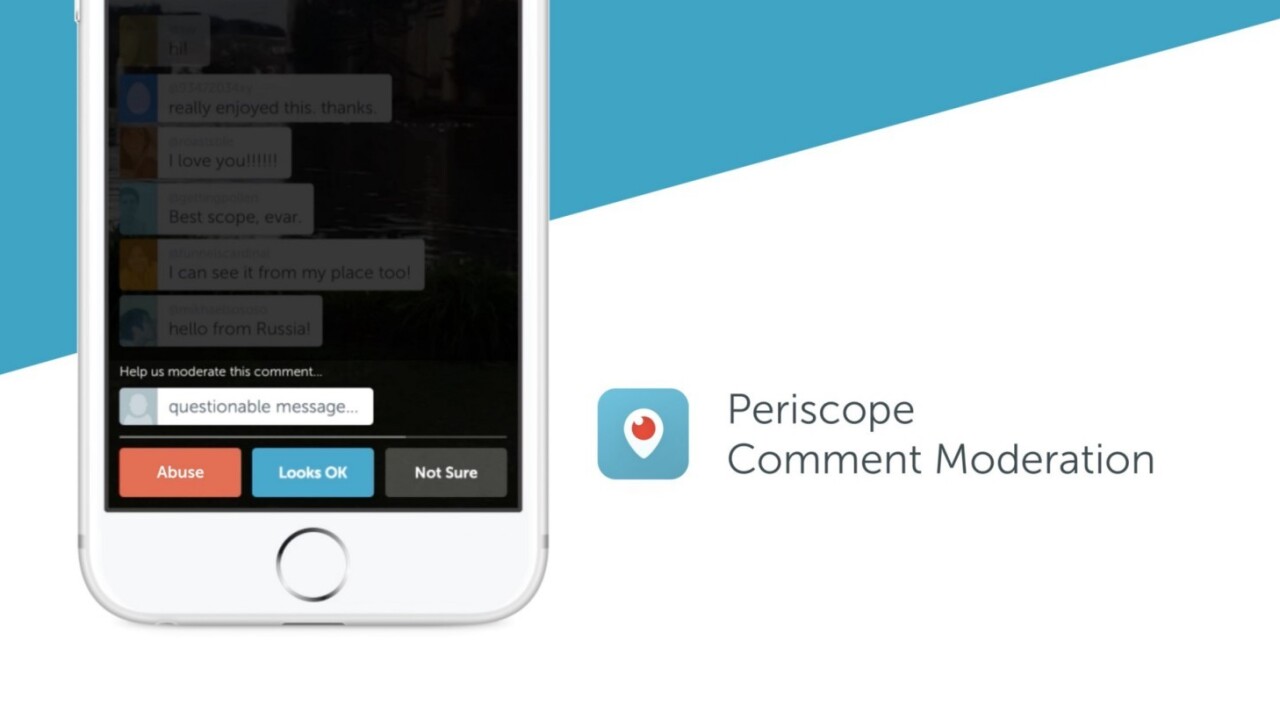

The system works like this: If you’re watching a stream and notice an inappropriate comment, you can flag it as “spam,” “abuse,” or “other reasons.” Then, Periscope will randomly select other viewers who watched the stream when that comment went live and ask them to vote on whether that comment truly was offensive.

The results of the vote are then revealed to the randomly selected viewers, and if the majority agrees the comment was abusive, the commenter will have their commenting rights temporarily disabled. If the offense is repeated, they’ll be banned for the rest of the stream. Periscope did not say whether it will ban users entirely if they are flagged regularly.
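For readers who want to see the flow spelled out, here is a minimal Python sketch of the process as described above. It is not Periscope's code: the class name, the method names, the jury size of five, and the choice to exclude the flagged commenter from the jury are all assumptions made for illustration.

```python
import random
from collections import defaultdict


class StreamModerator:
    """Hypothetical model of flag -> random jury -> majority vote -> penalty."""

    def __init__(self, jury_size=5, rng=None):
        self.jury_size = jury_size          # assumed value; Periscope gave no number
        self.rng = rng or random.Random()
        self.strikes = defaultdict(int)     # upheld flags per commenter, this stream only

    def pick_jury(self, viewers, flagger, commenter):
        # Randomly select other viewers who saw the comment.
        # Excluding the commenter is an assumption, not something Periscope stated.
        eligible = [v for v in viewers if v not in (flagger, commenter)]
        return self.rng.sample(eligible, min(self.jury_size, len(eligible)))

    def resolve(self, commenter, votes):
        # votes is a list of booleans, True meaning "this comment was abusive".
        abusive = sum(votes)
        if abusive * 2 <= len(votes):       # no strict majority: nothing happens
            return "no_action"
        self.strikes[commenter] += 1
        if self.strikes[commenter] == 1:    # first upheld flag
            return "temporarily_muted"
        return "banned_for_stream"          # repeat offense in the same stream
```

In this sketch a tie counts as no action, which is one possible reading of "the majority agrees"; the announcement does not say how ties or very small juries are handled.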

The system arrives after the service saw a recent string of disturbing broadcasts, such as livestreamed suicide and rape. Twitter also said it was working with Periscope to develop an algorithm to scan content. The tool is meant primarily to help users search for relevant videos, but it may also help flag inappropriate content so it can be removed swiftly.
