In this post, I’ll show you how to use beginner-friendly ML tools – Semantic Reactor and TensorFlow.js – to build an app that’s powered by natural language.

NEW: Semantic Reactor has been officially released! Add it to Google Sheets here.

Most people are better at describing the world in language than they are at describing the world in code (well… most people). It would be nice, then, if machine learning could help bridge the gap between the two.

That’s where “Semantic ML” comes in, an umbrella term for machine learning techniques that capture the semantic meaning of words or phrases. In this post, I’ll show you how you can use beginner-friendly tools (Semantic Reactor and Tensorflow.js) to prototype language-powered apps fast.

[Read: How to protect AI systems against image-scaling attacks]

Scroll down to dive straight into the code and tools, or keep reading for some background:

Understanding semantic ML

What are embeddings?

One simple (but powerful) way Semantic ML can help us build natural-language-powered software is through a technique called embeddings.

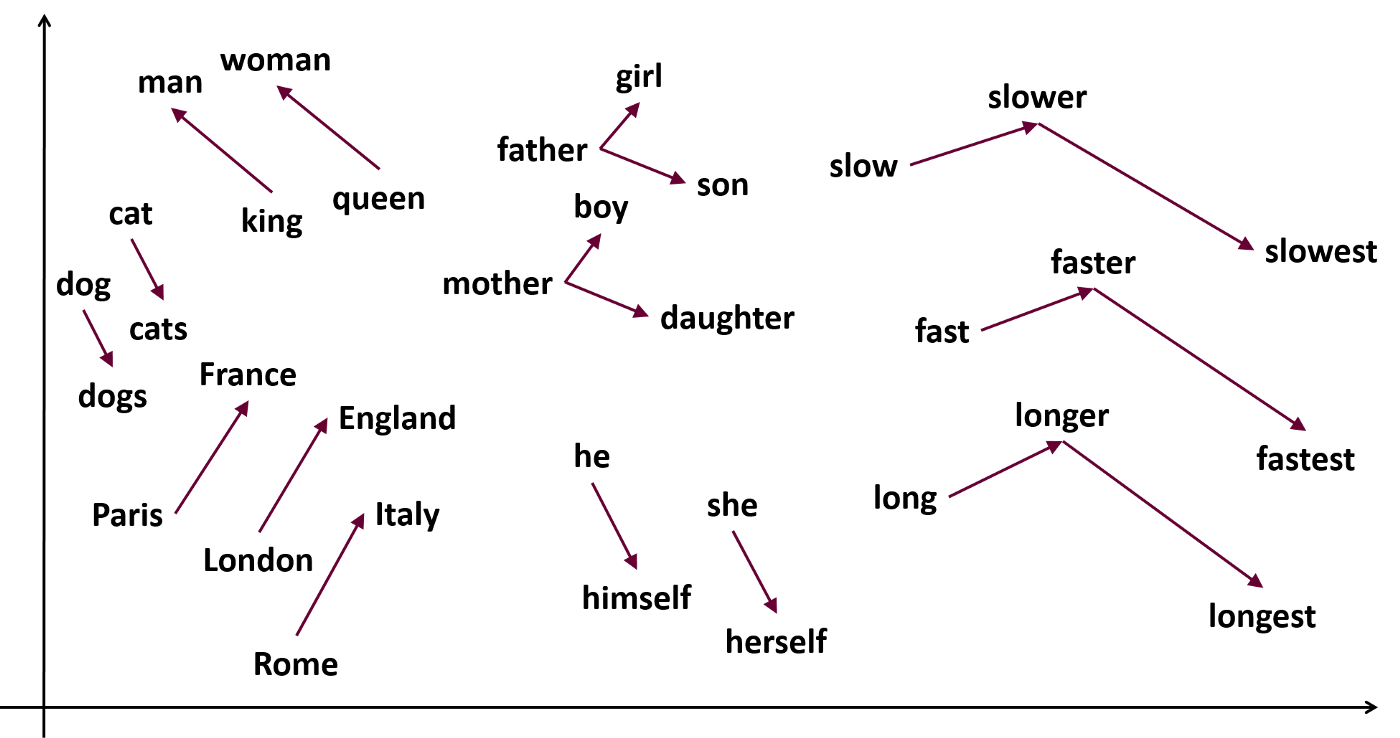

In machine learning, embeddings are a learned way of representing data in space (i.e. points plotted on an n-dimensional grid) such that the distances between points are meaningful. Word vectors are one popular example:

The picture above shows words (“England,” “he,” “fast”) plotted in space such that similar words (“dog” and “dogs,” “Italy” and “Rome,” “woman” and “queen”) are near each other. Each word is represented by a set of coordinates (or a vector), so the word “queen” might be represented by the coordinates [0.38, 0.48, 0.99, 0.48, 0.28, 0.38].

Check out this neat visualization.

Where do these numbers come from? They’re learned by a machine learning model through data. In particular, the model learns which words tend to occur in the same spots in sentences. Consider these two sentences:

My mother gave birth to a son.

My mother gave birth to a daughter.

Because the words “daughter” and “son” are often used in similar contexts, the machine learning model will learn that they should be represented close to each other in space.

Word embeddings are extremely useful in natural language processing. They can be used to find synonyms (“semantic similarity”), to do clustering, or as a preprocessing step for a more complicated NLP model.

Embedding whole sentences

It turns out that entire sentences (and even short paragraphs) can be effectively embedded in space too, using a type of model called a universal sentence encoder. Using sentence embeddings, we can figure out if two sentences are similar. This is useful, for example, if you’re building a chatbot and want to know if a question a user asked (i.e. “When will you wake me up?”) is semantically similar to a question you–the chatbot programmer–have anticipated and written a response to (“What time is my alarm?”).

Semantic reactor: Prototype with semantic ML in a Google sheet

Alright, now on to the fun part – building things!

First, some inspiration: I originally became excited by Semantic ML when Anna Kipnis (former Game Designer at Double Fine, now at Stadia/Google) showed me how she used it to automate video game interactions. Using a sentence encoder model, she built a video game world that infers how the environment should react to player inputs using ML. It blew my mind. Check out our chat here:

In Anna’s game, players interact with a virtual fox by asking any question they think of:

“Fox, can I have some coffee?”

Then, using Semantic ML, the game engine (or the utility system) considers all of the possible ways the game might respond:

Fox turns on lights.

Fox turns on radio.

Fox move to you.

Fox brings you mug.

Using a sentence encoder model, the game decides what the best response is and executes it (in this case, the best response is Fox brings you mug, so the game animates the Fox bringing you a mug). If that sounds a little abstract, definitely watch the video I linked above.

One of the neatest aspects of this project was that Anna prototyped it largely in a Google Sheet using a tool called Semantic Reactor.

Semantic Reactor is a plugin for Google Sheets that allows you to use sentence encoder models right on your own data, in a sheet. It was just released, and you can add it to your Google Sheets here. It’s a really great way to prototype Semantic ML apps fast, which you can then turn into code using TensorFlow.js models (but more on that in a minute).

Here’s a little gif of what the tool looks like:

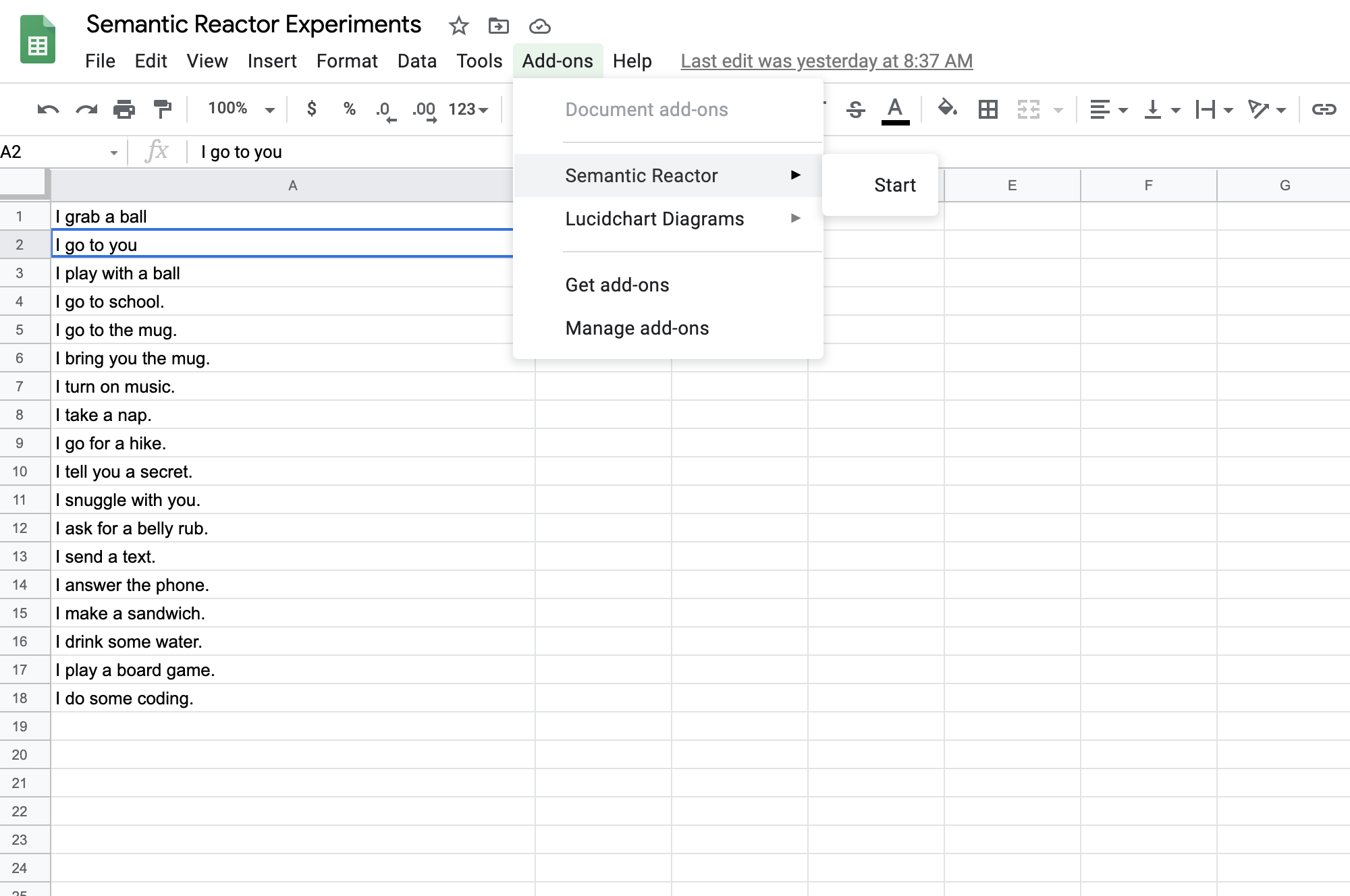

To use Semantic Reactor, create a new Google sheet and write some sentences in the first column. Here, I’ll loosely recreate Anna’s fox demo (for all the nitty gritties, check out her original post). I put these sentences in the first column of my Google sheet:

I grab a ball

I go to you

I play with a ball

I go to school.

I go to the mug.

I bring you the mug.

I turn on music.

I take a nap.

I go for a hike.

I tell you a secret.

I snuggle with you.

I ask for a belly rub.

I send a text.

I answer the phone.

I make a sandwich.

I drink some water.

I play a board game.

I do some coding.

You’ll have to use your imagination here and think of these “actions” that a potential character (e.g. a chatbot or an actor in a video game) might take.

Once you’ve added Semantic Reactor to Sheets, you’ll be able to enable it by clicking on “Add-ons -> Semantic Reactor -> Start.”

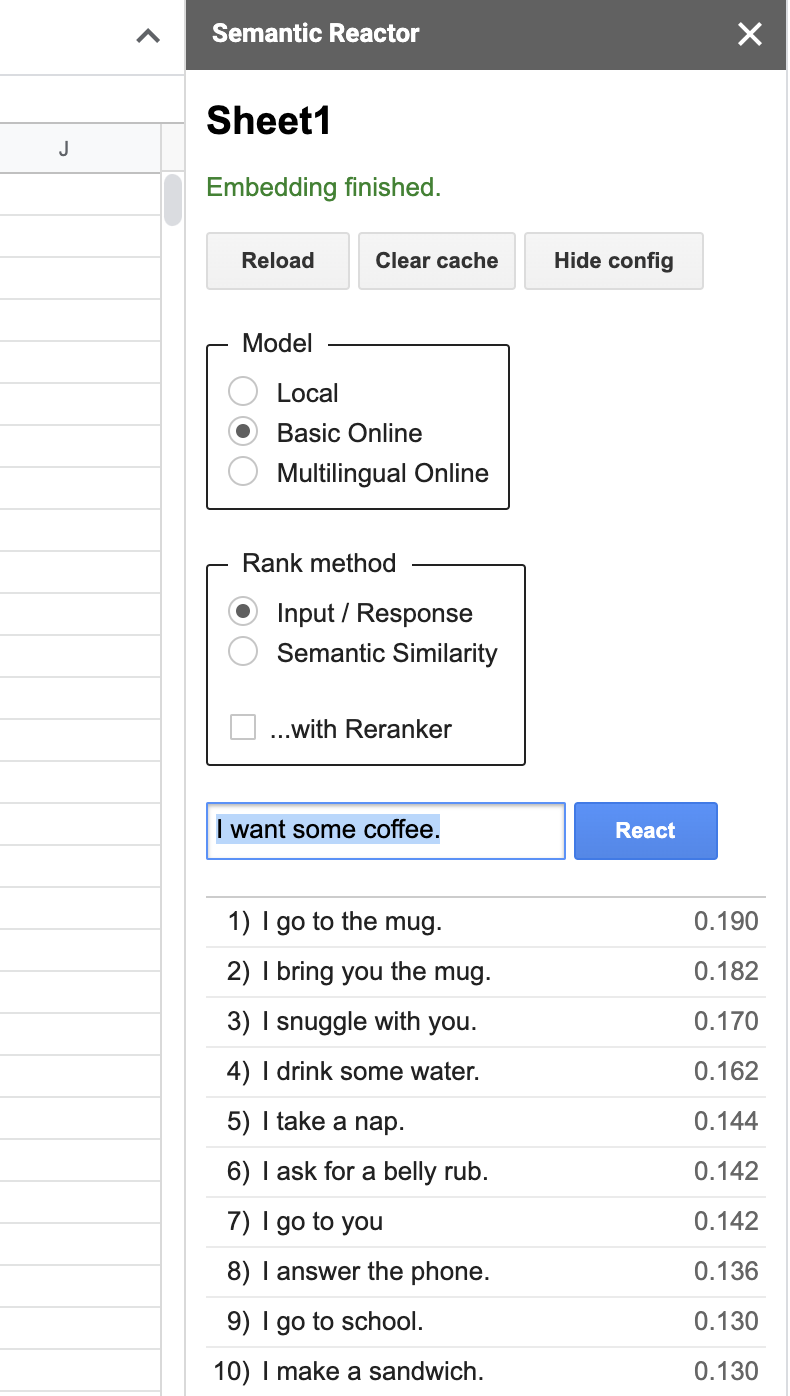

Clicking “Start” will open a panel that allows you to type in input and hit “React:”

When you hit “React,” Semantic Reactor uses a model to embed all of the responses you’ve written in that first column, calculate a score (how good a response is this sentence to the query?), and sorts the results. For example, when my input was “I want some coffee,” the top-ranked responses from my spreadsheet were, “I go to the mug” and “I bring you the mug.”

You’ll also notice that there are two different ways to rank sentences using this tool: “Input/Response” and “Semantic Similarity.” As the name implies, the former ranks sentences by how good they are as responses to the given query, whereas “Semantic Similarity” simply rates how similar the sentences are to the query.

From spreadsheet to code with TensorFlow.js

Underneath the hood, Semantic Reactor is powered by the open-source TensorFlow.js models found here.

Let’s take a look at how to use those models in JavaScript, so that you can convert your spreadsheet prototype into a working app.

1 – Create a new Node project and install the module:

2 – Create a new file (use_demo.js) and require the library:

3 – Load the model:

4 – Encode your sentences and query:

5- Voila! You’ve transformed your responses and query into vectors. Unfortunately, vectors are just points in space. To rank the responses, you’ll want to compute the distance between those points (you can do this by computing the dot product, which gives you the squared Euclidean distance between points):

If you run this code, you should see output like:

Check out the full code sample here.

And that’s it – that’s how you go from a Semantic ML spreadsheet to code fast!

Pretty cool, right? If you build something with these tools, make sure you let me know in the comments below or on Twitter.

This article was written by Dale Markowitz, an Applied AI Engineer at Google based in Austin, Texas, where she works on applying machine learning to new fields and industries. She also likes solving her own life problems with AI, and talks about it on YouTube.

Get the TNW newsletter

Get the most important tech news in your inbox each week.