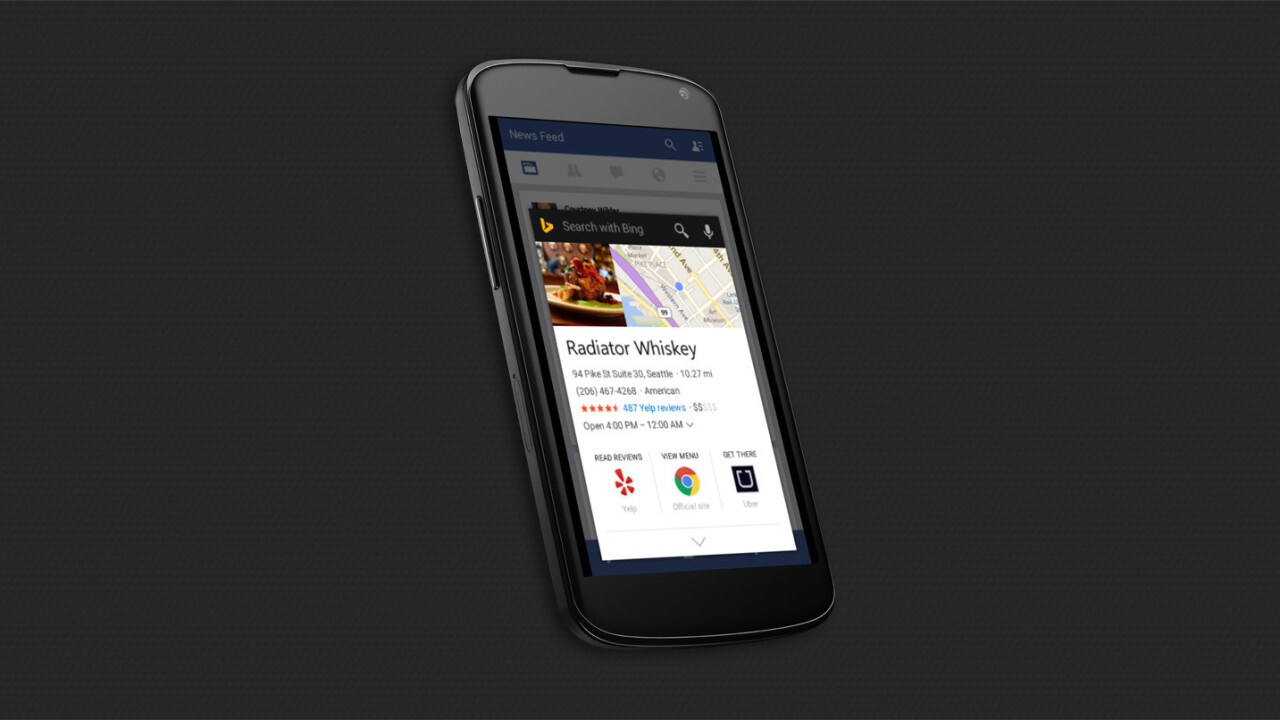

Microsoft just beat Google at its own game: the company has updated Bing Search on Android with a new feature that analyzes what’s on your screen to bring you useful, relevant information without the need to switch apps.

Google has promised similar functionality in its upcoming Android Marshmallow release, with a feature called Now on Tap. But Microsoft has beaten it to the punch, and its version doesn’t require developers to integrate any code into their apps for it to work.

The new Bing feature works with any app: just long-press the home button and it will read the contents of your screen — images and all — and display related information in an overlay.

Say, for example, you’re chatting with a friend about your next vacation destination. Invoke Bing and it’ll pull in details about the location you mentioned, as well as links to connected apps and services like Lonely Planet.

It draws from the search engine’s extensive knowledge and action graph, which contains over 21 billion facts and 5 billion relationships between people, places and things. The idea is that you shouldn’t have to leave your current app to search for information you’ve just come across.

Microsoft is also opening up the graph to developers with a new API that they can plug into their apps. This will allow for functionality like automatically adding location information to a photo a user is sharing on a social network, and displaying artist profiles and news in a music app.

Microsoft says it’s launching the new feature first on Android and will soon bring it to Windows 10 on desktop and mobile devices. The company is also exploring options to extend it to other platforms like iOS.

Developers can request early access to the new API by writing to Microsoft; the company will make it available through its Dev Center in the fall.

➤ Bing Search [Android via Bing Dev Center]
