Across the United States, millions of progressives and proponents of equality cheered (or at least had a good chuckle) earlier this month when Facebook, Apple, Spotify, and YouTube collectively banned alt-right conspiracy theorist and hate-speech-spewing Alex Jones from their respective platforms.

In the words of Spotify, this was done because his brand “expressly and principally promotes, advocates, or incites hatred or violence against a group or individual based on characteristics.” In the words of Apple, “Apple does not tolerate hate speech, and we have clear guidelines that creators and developers must follow to ensure we provide a safe environment for all of our users.”

And to the average consumer who’s heard about Jones’s denial of the Sandy Hook Elementary School shooting, or about his assertion that Barack Obama and Hillary Clinton are literally demons (or possessed by them), these bans seem pretty reasonable.

But even as someone who’s ecstatic that there’s one less source of hate-filled drivel on the internet, I’m troubled, because banning problematic accounts isn’t the answer.

The dangers of tech collusion

It appears that decision-makers at these major tech companies either colluded or independently arrived at the same decision. Either way, that’s a problem.

We live in an era when most of our news and information comes from these powerful sources. If they collectively have the power to determine what is and isn’t acceptable to say, that could create chaos or limit free speech down the line.

As Ben Wizner, director of the ACLU’s Speech, Privacy, and Technology Project, put it, “Progressives are being short-sighted if they think more censorship authority won’t come back to bite them.”

Most platforms cut off Alex Jones’s reach on the grounds that his posts were “hateful,” and I personally agree. But with such a vague standard, how will they identify the next “hateful” offender? And where is the line drawn? Apparently, only major tech companies get a say in that debate.

Even if these companies aren’t colluding, a single platform with millions of users still has enough power to shape our society. Decisions like this could jeopardize free expression in the future, and they deepen the echo-chamber crisis of the present.

Users of platforms like Facebook continue to be exposed only to sources they already agree with. Meanwhile, users with controversial views, like Jones’s followers, are forced to seek asylum on other platforms, where their numbers could make them a majority and give them the illusion that theirs is the dominant view.

The martyrdom effect

Let’s assume the vast majority of Americans think Alex Jones is a lunatic (I don’t have the stats in front of me, but I feel this is a safe assumption). It’s still a bad idea to completely ban his presence on major media channels, because it could strengthen his already-powerful martyrdom complex.

People who have built their reputations on a controversial set of core beliefs often find it easy to gain followers (or deepen the loyalty of existing ones) when they get to play the victim. If they’re persecuted for their beliefs, they can cast themselves as the underdog, the lone purveyor of truth, and the sympathetic hero their followers deserve. Getting banned lets Jones, and anyone like him, build up this rhetoric, ultimately giving them more power.

In fact, it may even play into his endgame. Imagine getting into a verbal confrontation with someone who, after a heated argument, tries to provoke you into hitting them. They know that despite all the nasty things they’ve said, if you throw the first punch, they’ll be the ones who get the sympathy.

How to change a mind

I’m working under the assumption that the end goal here is to eliminate hateful speech from the internet and, ideally, the asinine conspiracy theories bandied about by Jones and his ilk.

You can either block these types of posts from ever getting exposure (which these companies have done), or work to change people’s minds so they don’t feel compelled to make those posts in the first place. I’d argue it’s clearly better to change someone’s mind on a core issue like this. For example, I’d rather have two proponents of equality in a room with me than one proponent of equality and one racist with duct tape over his mouth.

You don’t change a mind by banning an ideology from being discussed. In fact, that kind of action is seen as combative, and combative positioning only serves to entrench existing beliefs, even when you can prove those beliefs wrong.

Instead, the best way to change a mind is through sympathetic, understanding conversation. But if you’re banning people from participating in an open forum, you rob them of that chance to engage with others, learn, and grow as people.

Obviously, social media isn’t the best place for productive discourse on either side, and you could argue that social media arguments entrench people in their positions more than radio silence would.

But I maintain that banning a group of people, or a powerful leader of a group, outright is only going to strengthen and embitter the people committed to those ideologies.

Alternative 1: trust indicators and contrary evidence

I’ve been complaining that this ban (and the bans sure to follow) is inherently flawed, so it’s only fair I offer a couple of alternative ways to handle the situation.

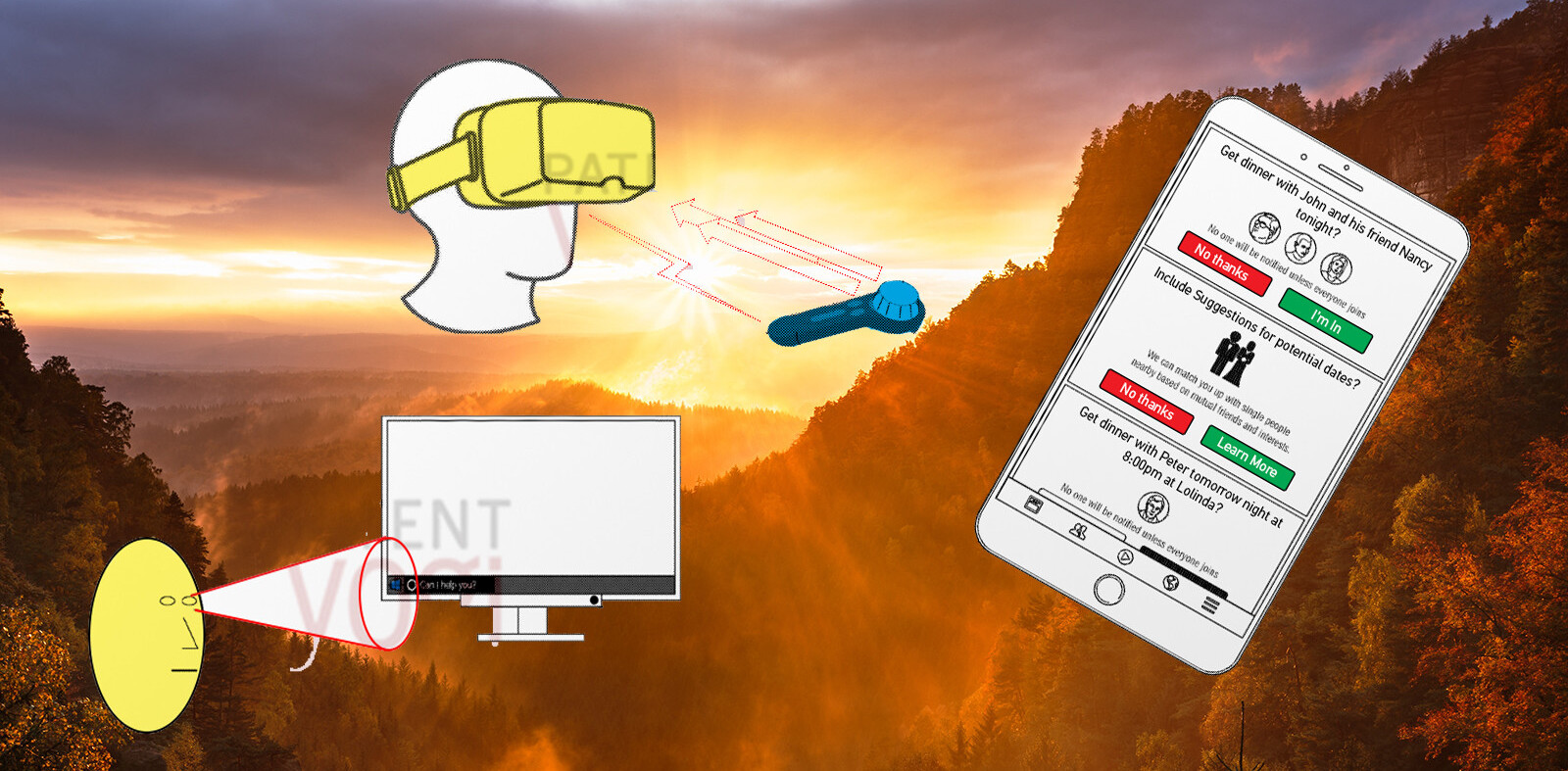

The first is a system of trust indicators, paired with intelligent presentation of contrary evidence. Rather than banning individual users for posting misinformation or hateful language, platforms could use a grading system of trust indicators, not unlike Google’s PageRank system for evaluating website authority, that shows social media users how trustworthy a given source is.

Or, if an algorithm detects a controversial or unsubstantiated claim, it can present contrary evidence side-by-side. This allows controversial figures to keep their voice, while still dismantling their influence.
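To make the PageRank comparison a little more concrete, here is a minimal sketch of how a trust score could be computed, assuming (hypothetically) that a platform has a graph of which sources link to or cite which others. It uses the classic power-iteration form of PageRank: a source earns trust when already-trusted sources point to it. The function names, the damping factor of 0.85, and the “high/medium/low” badge thresholds are all illustrative assumptions, not anything these platforms actually use, and the sketch leaves out the side-by-side contrary-evidence piece entirely.

```python
from typing import Dict, List


def trust_scores(links: Dict[str, List[str]], damping: float = 0.85,
                 iterations: int = 50) -> Dict[str, float]:
    """PageRank-style trust: a source scores higher when trusted sources link to it.

    `links` maps each source to the sources it links to (a hypothetical input).
    """
    sources = set(links) | {t for targets in links.values() for t in targets}
    n = len(sources)
    scores = {s: 1.0 / n for s in sources}  # start everyone equal
    for _ in range(iterations):
        # Every source keeps a small baseline share (the "random jump" term).
        new = {s: (1.0 - damping) / n for s in sources}
        for src in sources:
            targets = links.get(src, [])
            if targets:
                # Pass this source's trust on, split evenly among what it links to.
                share = damping * scores[src] / len(targets)
                for t in targets:
                    new[t] += share
            else:
                # A source that links nowhere spreads its trust evenly over everyone.
                for t in sources:
                    new[t] += damping * scores[src] / n
        scores = new
    return scores


def trust_label(source: str, scores: Dict[str, float]) -> str:
    """Hypothetical badge shown next to a post, relative to the average score."""
    avg = 1.0 / len(scores)
    if scores[source] >= avg:
        return "high"
    if scores[source] >= 0.5 * avg:
        return "medium"
    return "low"
```

For example, with `links = {"news_a": ["news_b"], "news_b": ["news_a"], "blog_x": ["news_a"]}`, nobody links to `blog_x`, so it ends up with the lowest score and a “low” badge, while its posts stay visible. That is the point of the design: the label informs readers instead of silencing the source.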

Alternative 2: democratized action

We can also mitigate the problem of corporate authority and collusion by democratizing action against offenders. Rather than a group of companies conspiring to execute a full-fledged ban, platforms could let users vote on what happens to offenders, with consequences ranging from limited access to the platform (such as fewer posts allowed per week) to a full ban (in the case of an extreme majority).
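Here is a rough sketch of how such a vote might translate into graduated consequences. Everything in it is a hypothetical policy choice made for illustration: the quorum of 1,000 votes, a simple majority triggering restricted access, a 90% “extreme majority” triggering a ban, and a cap of five posts per week for restricted accounts.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Action(Enum):
    NONE = "no action"
    LIMIT = "limited access"
    BAN = "full ban"


@dataclass
class VoteResult:
    votes_for_action: int  # users who voted that the account should face consequences
    votes_against: int     # users who voted to leave the account alone


# Hypothetical policy parameters, chosen only for illustration.
QUORUM = 1000               # minimum total votes before any action is taken
LIMIT_THRESHOLD = 0.5       # more than a simple majority -> restricted access
BAN_THRESHOLD = 0.9         # "extreme majority" -> full ban
LIMITED_POSTS_PER_WEEK = 5


def decide(result: VoteResult) -> Action:
    """Map a community vote onto a graduated consequence."""
    total = result.votes_for_action + result.votes_against
    if total < QUORUM:
        return Action.NONE  # too few voters to act on
    share = result.votes_for_action / total
    if share >= BAN_THRESHOLD:
        return Action.BAN
    if share > LIMIT_THRESHOLD:
        return Action.LIMIT
    return Action.NONE


def weekly_post_cap(action: Action) -> Optional[int]:
    """Posts allowed per week: None means unlimited, 0 means banned."""
    if action is Action.BAN:
        return 0
    if action is Action.LIMIT:
        return LIMITED_POSTS_PER_WEEK
    return None
```

Under these made-up numbers, a 700-to-300 vote would limit an account to five posts a week, while only a 950-to-50 vote would remove it outright. The quorum is there so a small, motivated group can’t punish someone on its own, though any real system would need far more safeguards against brigading.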

Any model for dealing with abusive and toxic users on social media will have its problems, but if we work together, I have to believe there’s a better solution than outright censorship.

Let’s resist the temptation to take the easy way out, and remember that a power exercised to make a sensible decision today could be used to make a much more destructive decision tomorrow.
