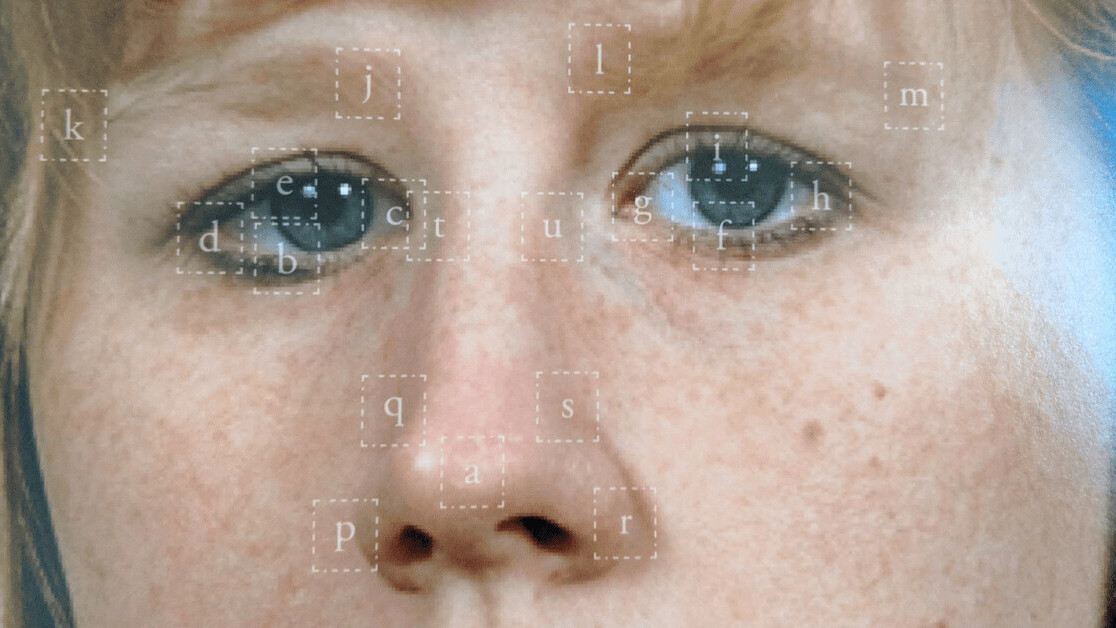

If you’ve uploaded any photos to the web in recent years, there’s a good chance they’ve been used to build facial recognition systems.

Developers routinely train facial recognition algorithms on images from websites — without the knowledge of the people who posted them.

A new online tool called Exposing.AI can help you find out if your photos are among the snaps they’ve scraped.

The system uses information from publicly available image datasets to determine if your Flickr photos were used in AI surveillance research.


Just enter your Flickr username, photo URL, or hashtag in the website’s search bar and the tool will scan through over 3.5 million photos for your pics.

The search engine checks whether your photos were included in the datasets by referencing Flickr identifiers such as username and photo ID. It doesn’t use any facial recognition to detect the images.

If it finds an exact match, the results are displayed on the screen. The images are then loaded directly from Flickr.com.
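The identifier-based lookup described above can be illustrated with a minimal Python sketch. This is not Exposing.AI's actual code: the dataset records, the `build_index` and `search` helpers, and the dataset names are hypothetical, and hashtag search is left out. The only outside assumption is the usual Flickr photo URL layout, `flickr.com/photos/<username>/<photo ID>`.

```python
import re
from collections import defaultdict

# Hypothetical dataset metadata: (dataset name, Flickr username, Flickr photo ID).
RECORDS = [
    ("dataset_a", "alice", "1234567890"),
    ("dataset_b", "alice", "1234567890"),
    ("dataset_a", "bob", "2222222222"),
]

# Extracts the username and numeric photo ID from a Flickr photo URL.
PHOTO_URL = re.compile(r"flickr\.com/photos/([^/]+)/(\d+)")


def build_index(records):
    """Map photo IDs to dataset names, and usernames to their photo IDs."""
    by_photo = defaultdict(set)
    by_user = defaultdict(set)
    for dataset, username, photo_id in records:
        by_photo[photo_id].add(dataset)
        by_user[username.lower()].add(photo_id)
    return by_photo, by_user


def search(query, by_photo, by_user):
    """Return {photo_id: sorted dataset names} for exact identifier matches.

    No image content is inspected: the query is treated as a photo URL
    if it looks like one, otherwise as a username.
    """
    match = PHOTO_URL.search(query)
    if match:
        photo_ids = {match.group(2)}
    else:
        photo_ids = by_user.get(query.strip().lower(), set())
    return {pid: sorted(by_photo[pid]) for pid in photo_ids if pid in by_photo}


by_photo, by_user = build_index(RECORDS)
print(search("https://www.flickr.com/photos/alice/1234567890/", by_photo, by_user))
print(search("bob", by_photo, by_user))
```

Because the match is on identifiers alone, a photo that was re-uploaded elsewhere or renamed would not be found, which is consistent with the tool reporting only exact matches.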

Exposing.AI is based on the MegaPixels art and research project, which explores the stories behind how biometric image training datasets are built and used.

The tool’s creators said the project is based on years of research on the tech:

“After tracking down and analyzing hundreds of these datasets a pattern emerged: millions of images were being downloaded from Flickr.com where permissive content licenses are encouraged and biometric data is abundant. Telling the complex story of how yesterday’s photographs became today’s training data is part of the goal of this project.”

Flickr is a logical target for the tool’s launch. The photo-sharing service is regularly used in AI research, from the MegaFace database of millions of images to what Yahoo called “the largest public multimedia collection that has ever been released.”

In 2019, IBM released a dataset of almost a million pictures scraped from Flickr without the uploaders’ consent.

The company said that the collection would help researchers reduce the rampant biases of facial recognition. But it could also be used to develop powerful surveillance tech.

“This is the dirty little secret of AI training sets,” NYU School of Law professor Jason Schultz told NBC News at the time. “Researchers often just grab whatever images are available in the wild.”

Rapid results

I tested out the tool on Flickr accounts that have shared photos with the public under a Creative Commons license. The second account I tried was spotted in the datasets.

Unfortunately, there isn’t much you can do once your photos have been scraped.

It’s not possible to remove your face from image datasets that have already been distributed, although some datasets allow you to request removal from future releases. The Exposing.AI team said they’ll soon include information about this process below your search results.

Exposing.AI also only works on Flickr and doesn’t cover every image training dataset used for facial recognition. The creators say future versions could include more search options.

At this point, the tool’s main power is exposing how our photos are used to develop facial recognition without our consent. Only changes to laws and company policies can prevent the practice.
