Facial recognition is permeating our everyday lives, but the tech is fraught with errors, biases, and privacy concerns.

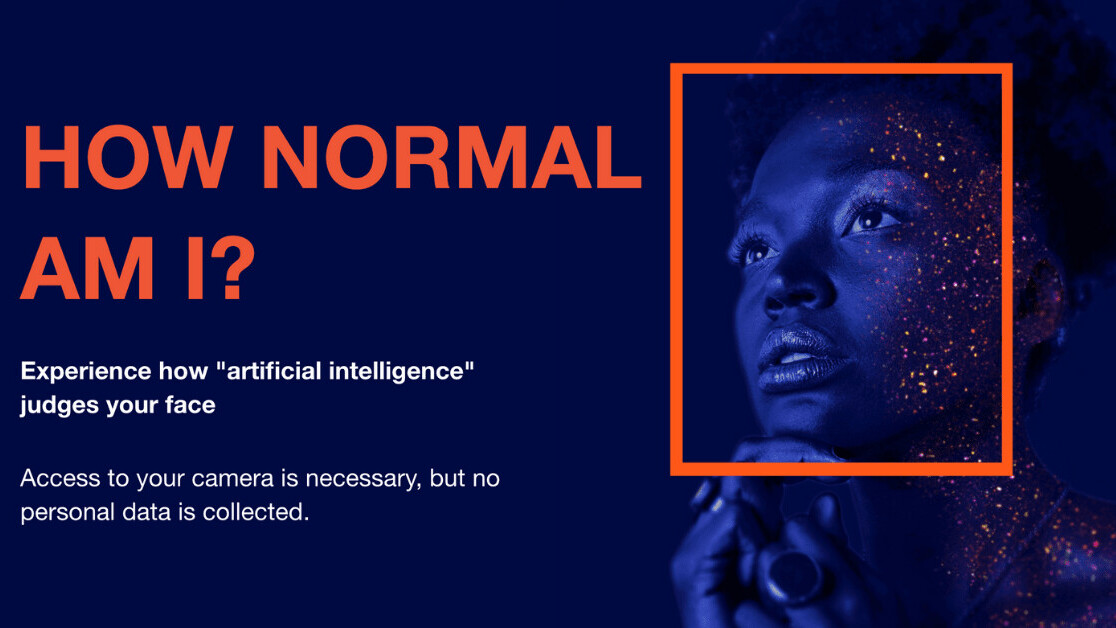

A new website called How Normal Am I? aims to shed light on the risks by using algorithms to judge your age, attractiveness, BMI, life expectancy, and gender.

Sharing your photo and all these sensitive inferences might appear a little reckless. But as the site promises not to collect any personal data or use any cookies, I naively gave it a go.

After agreeing to the terms and conditions — which, of course, I read in great depth — I’m welcomed to the site by Tijmen Schep, the artist-in-residence at SHERPA, an EU-funded project that explores how AI impacts ethics and human rights.

The site then displays some images of apparently innocent doggies (more on those devious pups later) while the system loads up, before asking me to face my webcam so it can rate my looks from 0 to 10.

Schep explains that dating apps such as Tinder use similar algorithms to match people they judge to be equally attractive, while social media platforms including TikTok have deployed them to promote content by good-looking people.

The algorithms are typically trained on thousands of photos that have been manually tagged with attractiveness scores, often by university students. As beauty standards can vary between countries and cultures, their perceptions can be embedded in the algorithms.

Schep explained on Hacker News that the main algorithm his site uses is FaceApiJS, a JavaScript API for face detection and recognition.

“In my own experience it doesn’t vary as wildly if you’re a white male,” he said. “With other ethnicities, the predictability levels can drop, especially if you’re Asian.”

As a white male, I should be relatively easy to assess. But I significantly boosted my score by moving closer to the camera. However, my attempts at a coquettish smile saw my looks brutally marked down.

These variations show the system is far from precise and open to manipulation — which is the whole point of the project.

“If you have a low score, it might just be because the judgment of these algorithms is so dependent on how they were trained,” explained Schep. “Of course, if you got a really high score, that’s just because you are incredibly beautiful.”

AI creep continues

The next algorithm judges your age. Schep says companies use these tools to learn more about shoppers, or to guess if someone’s lying about their age on dating sites. Again, the system proved simple to deceive: I knocked a decade off my age just by giving my head a shake.

The website goes on to infer my gender, another calculation that can vary depending on where the algorithm was developed. It then predicts your BMI, using the only algorithm Schep trained himself, which he did by feeding it a diet of 50% Chinese celebrities and 50% American arrest records. This estimate is made by measuring proportions of your face, such as the space above your eyes, so raising your eyebrows can lead your BMI to plummet.

The one consistent result I got was a ludicrously optimistic life expectancy of 81. Clearly, the algorithm can’t see my lungs and liver. But insurance agencies still use similar models to price their policies as they’re seen as better than nothing.

Finally, the system showed me my “face print,” a digital identifier that the likes of lovable Clearview AI use to match your photo to a vast repository of images.
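
For the curious, here is a rough sketch of how that kind of matching can work in principle. It assumes a face print is just a list of numbers and that two prints belong to the same person when the distance between them is small. The function names, the toy four-number prints, and the 0.6 cut-off are all made up for illustration; this is not the code behind the site or any real product.

```python
import math


def euclidean_distance(a, b):
    """Straight-line distance between two equal-length lists of numbers."""
    if len(a) != len(b):
        raise ValueError("face prints must have the same length")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def best_match(probe, gallery, threshold=0.6):
    """Find the stored print closest to `probe`.

    Returns (label, distance). The label is None when even the closest
    stored print is further away than `threshold` (an arbitrary cut-off
    chosen for this sketch).
    """
    best_label, best_dist = None, math.inf
    for label, stored in gallery.items():
        dist = euclidean_distance(probe, stored)
        if dist < best_dist:
            best_label, best_dist = label, dist
    if best_dist > threshold:
        return None, best_dist
    return best_label, best_dist


# Hypothetical repository of toy "face prints"; real ones are far longer.
gallery = {
    "person_a": [0.10, 0.80, 0.30, 0.50],
    "person_b": [0.90, 0.20, 0.70, 0.10],
}

# A new photo whose print is close to, but not identical to, person_a's.
probe = [0.12, 0.78, 0.33, 0.48]
label, dist = best_match(probe, gallery)
print(label, round(dist, 3))
```

The cut-off is where the trade-off lives: set it too loosely and strangers get matched to each other, too strictly and the same person in different lighting goes unrecognized.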

Schep then revealed the truth about those dogs: they were used to guess my emotional state by analyzing my facial expressions as I watched them — another way in which AI can make some seriously personal inferences.

“As face recognition technology moves into our daily lives, it can create this subtle but pervasive feeling of being watched and judged all the time,” said Schep. “You might feel more pressure to behave ‘normally’, which for an algorithm means being more average. That’s why we have to protect our human right to privacy, which is essentially our right to be different. You could say that privacy is a right to be imperfect.”

Using these tools can seem convenient and useful in the short term. But are the long-term risks to us as individuals and a society worth the potential benefits?

I don’t think so. Now, excuse me while I update my dating profile pictures.

Update October 7 6PM CET: The original version of this article implied that HireVue uses voices, facial expressions, and vocabulary to analyze a job candidate’s emotional state. HireVue has confirmed that this is not something the company does. We have corrected the article to reflect this, and regret the error.
